Question: (book #3.8) Suppose that we have an algorithm that takes as input a string of n bits. We are told


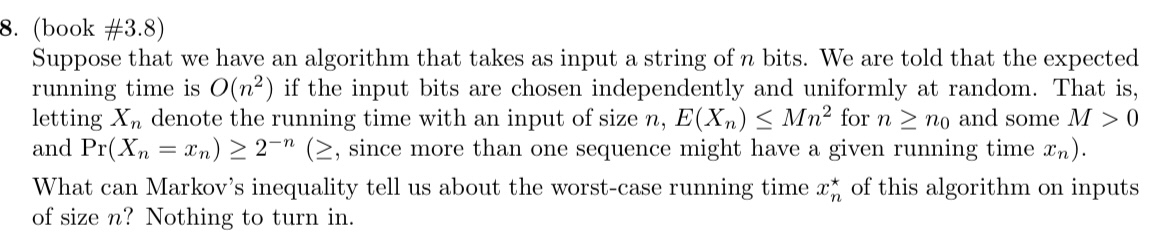

Suppose that we have an algorithm that takes as input a string of $n$ bits. We are told that the expected running time is $O(n^2)$ if the input bits are chosen independently and uniformly at random. That is, letting $T$ denote the running time with an input of size $n$, $E[T] \le c n^2$ for $n \ge n_0$ and some constants $c > 0$ and $n_0$. Since more than one sequence might have a given running time, any running time that occurs has probability at least $2^{-n}$.

What can Markov's inequality tell us about the worst-case running time of this algorithm on inputs of size $n$? (Nothing to turn in.)
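One way to approach the question is sketched below. The symbols $\mu_n$ (the expected running time on a uniformly random $n$-bit input), $W_n$ (the worst-case running time over all $n$-bit inputs), and the constant $c$ are notation introduced here for illustration and are not taken from the original statement. The last line assumes the expected running time is bounded as $\mu_n \le c\,n^2$. With a different bound on $\mu_n$, the same argument goes through with that bound substituted.

```latex
\begin{align*}
% Some input attains the worst case, and each n-bit input has probability 2^{-n}:
\Pr(T \ge W_n) &\ge 2^{-n} \\
% Markov's inequality for the nonnegative random variable T:
\Pr(T \ge W_n) &\le \frac{E[T]}{W_n} = \frac{\mu_n}{W_n} \\
% Combining the two bounds:
2^{-n} \le \frac{\mu_n}{W_n}
  \quad&\Longrightarrow\quad W_n \le 2^{n}\,\mu_n \\
% Under the assumed bound \mu_n \le c n^2:
W_n &\le c\,n^{2}\,2^{n} = O\!\left(n^{2}\,2^{n}\right)
\end{align*}
```

So Markov's inequality alone yields only an exponential upper bound on the worst case. A polynomial expected running time on random inputs does not rule out a few inputs that take exponentially long, because each individual input carries only $2^{-n}$ of the probability mass.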
