Question:

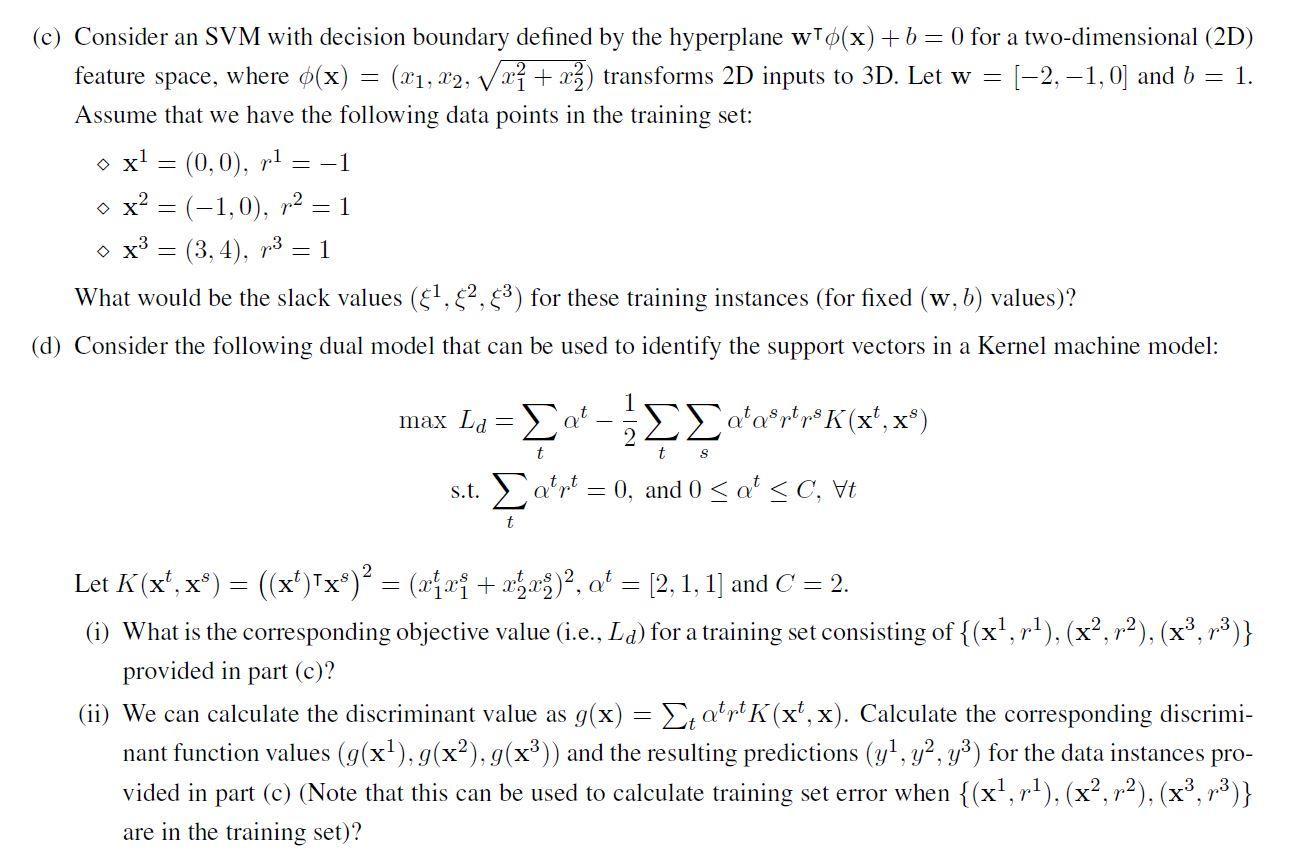

c) Consider an SVM with decision boundary defined by the hyperplane $w^T \phi(x) + b = 0$ for a two-dimensional (2D) feature space, where $\phi(x) = (x_1, x_2, x_1^2 + x_2^2)$ transforms 2D inputs to 3D. Let $w = [2, 1, 0]$ and $b = 1$. Assume that we have the following data points in the training set:

$x^1 = (0, 0),\ r^1 = 1; \quad x^2 = (1, 0),\ r^2 = 1; \quad x^3 = (3, 4),\ r^3 = 1$

What would be the slack values $(\xi^1, \xi^2, \xi^3)$ for these training instances (for fixed $(w, b)$ values)?

d) Consider the following dual model that can be used to identify the support vectors in a kernel machine model:

$\max L_d = \sum_t \alpha^t - \frac{1}{2} \sum_t \sum_s \alpha^t \alpha^s r^t r^s K(x^t, x^s) \quad \text{s.t.} \quad \sum_t \alpha^t r^t = 0 \ \text{and} \ 0 \le \alpha^t \le C,\ \forall t$

Let $K(x^t, x^s) = ((x^t)^T x^s)^2 = (x_1^t x_1^s + x_2^t x_2^s)^2$, $\alpha^t = [2, 1, 1]$ and $C = 2$.

(i) What is the corresponding objective value (i.e., $L_d$) for a training set consisting of $\{(x^1, r^1), (x^2, r^2), (x^3, r^3)\}$ provided in part (c)?

(ii) We can calculate the discriminant value as $g(x) = \sum_t \alpha^t r^t K(x^t, x)$. Calculate the corresponding discriminant function values $(g(x^1), g(x^2), g(x^3))$ and the resulting predictions $(y^1, y^2, y^3)$ for the data instances provided in part (c). (Note that this can be used to calculate the training set error when $\{(x^1, r^1), (x^2, r^2), (x^3, r^3)\}$ are in the training set.)
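The quantities asked for in parts (c) and (d) can be checked numerically. The following is a minimal sketch, not a reference solution, and it rests on several assumptions. The slack is taken to be the usual soft-margin hinge value, max(0, 1 - r (w . phi(x) + b)), which the question does not spell out. The labels are used exactly as they appear in the text (all equal to 1) and are passed in as a parameter so other label assignments can be substituted. A discriminant value of exactly 0 is mapped to -1, which is an arbitrary tie-breaking choice. All function and variable names are illustrative only.

```python
def dot(u, v):
    """Plain inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def phi(x):
    """Feature map from the question: (x1, x2) -> (x1, x2, x1^2 + x2^2)."""
    x1, x2 = x
    return (x1, x2, x1 ** 2 + x2 ** 2)


def slack(x, r, w, b):
    """Hinge slack for one instance (assumed definition, see lead-in)."""
    return max(0, 1 - r * (dot(w, phi(x)) + b))


def kernel(xt, xs):
    """Kernel from part (d): squared inner product of the 2D inputs."""
    return dot(xt, xs) ** 2


def dual_objective(alpha, X, R):
    """L_d = sum_t alpha_t - 1/2 sum_t sum_s alpha_t alpha_s r_t r_s K(x_t, x_s)."""
    n = len(X)
    quad = sum(
        alpha[t] * alpha[s] * R[t] * R[s] * kernel(X[t], X[s])
        for t in range(n)
        for s in range(n)
    )
    return sum(alpha) - 0.5 * quad


def discriminant(x, alpha, X, R):
    """g(x) = sum_t alpha_t r_t K(x_t, x)."""
    return sum(a * r * kernel(xt, x) for a, r, xt in zip(alpha, R, X))


# Values as they appear in the question text (labels are an assumption).
X = [(0, 0), (1, 0), (3, 4)]
R = [1, 1, 1]
W = (2, 1, 0)
B = 1
ALPHA = [2, 1, 1]

slacks = [slack(x, r, W, B) for x, r in zip(X, R)]
L_d = dual_objective(ALPHA, X, R)
g_values = [discriminant(x, ALPHA, X, R) for x in X]
# Tie-breaking assumption: g == 0 is predicted as -1.
predictions = [1 if g > 0 else -1 for g in g_values]

print("slacks:", slacks)
print("L_d:", L_d)
print("g values:", g_values)
print("predictions:", predictions)
```

Because the labels and the tie-breaking rule are inputs to the sketch rather than facts confirmed by the question, changing `R` or the comparison in `predictions` is the intended way to explore other readings of the problem. With the labels as written, the sum of `ALPHA[t] * R[t]` is 4 rather than 0, so the sketch evaluates the formulas without checking the equality constraint of the dual.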
