Question: can you help me with problem 1? Problem 1: (30 points) Consider the algorithm for building a CART model in the case of regression. Following

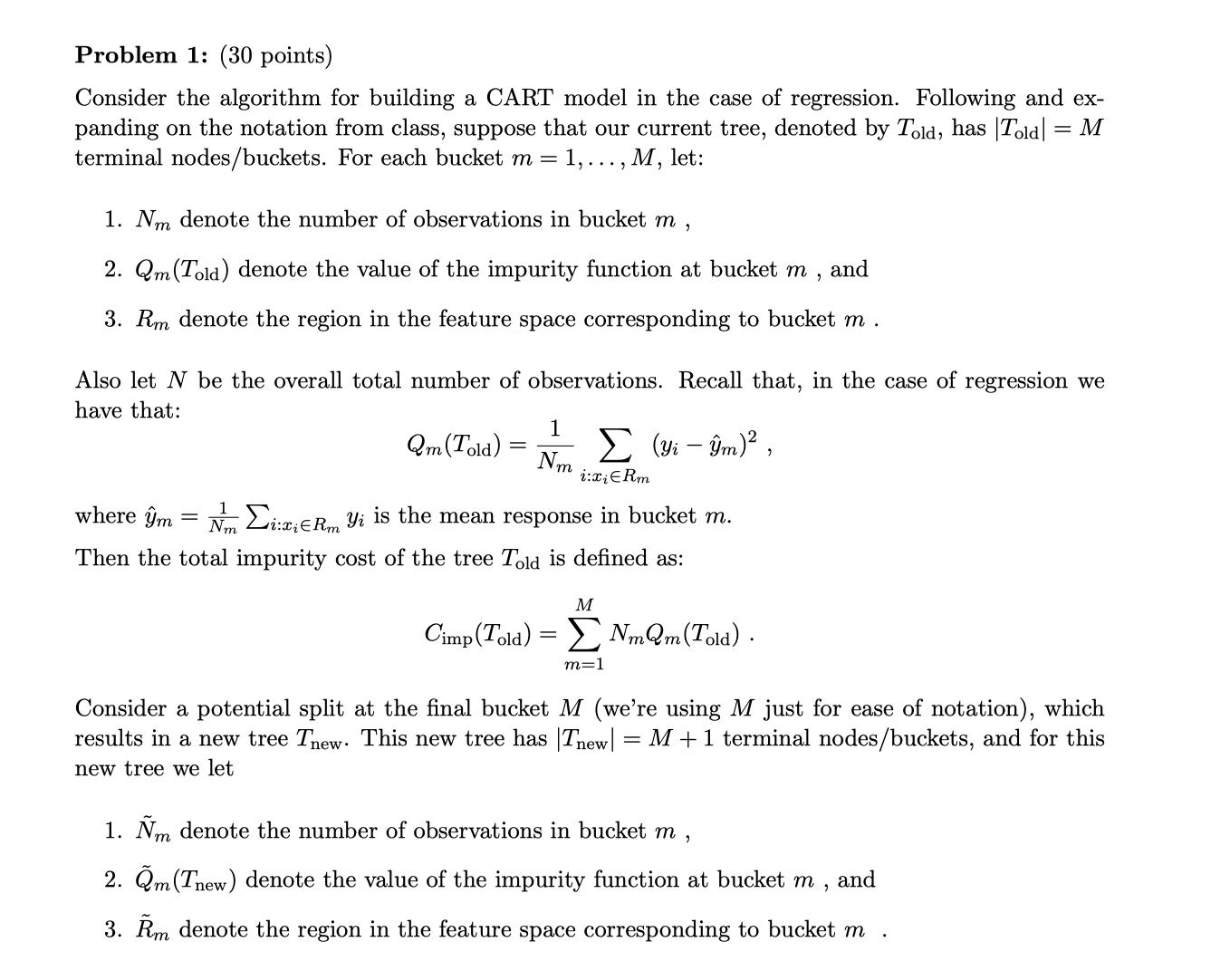

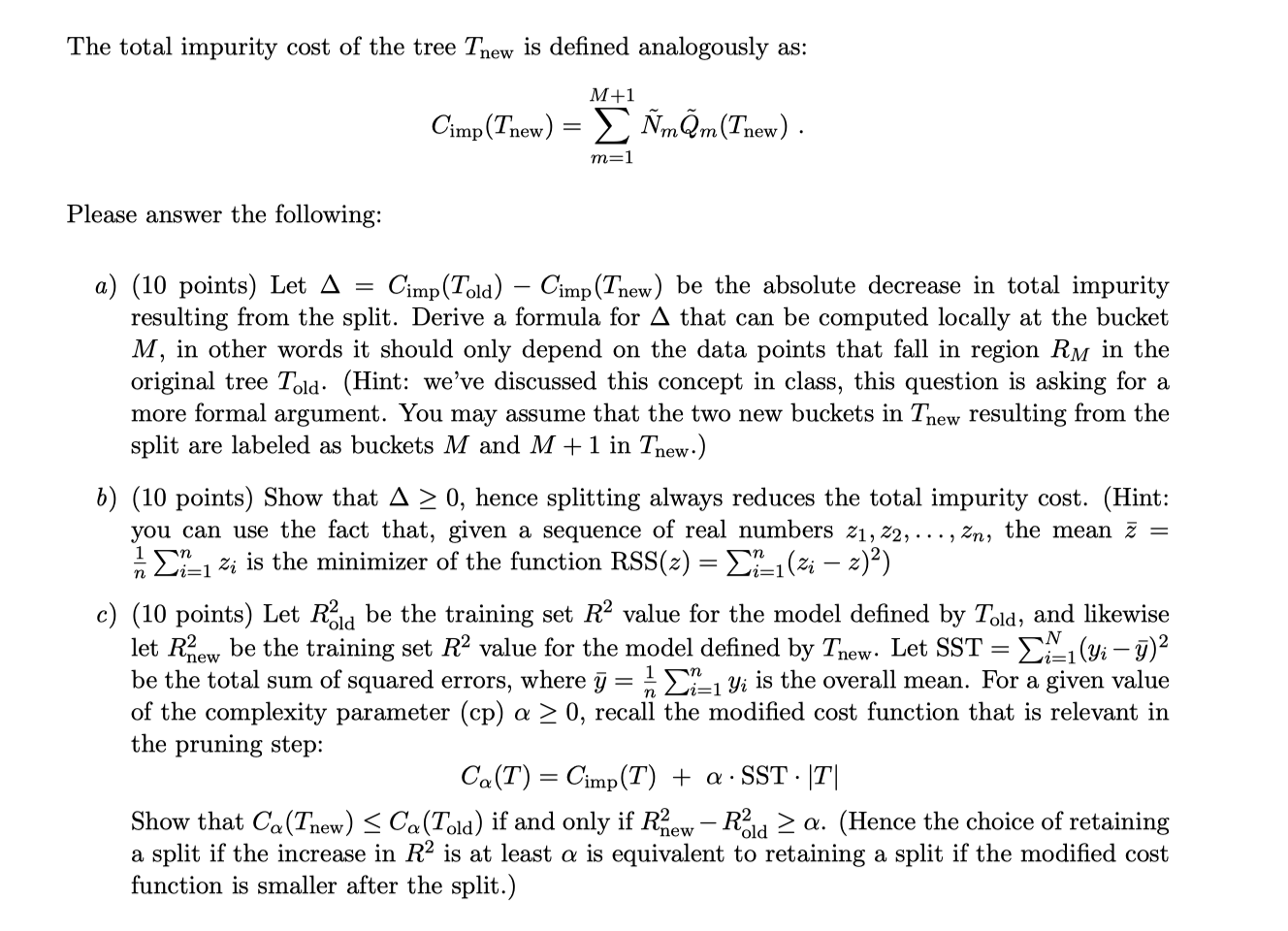

Problem 1: (30 points) Consider the algorithm for building a CART model in the case of regression. Following and expanding on the notation from class, suppose that our current tree, denoted by $T_{\text{old}}$, has $|T_{\text{old}}| = M$ terminal nodes/buckets. For each bucket $m = 1, \ldots, M$, let:

1. $N_m$ denote the number of observations in bucket $m$,
2. $Q_m(T_{\text{old}})$ denote the value of the impurity function at bucket $m$, and
3. $R_m$ denote the region in the feature space corresponding to bucket $m$.

Also let $N$ be the overall total number of observations. Recall that, in the case of regression, we have

$$Q_m(T_{\text{old}}) = \frac{1}{N_m} \sum_{i : x_i \in R_m} (y_i - \bar{y}_m)^2,$$

where $\bar{y}_m = \frac{1}{N_m} \sum_{i : x_i \in R_m} y_i$ is the mean response in bucket $m$. Then the total impurity cost of the tree $T_{\text{old}}$ is defined as:

$$C_{\text{imp}}(T_{\text{old}}) = \sum_{m=1}^{M} N_m Q_m(T_{\text{old}}).$$

Consider a potential split at the final bucket $M$ (we're using $M$ just for ease of notation), which results in a new tree $T_{\text{new}}$. This new tree has $|T_{\text{new}}| = M + 1$ terminal nodes/buckets, and for this new tree we let:

1. $N_m$ denote the number of observations in bucket $m$,
2. $Q_m(T_{\text{new}})$ denote the value of the impurity function at bucket $m$, and
3. $R_m$ denote the region in the feature space corresponding to bucket $m$.

The total impurity cost of the tree $T_{\text{new}}$ is defined analogously as:

$$C_{\text{imp}}(T_{\text{new}}) = \sum_{m=1}^{M+1} N_m Q_m(T_{\text{new}}).$$

Please answer the following:

a) (10 points) Let $\Delta = C_{\text{imp}}(T_{\text{old}}) - C_{\text{imp}}(T_{\text{new}})$ be the absolute decrease in total impurity resulting from the split. Derive a formula for $\Delta$ that can be computed locally at the bucket $M$; in other words, it should only depend on the data points that fall in region $R_M$ in the original tree $T_{\text{old}}$. (Hint: we've discussed this concept in class; this question is asking for a more formal argument. You may assume that the two new buckets in $T_{\text{new}}$ resulting from the split are labeled as buckets $M$ and $M + 1$ in $T_{\text{new}}$.)

b) (10 points) Show that $\Delta \geq 0$, hence splitting always reduces the total impurity cost. (Hint: you can use the fact that, given a sequence of real numbers $z_1, z_2, \ldots, z_n$, the mean $\bar{z} = \frac{1}{n} \sum_{i=1}^{n} z_i$ is the minimizer of the function $\text{RSS}(z) = \sum_{i=1}^{n} (z_i - z)^2$.)

c) (10 points) Let $R^2_{\text{old}}$ be the training set $R^2$ value for the model defined by $T_{\text{old}}$, and likewise let $R^2_{\text{new}}$ be the training set $R^2$ value for the model defined by $T_{\text{new}}$. Let $\text{SST} = \sum_{i=1}^{N} (y_i - \bar{y})^2$ be the total sum of squared errors, where $\bar{y} = \frac{1}{N} \sum_{i=1}^{N} y_i$ is the overall mean. For a given value of the complexity parameter (cp) $\alpha \geq 0$, recall the modified cost function that is relevant in the pruning step:

$$C_\alpha(T) = C_{\text{imp}}(T) + \alpha \cdot \text{SST} \cdot |T|.$$

Show that $C_\alpha(T_{\text{new}})$
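As a numerical sanity check on parts a) and b), the following sketch may help. It assumes the regression impurity of a bucket is its mean squared deviation from the bucket mean, so that the bucket's contribution to the total impurity cost (number of observations times impurity) is its residual sum of squares. Under that assumption, the buckets untouched by the split cancel in the difference of the two total costs, leaving only the RSS of the parent bucket minus the RSS of its two children. The data, the split point, and the helper names (`rss`, `total_impurity`, `local_decrease`) are hypothetical and chosen only for illustration; this is a check, not a proof.

```python
import random


def rss(ys):
    """Residual sum of squares about the mean (assumed to equal N_m * Q_m)."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def total_impurity(buckets):
    """Total impurity cost of a tree: sum of per-bucket RSS values."""
    return sum(rss(b) for b in buckets)


def local_decrease(parent, left, right):
    """Decrease in impurity computed only from the points in the split bucket."""
    return rss(parent) - rss(left) - rss(right)


random.seed(0)

# Hypothetical responses: three buckets left untouched, plus the bucket M to split.
other_buckets = [[random.gauss(mu, 1.0) for _ in range(20)] for mu in (0.0, 3.0, -2.0)]
bucket_M = [random.gauss(1.0, 2.0) for _ in range(30)]

# An arbitrary split of bucket M into two non-empty children.
left, right = bucket_M[:12], bucket_M[12:]

# Global computation: difference of the two total impurity costs.
c_old = total_impurity(other_buckets + [bucket_M])
c_new = total_impurity(other_buckets + [left, right])
delta_global = c_old - c_new

# Local computation: uses only the points that fell in bucket M.
delta_local = local_decrease(bucket_M, left, right)

# Decrease for every possible split position of bucket M (in its current order).
all_deltas = [
    local_decrease(bucket_M, bucket_M[:k], bucket_M[k:])
    for k in range(1, len(bucket_M))
]

print("global decrease:", delta_global)
print("local decrease: ", delta_local)
print("smallest decrease over all split positions:", min(all_deltas))
```

The global and local values should agree up to floating-point rounding, and no split position should produce a negative decrease. This matches the hint in part b): each child's mean minimizes that child's RSS, so replacing the parent mean by the child means cannot increase the sum of squares.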
