Question: CHAPTER 9 Serial Correlation

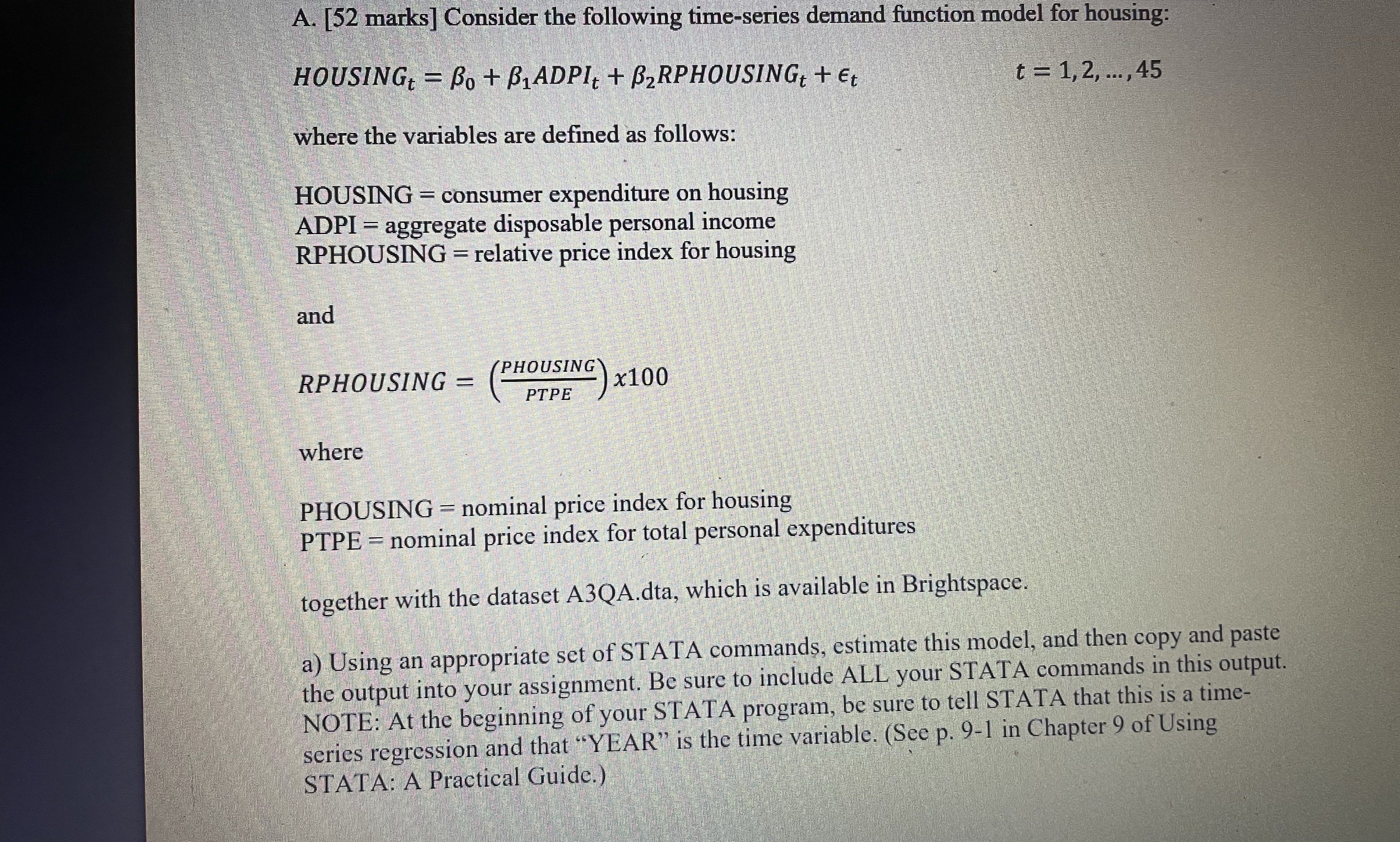

9.1 Time Series

Virtually every equation in the text so far has been cross-sectional in nature, but that's going to change dramatically in this chapter. As a result, it's probably worthwhile to talk about some of the characteristics of time-series equations.

Time-series data involve a single entity (like a person, corporation, or state) over multiple points in time. Such a time-series approach allows researchers to investigate analytical issues that can't be examined very easily with a cross-sectional regression. For example, macroeconomic models and supply-and-demand models are best studied using time-series, not cross-sectional, data.

The notation for a time-series study is different from that for a cross-sectional one. Our familiar cross-sectional notation (for one time period and $N$ different entities) is:

$$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \beta_3 X_{3i} + \epsilon_i$$

where $i$ goes from 1 to $N$. A time-series regression has one entity and $T$ different time periods, however, so we'll switch to this notation:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \beta_2 X_{2t} + \beta_3 X_{3t} + \epsilon_t$$

where $t$ goes from 1 to $T$. Thus:

$$\begin{aligned}
Y_1 &= \beta_0 + \beta_1 X_{11} + \beta_2 X_{21} + \beta_3 X_{31} + \epsilon_1 && \text{(observations from the first time period)}\\
Y_2 &= \beta_0 + \beta_1 X_{12} + \beta_2 X_{22} + \beta_3 X_{32} + \epsilon_2 && \text{(observations from the second time period)}\\
&\;\;\vdots\\
Y_T &= \beta_0 + \beta_1 X_{1T} + \beta_2 X_{2T} + \beta_3 X_{3T} + \epsilon_T && \text{(observations from time period } T\text{)}
\end{aligned}$$

What's so tough about that, you say? All we've done is change from $i$ to $t$ and change from $N$ to $T$. Well, it turns out that time-series studies have some characteristics that make them more difficult to deal with than cross-sections:

1. The order of observations in a time series is fixed. With a cross-sectional data set, you can enter the observations in any order you want, but with time-series data, you must keep the observations in chronological order.
2. Time-series samples tend to be much smaller than cross-sectional ones. Most time-series populations have many fewer potential observations than do cross-sectional ones, and these smaller data sets make statistical inference more difficult. In addition, it's much harder to generate a time-series observation than a cross-sectional one.
After all, it takes a year to get one more observation in an annual time series!
3. The theory underlying time-series analysis can be quite complex. In part because of the problems mentioned above, time-series econometrics includes a number of complex topics that require advanced estimation techniques. We'll tackle these topics in two later chapters.
4. The stochastic error term in a time-series equation is often affected by events that took place in a previous time period. This is serial correlation, the topic of our chapter, so let's get started!

9.2 Pure versus Impure Serial Correlation

Pure Serial Correlation

Pure serial correlation occurs when Classical Assumption IV, which assumes uncorrelated observations of the error term, is violated in a correctly specified equation. If there is correlation between observations of the error term, then the error term is said to be serially correlated. When econometricians use the term serial correlation without any modifier, they are referring to pure serial correlation.

The most commonly assumed kind of serial correlation is first-order serial correlation, in which the current value of the error term is a function of the previous value of the error term:

$$\epsilon_t = \rho\,\epsilon_{t-1} + u_t$$

where $\epsilon$ is the error term of the equation in question, $\rho$ is the first-order autocorrelation coefficient, and $u$ is a classical (not serially correlated) error term.

The functional form in this equation is called a first-order Markov scheme. The new symbol, $\rho$ (rho, pronounced "row"), called the first-order autocorrelation coefficient, measures the functional relationship between the value of an observation of the error term and the value of the previous observation of the error term.

The magnitude of $\rho$ indicates the strength of the serial correlation in an equation.
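To make the first-order Markov scheme concrete, here is a minimal Python sketch (assuming NumPy is available) that generates errors as $\epsilon_t = \rho\,\epsilon_{t-1} + u_t$ and then recovers $\rho$ by regressing $\epsilon_t$ on $\epsilon_{t-1}$. The function names, the value $\rho = 0.7$, the sample size, and starting the process at $\epsilon_1 = u_1$ are illustrative assumptions, not part of the text. Re-estimating $\rho$ after shuffling the same errors also shows why time-series observations must stay in chronological order: the lagged relationship only exists relative to that order.

```python
import numpy as np


def simulate_ar1_errors(rho, T, sigma_u=1.0, seed=0):
    """Generate T errors from the first-order Markov scheme e_t = rho * e_{t-1} + u_t."""
    rng = np.random.default_rng(seed)
    u = rng.normal(0.0, sigma_u, size=T)  # classical, serially uncorrelated errors
    e = np.empty(T)
    e[0] = u[0]  # assumption: the process starts at e_1 = u_1
    for t in range(1, T):
        e[t] = rho * e[t - 1] + u[t]
    return e


def estimate_rho(e):
    """No-intercept OLS slope from regressing e_t on e_{t-1}."""
    lagged, current = e[:-1], e[1:]
    return float(np.dot(lagged, current) / np.dot(lagged, lagged))


e = simulate_ar1_errors(rho=0.7, T=5000, seed=42)
print(estimate_rho(e))  # should be close to 0.7

# Same values with the chronological order destroyed: the lag structure disappears.
shuffled = np.random.default_rng(1).permutation(e)
print(estimate_rho(shuffled))  # should be close to 0
```

Because the shuffled series contains exactly the same values, the drop in the estimated $\rho$ comes entirely from losing the time ordering.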
If $\rho$ is zero, then there is no serial correlation (because $\epsilon$ would equal $u$, a classical error term). As $\rho$ approaches 1 in absolute value, the value of the previous observation of the error term becomes more important in determining the current value of $\epsilon_t$, and a high degree of serial correlation exists. For $\rho$ to be greater than 1 in absolute value is unreasonable because it implies that the error term has a tendency to continually increase in absolute value over time ("explode"). As a result of this, we can state that:

$$-1 < \rho < +1$$

The sign of $\rho$ indicates the nature of the serial correlation in an equation. A positive value for $\rho$ implies that the error term tends to have the same sign from one time period to the next; this is called positive serial correlation. Such a tendency means that if $\epsilon_t$ happens by chance to take on a large value in one time period, subsequent observations would tend to retain a portion of this original large value and would have the same sign as the original. For example, in time-series models, the effects of a large external shock to an economy (like an earthquake) in one period may linger for several time periods. The error term will tend to be positive for a number of observations, then negative for several more, and then back positive again.

Figure 9.1 shows two different examples of positive serial correlation. The error term observations plotted in Figure 9.1 are arranged in chronological order, with the first observation being the first period for which data are available, the second being the second, and so on. To see the difference between error terms with and without positive serial correlation, compare the patterns in Figure 9.1 with the depiction of no serial correlation in Figure 9.2.

A negative value of $\rho$ implies that the error term has a tendency to switch signs from negative to positive and back again in consecutive observations; this is called negative serial correlation.
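The sign and magnitude claims above can be checked with a rough simulation. The sketch below (Python with NumPy; the helper names, the $\rho$ values, and the sample sizes are illustrative assumptions) counts how often consecutive errors switch sign. Positive $\rho$ should produce fewer switches than the roughly 50 percent expected with no serial correlation, negative $\rho$ should produce more, and a value of $|\rho|$ greater than 1 makes the error term explode.

```python
import numpy as np


def ar1_path(rho, T, seed=0):
    """Errors from e_t = rho * e_{t-1} + u_t with standard normal u_t (illustrative)."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=T)
    e = np.empty(T)
    e[0] = u[0]
    for t in range(1, T):
        e[t] = rho * e[t - 1] + u[t]
    return e


def share_of_sign_switches(e):
    """Fraction of consecutive pairs (e_{t-1}, e_t) whose signs differ."""
    signs = np.sign(e)
    return float(np.mean(signs[1:] != signs[:-1]))


for rho in (0.8, 0.0, -0.8):
    share = share_of_sign_switches(ar1_path(rho, 10_000))
    print(f"rho = {rho:+.1f}: sign switches in {share:.0%} of consecutive pairs")

# |rho| > 1: the error term tends to grow without bound in absolute value.
exploding = ar1_path(1.1, 200)
print(f"largest |e_t| with rho = 1.1: {np.abs(exploding).max():.3g}")
```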
Negative serial correlation implies that there is some sort of cycle (like a pendulum) behind the drawing of stochastic disturbances. Figure 9.3 shows two different examples of negative serial correlation. For instance, negative serial correlation might exist in the error term of an equation that is in first differences because changes in a variable often follow a cyclical pattern. In most time-series applications, however, negative pure serial correlation is much less likely than positive pure serial correlation. As a result, most econometricians analyzing pure serial correlation concern themselves primarily with positive serial correlation.

Serial correlation can take on many forms other than first-order serial correlation. For example, in a quarterly model, the current quarter's error term observation may be functionally related to the observation of the error term from the same quarter in the previous year. This is called seasonally based serial correlation:

$$\epsilon_t = \rho\,\epsilon_{t-4} + u_t$$

Similarly, it is possible that the error term in an equation might be a function of more than one previous observation of the error term:

$$\epsilon_t = \rho_1\,\epsilon_{t-1} + \rho_2\,\epsilon_{t-2} + u_t$$

Such a formulation is called second-order serial correlation.

[Figure 9.1 Positive Serial Correlation (two panels, $\epsilon$ plotted against time)] With positive first-order serial correlation, the current observation of the error term tends to have the same sign as the previous observation of the error term. An example of positive serial correlation would be external shocks to an economy that take more than one time period to completely work through the system.

[Figure 9.2 No Serial Correlation ($\epsilon$ plotted against time)] With no serial correlation, different observations of the error term are completely uncorrelated with each other. Such error terms would conform to Classical Assumption IV.

Impure Serial Correlation

By impure serial correlation we mean serial correlation that is caused by a specification error such as an omitted variable or an incorrect functional form.
While pure serial correlation is caused by the underlying distribution of the error term of the true specification of an equation (which cannot be changed by the researcher), impure serial correlation is caused by a specification error that often can be corrected.

[Figure 9.3 Negative Serial Correlation (two panels, $\epsilon$ plotted against time)] With negative first-order serial correlation, the current observation of the error term tends to have the opposite sign from the previous observation of the error term. In most time-series applications, negative serial correlation is much less likely than positive serial correlation.

How is it possible for a specification error to cause serial correlation? Recall that the error term can be thought of as the effect of omitted variables, nonlinearities, measurement errors, and pure stochastic disturbances on the dependent variable. This means, for example, that if we omit a relevant variable or use the wrong functional form, then the portion of that omitted effect that cannot be represented by the included explanatory variables must be absorbed by the error term. The error term for an incorrectly specified equation thus includes a portion of the effect of any omitted variables and/or a portion of the effect of the difference between the proper functional form and the one chosen by the researcher. This new error term might be serially correlated even if the true one is not. If this is the case, the serial correlation has been caused by the researcher's choice of a specification and not by the pure error term associated with the correct specification.

As you'll see later in this chapter, the proper remedy for serial correlation depends on whether the serial correlation is likely to be pure or impure. Not surprisingly, the best remedy for impure serial correlation is to attempt to find the omitted variable (or at least a good proxy) or the correct functional form for the equation.
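The claim that a misspecified equation's error term can be serially correlated even when the true error term is classical can be illustrated with a small simulation. The following Python sketch (assuming NumPy; every coefficient, sample size, and variable name is hypothetical) fits OLS with and without a serially correlated regressor $X_2$, and fits a linear versus a quadratic form to a curved relationship, then reports the first-order autocorrelation of the residuals in each case. The true error term is independent across observations throughout, so any strong residual autocorrelation is impure. $X_1$ is drawn independently of $X_2$, so omitting $X_2$ does not bias $\hat{\beta}_1$ in this particular sketch.

```python
import numpy as np


def ols_residuals(y, *regressors):
    """OLS of y on an intercept plus the given regressors; returns the residuals."""
    X = np.column_stack([np.ones_like(y), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ coef


def first_order_autocorr(r):
    """Sample correlation between consecutive residuals r_{t-1} and r_t."""
    return float(np.corrcoef(r[:-1], r[1:])[0, 1])


rng = np.random.default_rng(0)
T = 2000
eps = rng.normal(scale=0.5, size=T)  # classical error term: no serial correlation

# Hypothetical serially correlated regressor X2: X2_t = 0.9 * X2_{t-1} + v_t
x2 = np.zeros(T)
for t in range(1, T):
    x2[t] = 0.9 * x2[t - 1] + rng.normal()
x1 = rng.normal(size=T)  # independent of X2 in this sketch

# Case 1, omitted variable. True model: Y = 1 + 2*X1 + 3*X2 + eps
y = 1 + 2 * x1 + 3 * x2 + eps
print("correct spec:", first_order_autocorr(ols_residuals(y, x1, x2)))
print("X2 omitted:  ", first_order_autocorr(ols_residuals(y, x1)))

# Case 2, wrong functional form. True model: Y = 1 + 0.5*X + 0.8*X^2 + eps
x = np.linspace(0, 10, T)  # observations ordered by X, as in a trending time series
y_quad = 1 + 0.5 * x + 0.8 * x**2 + eps
print("quadratic fit:", first_order_autocorr(ols_residuals(y_quad, x, x**2)))
print("linear fit:   ", first_order_autocorr(ols_residuals(y_quad, x)))
```

With the correct specification the residual autocorrelation should sit near zero, while omitting $X_2$ or forcing a straight line through the curve should leave strongly positive autocorrelation.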
Both the bias and the impure serial correlation will disappear if the specification error is corrected. As a result, most econometricians try to make sure they have the best specification possible before they spend too much time worrying about pure serial correlation.

To see how an omitted variable can cause the error term to be serially correlated, suppose that the true equation is:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \beta_2 X_{2t} + \epsilon_t$$

where $\epsilon_t$ is a classical error term. As shown in the earlier discussion of omitted variables, if $X_2$ is accidentally omitted from the equation (or if data for $X_2$ are unavailable), then:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \epsilon^*_t \qquad \text{where } \epsilon^*_t = \beta_2 X_{2t} + \epsilon_t$$

Thus the error term in the omitted variable case is not the classical error term $\epsilon$. Instead, it's also a function of one of the independent variables, $X_2$. As a result, the new error term, $\epsilon^*$, can be serially correlated even if the true error term, $\epsilon$, is not. In particular, the new error term $\epsilon^*$ will tend to exhibit detectable serial correlation when:

1. $X_2$ itself is serially correlated (this is quite likely in a time series), and
2. the size of $\epsilon$ is small compared to the size of $\beta_2 X_2$.

These tendencies hold even if there are a number of included and/or omitted variables. Therefore:

$$\epsilon^*_t = \rho\,\epsilon^*_{t-1} + u_t$$

(Note: If typical values of $\epsilon$ are significantly larger in absolute size than $\beta_2 X_2$, then even a serially correlated omitted variable ($X_2$) will not change $\epsilon^*$ very much. In addition, recall that the omitted variable, $X_2$, will cause bias in the estimate of $\beta_1$, depending on the correlation between the two $X$s. If $\hat{\beta}_1$ is biased because of the omission of $X_2$, then a portion of the $\beta_2 X_2$ effect must have been absorbed by $\hat{\beta}_1$ and will not end up in the residuals. As a result, tests for serial correlation based on those residuals may give incorrect readings. Such residuals may leave misleading clues as to possible specification errors.)

Another common kind of impure serial correlation is caused by an incorrect functional form. Here, the choice of the wrong functional form can cause the error term to be serially correlated. Let's suppose that the true equation is polynomial in nature:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \beta_2 X_{1t}^2 + \epsilon_t$$

but that instead a linear regression is run:

$$Y_t = \alpha_0 + \alpha_1 X_{1t} + \epsilon^*_t$$

The new error term $\epsilon^*$ is now a function of the true error term $\epsilon$ and of the differences between the linear and the polynomial functional forms. As can be seen in Figure 9.4, these differences often follow fairly autoregressive patterns. That is, positive differences tend to be followed by positive differences, and negative differences tend to be followed by negative differences. As a result, using a linear functional form when a nonlinear one is appropriate will usually result in positive impure serial correlation.

[Figure 9.4 Incorrect Functional Form as a Source of Impure Serial Correlation ($Y$ plotted against $X$)] The use of an incorrect functional form tends to group positive and negative residuals together, causing positive impure serial correlation.

9.3 The Consequences of Serial Correlation

The consequences of serial correlation are quite different in nature from the consequences of the problems discussed so far in this text. Omitted variables, irrelevant variables, and multicollinearity all have fairly recognizable external symptoms. Each problem changes the estimated coefficients and standard errors in a particular way, and an examination of these changes and the underlying theory often provides enough information for the problem to be detected.
As we shall see, serial correlation is more likely to have internal symptoms; it affects the estimated equation in a way that is not easily observable from an examination of just the results themselves.

The existence of serial correlation in the error term of an equation violates Classical Assumption IV, and the estimation of the equation with OLS has at least three consequences:

1. Pure serial correlation does not cause bias in the coefficient estimates.
2. Serial correlation causes OLS to no longer be the minimum variance estimator (of all the linear unbiased estimators).
3. Serial correlation causes the OLS estimates of the $SE(\hat{\beta})$s to be biased, leading to unreliable hypothesis testing.

1. Pure serial correlation does not cause bias in the coefficient estimates. If the error term is serially correlated, one of the assumptions of the Gauss-Markov Theorem is violated, but this violation does not cause the coefficient estimates to be biased. If the serial correlation is impure, however, bias may be introduced by the use of an incorrect specification.

This lack of bias does not necessarily mean that the OLS estimates of the coefficients of a serially correlated equation will be close to the true coefficient values. A single estimate observed in practice can come from a wide range of possible values. In addition, the standard errors of these estimates will typically be increased by the serial correlation. This increase will raise the probability that a $\hat{\beta}$ will differ significantly from the true $\beta$ value. What unbiased means in this case is that the distribution of the $\hat{\beta}$s is still centered around the true $\beta$.

2. Serial correlation causes OLS to no longer be the minimum variance estimator (of all the linear unbiased estimators). Although the violation of Classical Assumption IV causes no bias, it does affect the other main conclusion of the Gauss-Markov Theorem, that of minimum variance. In particular,
