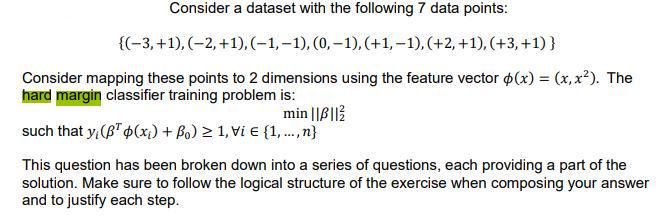


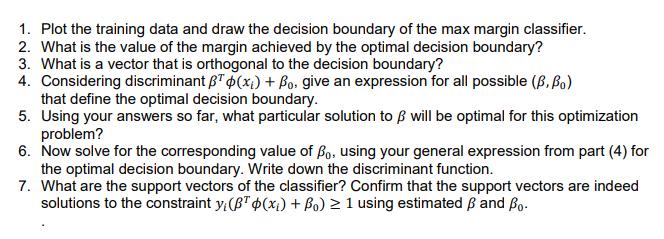

Consider a dataset with the following 7 data points $(x_i, y_i)$: $\{(-3, +1), (-2, +1), (-1, -1), (0, -1), (+1, -1), (+2, +1), (+3, +1)\}$. Consider mapping these points to 2 dimensions using the feature vector $\phi(x) = (x, x^2)$. The hard margin classifier training problem is:

$$\min \|\beta\| \quad \text{such that} \quad y_i\left(\beta^T \phi(x_i) + \beta_0\right) \ge 1, \quad \forall i \in \{1, \dots, n\}$$

This question has been broken down into a series of questions, each providing a part of the solution. Make sure to follow the logical structure of the exercise when composing your answer and to justify each step.

1. Plot the training data and draw the decision boundary of the max margin classifier.
2. What is the value of the margin achieved by the optimal decision boundary?
3. What is a vector that is orthogonal to the decision boundary?
4. Considering the discriminant $\beta^T \phi(x) + \beta_0$, give an expression for all possible $(\beta, \beta_0)$ that define the optimal decision boundary.
5. Using your answers so far, what particular solution for $\beta$ will be optimal for this optimization problem?
6. Now solve for the corresponding value of $\beta_0$, using your general expression from part (4) for the optimal decision boundary. Write down the discriminant function.
7. What are the support vectors of the classifier? Confirm that the support vectors are indeed solutions to the constraint $y_i\left(\beta^T \phi(x_i) + \beta_0\right) \ge 1$ using the estimated $\beta$ and $\beta_0$.

Step by Step Solution


I see that you have a machine learning problem focused on finding a hard margin classifier for the provided dataset using a Support Vector Machine (SVM). Let's go through the series of questions step by step ...
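As a rough numerical check on the exercise, the sketch below maps the data into feature space and tests one candidate boundary against the hard margin constraints. It rests on assumptions that are not confirmed by the question text: the first two inputs are taken to be -3 and -2, the feature map is taken to be (x, x^2), and the candidate values `beta = (0, 2/3)` and `beta0 = -5/3` were found by inspection (the horizontal line x^2 = 5/2 in feature space). It confirms feasibility and shows which constraints are tight; it is not an optimality proof.

```python
from fractions import Fraction

# ASSUMPTION: inputs -3 and -2 and the square in phi(x) = (x, x^2) are
# reconstructions; the question text as extracted does not show them.
data = [(-3, 1), (-2, 1), (-1, -1), (0, -1), (1, -1), (2, 1), (3, 1)]


def phi(x):
    """Map a scalar input to the 2-D feature vector (x, x^2)."""
    return (Fraction(x), Fraction(x * x))


# Hypothetical candidate found by inspection, not taken from the source:
# the boundary is the horizontal line x^2 = 5/2 in feature space.
beta = (Fraction(0), Fraction(2, 3))
beta0 = Fraction(-5, 3)


def functional_margin(x, y):
    """Return y * (beta^T phi(x) + beta0) for one labelled point."""
    f1, f2 = phi(x)
    return y * (beta[0] * f1 + beta[1] * f2 + beta0)


margins = {x: functional_margin(x, y) for x, y in data}

# Every point must satisfy the hard margin constraint (value >= 1).
feasible = all(m >= 1 for m in margins.values())

# Points whose constraint holds with equality are the support vectors.
support_vectors = sorted(x for x, m in margins.items() if m == 1)

# Since beta[0] is 0 here, ||beta|| equals |beta[1]| and stays exact.
geometric_margin = 1 / abs(beta[1])

print("feasible:", feasible)
print("support vector inputs:", support_vectors)
print("geometric margin:", geometric_margin)
```

Exact fractions are used instead of floats so that the equality test for tight constraints is reliable. Under these assumptions the tight constraints sit at the inputs nearest the class boundary on each side, which is what part 7 asks you to confirm by hand.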
