Question: Consider a multi-armed bandit problem with 2 arms with Bernoulli rewards with unknown parameters $\mu_1, \mu_2$. We would like to implement the UCB algorithm…

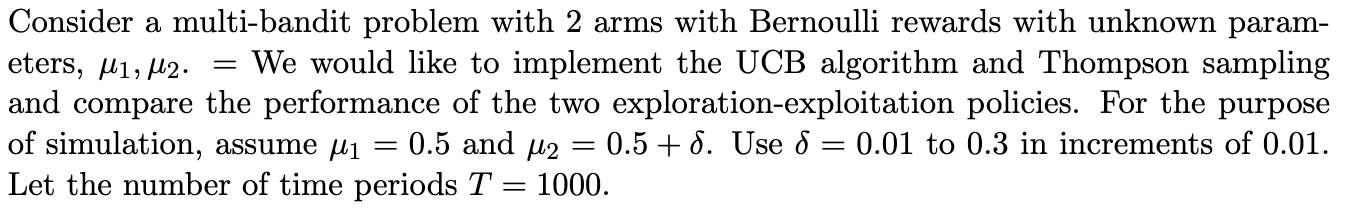

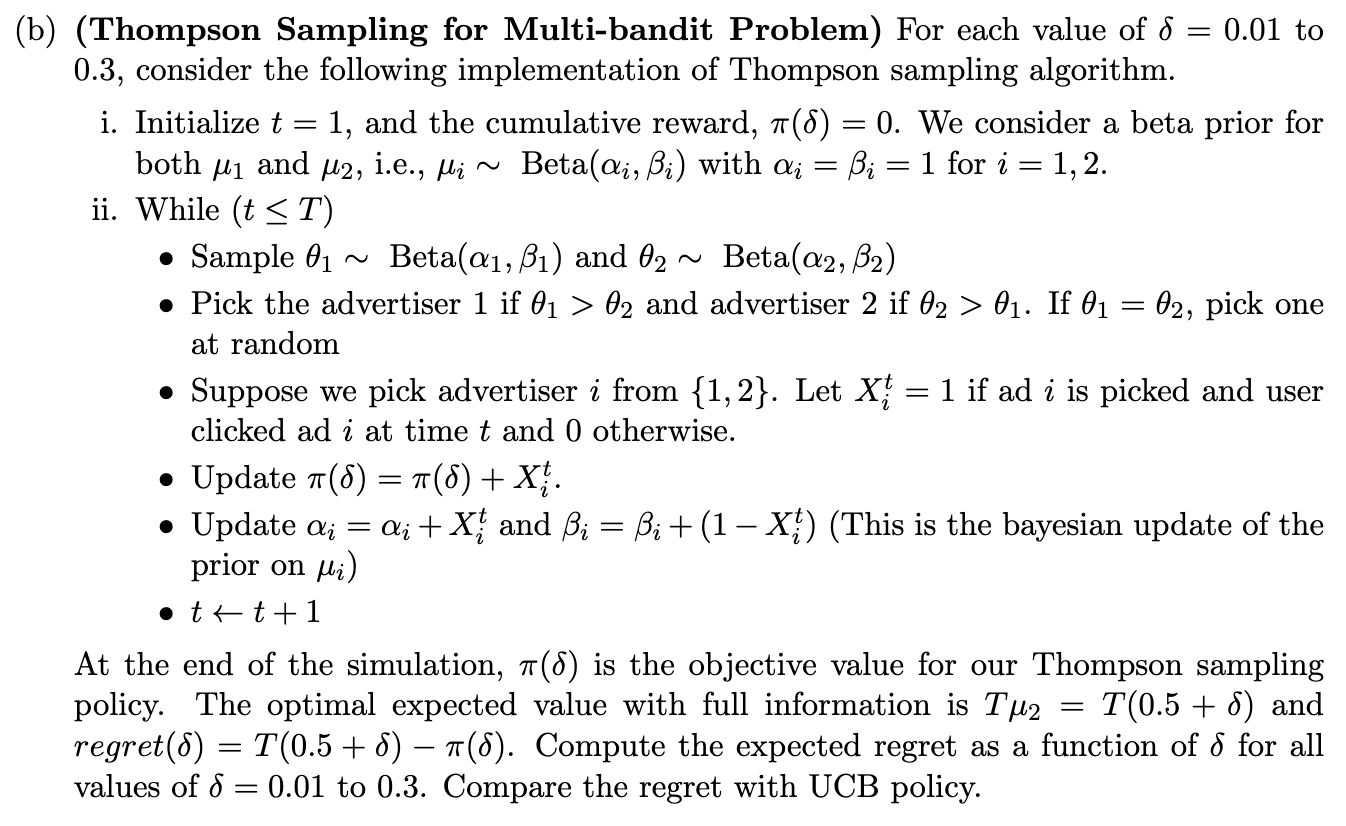

Consider a multi-armed bandit problem with 2 arms with Bernoulli rewards with unknown parameters $\mu_1, \mu_2$. We would like to implement the UCB algorithm and Thompson sampling and compare the performance of the two exploration-exploitation policies. For the purpose of simulation, assume $\mu_1 = 0.5$ and $\mu_2 = 0.5 + \delta$. Use $\delta = 0.01$ to $0.3$ in increments of $0.01$. Let the number of time periods be $T = 1000$.

(b) (Thompson Sampling for Multibandit Problem) For each value of $\delta = 0.01, \dots, 0.3$, consider the following implementation of the Thompson sampling algorithm.

i. Initialize $t = 1$ and the cumulative reward $\pi(\delta) = 0$. We consider a beta prior for both $\mu_1$ and $\mu_2$, i.e., $\mu_i \sim \mathrm{Beta}(\alpha_i, \beta_i)$ with $\alpha_i = \beta_i = 1$ for $i = 1, 2$.

ii. While ($t \le T$):

- Sample $\theta_1 \sim \mathrm{Beta}(\alpha_1, \beta_1)$ and $\theta_2 \sim \mathrm{Beta}(\alpha_2, \beta_2)$.
- Pick advertiser 1 if $\theta_1 > \theta_2$ and advertiser 2 if $\theta_2 > \theta_1$. If $\theta_1 = \theta_2$, pick one at random.
- Suppose we pick advertiser $i \in \{1, 2\}$. Let $X_i = 1$ if ad $i$ is picked and the user clicked ad $i$ at time $t$, and $0$ otherwise. Update $\pi($…
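A minimal Python sketch of the Thompson sampling loop in part (b), swept over $\delta = 0.01, \dots, 0.30$. The question text is cut off at the update step, so the update used here is an assumption: the standard conjugate Beta-Bernoulli update, i.e. $\pi(\delta) \leftarrow \pi(\delta) + X_i$, $\alpha_i \leftarrow \alpha_i + X_i$, $\beta_i \leftarrow \beta_i + 1 - X_i$, then $t \leftarrow t + 1$. The function names `thompson_sampling` and `sweep_deltas` are hypothetical, and arms are 0-indexed in code (index 0 is advertiser 1).

```python
import random


def thompson_sampling(mu, T=1000, rng=None):
    """Simulate Beta-Bernoulli Thompson sampling on two arms.

    mu: true click probabilities (mu_1, mu_2), hidden from the policy.
    Returns the cumulative reward pi(delta): total clicks over T periods.
    """
    rng = rng or random.Random()
    # Step i: uniform Beta(1, 1) prior on each arm's click probability.
    alpha = [1, 1]
    beta = [1, 1]
    cumulative_reward = 0
    for t in range(1, T + 1):
        # Step ii: draw one posterior sample theta_k per arm.
        theta = [rng.betavariate(alpha[k], beta[k]) for k in range(2)]
        if theta[0] > theta[1]:
            i = 0
        elif theta[1] > theta[0]:
            i = 1
        else:
            i = rng.randrange(2)  # tie: pick an arm uniformly at random
        # Observe the Bernoulli click X_i for the chosen ad.
        x = 1 if rng.random() < mu[i] else 0
        # ASSUMED update (the source is truncated here): accumulate the
        # reward and apply the conjugate Beta-Bernoulli posterior update.
        cumulative_reward += x
        alpha[i] += x
        beta[i] += 1 - x
    return cumulative_reward


def sweep_deltas(T=1000, seed=0):
    """Run one Thompson sampling simulation for each delta = 0.01, ..., 0.30."""
    rng = random.Random(seed)
    results = {}
    for k in range(1, 31):
        delta = round(0.01 * k, 2)
        results[delta] = thompson_sampling((0.5, 0.5 + delta), T, rng)
    return results


if __name__ == "__main__":
    T = 1000
    for delta, reward in sweep_deltas(T=T).items():
        # Gap to the expected reward of always playing the better arm (mu_2).
        gap = T * (0.5 + delta) - reward
        print(f"delta={delta:.2f}  pi={reward}  gap={gap:.1f}")
```

Each $\delta$ here gets a single run of $T$ periods, so $\pi(\delta)$ is noisy; averaging several seeds per $\delta$ would likely give a smoother curve when comparing against UCB.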
