
Consider a neuron n_i that receives inputs a_1 = 1, a_2 = 2, a_3 = 1 from three neurons in the previous layer. The weights for these inputs are w_1 = 0, w_2 = 1, w_3 = 0, respectively.

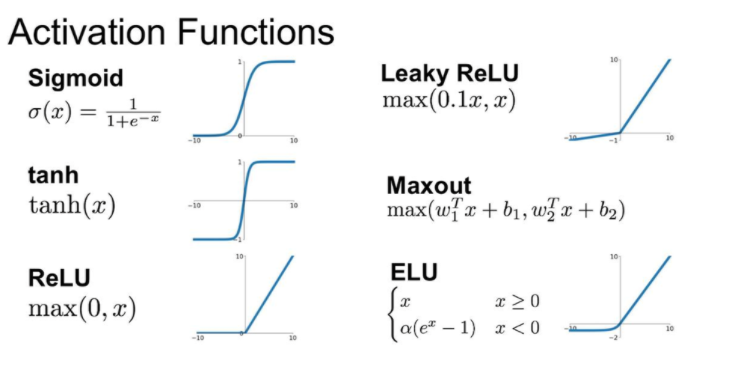

The activation function used by neuron n_i is the ReLU function shown below. The bias at n_i is -2.

What is the output of n_i? (1 point) Please provide a justification for your answer. (1 point)
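As a worked check, assume the standard neuron model, in which the pre-activation is the weighted sum of the inputs plus the bias: $z = w_1 a_1 + w_2 a_2 + w_3 a_3 + b = 0 \cdot 1 + 1 \cdot 2 + 0 \cdot 1 + (-2) = 0$. The output is then $\mathrm{ReLU}(0) = \max(0, 0) = 0$. The minimal Python sketch here reproduces that arithmetic. The helper names `relu` and `neuron_output` are illustrative and do not come from the question.

```python
# Minimal sketch: forward pass of the single neuron n_i from the question.
# Assumes the standard model z = sum_j w_j * a_j + b, followed by the activation.
# Helper names are hypothetical, chosen for illustration only.

def relu(x: float) -> float:
    """ReLU activation: max(0, x)."""
    return max(0.0, x)

def neuron_output(inputs, weights, bias, activation):
    """Return (pre-activation z, activation(z)) for one neuron."""
    z = sum(a * w for a, w in zip(inputs, weights)) + bias
    return z, activation(z)

inputs = [1, 2, 1]    # a_1, a_2, a_3
weights = [0, 1, 0]   # w_1, w_2, w_3
bias = -2             # bias at n_i

z, output = neuron_output(inputs, weights, bias, relu)
print(f"z = {z}, output = {output}")  # prints: z = 0, output = 0.0
```

Only $w_2$ is nonzero, so the weighted sum is 2. The bias of -2 cancels it exactly, and ReLU maps the resulting 0 to 0.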

Figure: Activation Functions. Sigmoid: $\sigma(x) = \frac{1}{1 + e^{-x}}$; tanh: $\tanh(x)$; ReLU: $\max(0, x)$; Leaky ReLU: $\max(0.1x, x)$; Maxout: $\max(w_1^T x + b_1, w_2^T x + b_2)$; ELU: $x$ if $x \ge 0$, $\alpha(e^x - 1)$ if $x < 0$.
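To see why the choice of activation matters for this neuron, the following sketch implements the single-input functions from the figure and evaluates each at the pre-activation of n_i, $z = 0 \cdot 1 + 1 \cdot 2 + 0 \cdot 1 + (-2) = 0$. Maxout is left out because it takes two separate affine maps rather than a single $z$. The ELU parameter `alpha = 1.0` is an assumed value, since the figure does not give one.

```python
import math

# Sketch of the single-input activation functions listed in the figure.
# Maxout is omitted: it needs two weight vectors and biases, not one z.
# ELU's alpha = 1.0 is an assumption; the figure does not specify it.

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x: float) -> float:
    return math.tanh(x)

def relu(x: float) -> float:
    return max(0.0, x)

def leaky_relu(x: float) -> float:
    # Slope 0.1 for negative inputs, as in the figure's max(0.1x, x).
    return max(0.1 * x, x)

def elu(x: float, alpha: float = 1.0) -> float:
    return x if x >= 0 else alpha * (math.exp(x) - 1.0)

ACTIVATIONS = {
    "sigmoid": sigmoid,
    "tanh": tanh,
    "relu": relu,
    "leaky_relu": leaky_relu,
    "elu": elu,
}

# Pre-activation of n_i: w_1*a_1 + w_2*a_2 + w_3*a_3 + bias
z = float(0 * 1 + 1 * 2 + 0 * 1 + (-2))

for name, f in ACTIVATIONS.items():
    print(f"{name}: {f(z)}")
```

At $z = 0$, ReLU, Leaky ReLU, tanh and ELU all return 0, whereas sigmoid returns 0.5, so the answer of 0 relies on n_i using ReLU.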
