Question:

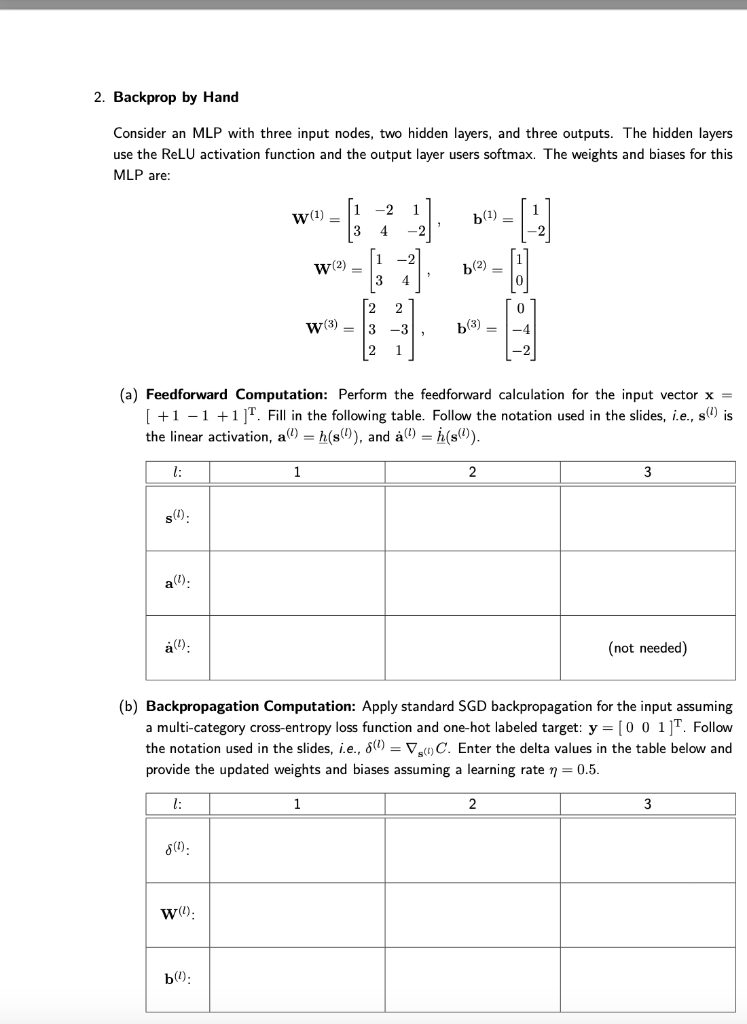

Consider an MLP with three input nodes, two hidden layers, and three outputs. The hidden layers use the ReLU activation function and the output layer uses softmax. The weights and biases for this MLP are: $W^{(1)}$ = [132412], $W^{(2)}$ = [1324], $W^{(3)}$ = 232231, $b^{(1)}$ = [12], $b^{(2)}$ = [10], $b^{(3)}$ = 042.

(a) Feedforward Computation: Perform the feedforward calculation for the input vector $x$ = [+11+1]$^T$. Fill in the following table. Follow the notation used in the slides, i.e., $s^{(l)}$ is the linear activation and $a^{(l)} = h(s^{(l)})$.

(b) Backpropagation Computation: Apply standard SGD backpropagation for the input, assuming a multi-category cross-entropy loss function and the one-hot labeled target $y = [0\ 0\ 1]^T$. Follow the notation used in the slides, i.e., $\delta^{(l)} = \partial C / \partial s^{(l)}$. Enter the delta values in the table below and provide the updated weights and biases, assuming a learning rate of 0.5.
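The two parts can be sketched in plain Python as follows. This is a minimal illustration, not the slides' reference solution. It assumes the convention $s^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}$ with one row of $W^{(l)}$ per output unit, which may differ from the slides. The numeric weights, biases, and input below are hypothetical placeholders chosen only to match the 3-2-2-3 shape; they are not the values from the question.

```python
import math

def matvec(W, v):
    # W is a list of rows (one row per output unit, an assumed convention).
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def relu(s):
    return [max(0.0, v) for v in s]

def softmax(s):
    m = max(s)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in s]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(a, y):
    return -sum(t * math.log(p) for p, t in zip(a, y) if t > 0)

def forward(x, Ws, bs):
    # Returns all linear activations s and all outputs a (a_all[0] is x).
    a, s_all, a_all = x, [], [x]
    for l, (W, b) in enumerate(zip(Ws, bs)):
        s = [z + bi for z, bi in zip(matvec(W, a), b)]
        a = softmax(s) if l == len(Ws) - 1 else relu(s)
        s_all.append(s)
        a_all.append(a)
    return s_all, a_all

def backward(y, Ws, s_all, a_all):
    # deltas[l] = dC/ds for layer l (0-based index).
    L = len(Ws)
    deltas = [None] * L
    # Softmax with cross-entropy gives delta = a - y at the output.
    deltas[-1] = [a - t for a, t in zip(a_all[-1], y)]
    for l in range(L - 2, -1, -1):
        W_next = Ws[l + 1]
        back = [sum(W_next[k][j] * deltas[l + 1][k] for k in range(len(W_next)))
                for j in range(len(s_all[l]))]
        # ReLU derivative: 1 where s > 0, else 0.
        deltas[l] = [g if s > 0 else 0.0 for g, s in zip(back, s_all[l])]
    return deltas

def sgd_step(Ws, bs, deltas, a_all, lr):
    # W[i][j] <- W[i][j] - lr * delta[i] * a_prev[j];  b[i] <- b[i] - lr * delta[i]
    new_Ws = [[[w - lr * d * a for w, a in zip(row, a_all[l])]
               for row, d in zip(W, deltas[l])] for l, W in enumerate(Ws)]
    new_bs = [[b - lr * d for b, d in zip(bvec, deltas[l])]
              for l, bvec in enumerate(bs)]
    return new_Ws, new_bs

# Hypothetical placeholder parameters (NOT the values from the question).
Ws = [[[0.1, -0.2, 0.3], [0.4, 0.1, -0.1]],
      [[0.2, -0.3], [0.1, 0.5]],
      [[0.3, -0.1], [0.2, 0.4], [-0.5, 0.1]]]
bs = [[0.1, 0.0], [0.0, 0.1], [0.0, 0.0, 0.0]]
x = [1.0, -1.0, 1.0]   # placeholder input
y = [0.0, 0.0, 1.0]    # one-hot target, as in part (b)

s_all, a_all = forward(x, Ws, bs)
deltas = backward(y, Ws, s_all, a_all)
new_Ws, new_bs = sgd_step(Ws, bs, deltas, a_all, lr=0.5)

loss_before = cross_entropy(a_all[-1], y)
loss_after = cross_entropy(forward(x, new_Ws, new_bs)[1][-1], y)
print("s:", s_all)
print("a:", a_all[1:])
print("deltas:", deltas)
print("loss before/after:", loss_before, loss_after)
```

Substituting the actual weights, biases, and input from the question (in whatever row/column layout the slides use) would produce the `s`, `a`, and delta entries that the two tables ask for.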
