Question:

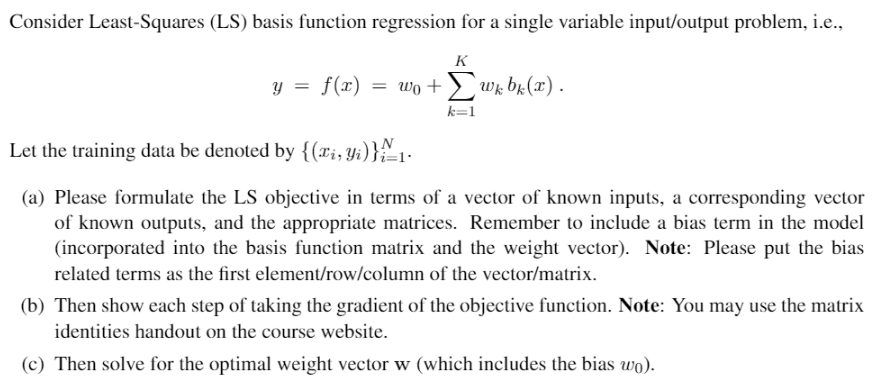

Consider Least-Squares (LS) basis function regression for a single variable input/output problem, i.e.,

$$y = f(x) = w_0 + \sum_{k=1}^{K} w_k b_k(x).$$

Let the training data be denoted by $\{(x_i, y_i)\}_{i=1}^{N}$.

(a) Please formulate the LS objective in terms of a vector of known inputs, a corresponding vector of known outputs, and the appropriate matrices. Remember to include a bias term in the model (incorporated into the basis function matrix and the weight vector). Note: Please put the bias-related terms as the first element/row/column of the vector/matrix.

(b) Then show each step of taking the gradient of the objective function. Note: You may use the matrix identities handout on the course website.

(c) Then solve for the optimal weight vector $\mathbf{w}$ (which includes the bias $w_0$).
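To make the setup in part (a) concrete, here is a minimal NumPy sketch of how the basis function matrix with a leading bias column might be built and the weights fitted. The function names `design_matrix` and `fit_least_squares`, the polynomial basis, and the synthetic data are all illustrative assumptions and are not part of the original question; the fit solves the standard normal equations and assumes the matrix $B^\top B$ is invertible.

```python
import numpy as np

def design_matrix(x, basis_functions):
    """Return the N x (K+1) matrix B whose first column is all ones (bias)
    and whose remaining columns are b_1(x), ..., b_K(x)."""
    x = np.asarray(x, dtype=float)
    columns = [np.ones_like(x)] + [b(x) for b in basis_functions]
    return np.column_stack(columns)

def fit_least_squares(x, y, basis_functions):
    """Fit w = [w_0, w_1, ..., w_K] by solving (B^T B) w = B^T y.
    Assumes B^T B is invertible (B has full column rank)."""
    B = design_matrix(x, basis_functions)
    y = np.asarray(y, dtype=float)
    w = np.linalg.solve(B.T @ B, B.T @ y)
    return w, B

# Hypothetical basis (K = 2): b_1(x) = x, b_2(x) = x^2.
basis = [lambda x: x, lambda x: x**2]

# Synthetic, noise-free data generated from known weights [1, 2, -3].
x_train = np.linspace(-1.0, 1.0, 20)
y_train = 1.0 + 2.0 * x_train - 3.0 * x_train**2

w_hat, B = fit_least_squares(x_train, y_train, basis)
print(w_hat)
```

Because the synthetic data lie exactly on the model, the recovered weights should match the generating ones up to floating-point error. For ill-conditioned bases, `np.linalg.lstsq(B, y, rcond=None)` is usually a safer choice than forming $B^\top B$ explicitly.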

Step by Step Solution
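One way to work through parts (a) to (c) is sketched below. The notation is an assumption where the question leaves it open: $N$ denotes the number of training pairs, $B$ denotes the basis function matrix (often written $\Phi$), and $B^\top B$ is assumed to be invertible.

```latex
% (a) Vectors and matrices, with bias terms placed first
\mathbf{x} = \begin{bmatrix} x_1 \\ \vdots \\ x_N \end{bmatrix}, \quad
\mathbf{y} = \begin{bmatrix} y_1 \\ \vdots \\ y_N \end{bmatrix}, \quad
\mathbf{w} = \begin{bmatrix} w_0 \\ w_1 \\ \vdots \\ w_K \end{bmatrix}, \quad
B = \begin{bmatrix}
1 & b_1(x_1) & \cdots & b_K(x_1) \\
\vdots & \vdots & \ddots & \vdots \\
1 & b_1(x_N) & \cdots & b_K(x_N)
\end{bmatrix} \in \mathbb{R}^{N \times (K+1)}

% LS objective
J(\mathbf{w}) = \sum_{i=1}^{N} \bigl(y_i - f(x_i)\bigr)^2
             = \lVert \mathbf{y} - B\mathbf{w} \rVert_2^2
             = (\mathbf{y} - B\mathbf{w})^\top (\mathbf{y} - B\mathbf{w})

% (b) Expand, then differentiate term by term
J(\mathbf{w}) = \mathbf{y}^\top \mathbf{y}
              - 2\,\mathbf{w}^\top B^\top \mathbf{y}
              + \mathbf{w}^\top B^\top B\,\mathbf{w}

\nabla_{\mathbf{w}} \bigl(\mathbf{y}^\top \mathbf{y}\bigr) = \mathbf{0}, \quad
\nabla_{\mathbf{w}} \bigl(\mathbf{w}^\top B^\top \mathbf{y}\bigr) = B^\top \mathbf{y}, \quad
\nabla_{\mathbf{w}} \bigl(\mathbf{w}^\top B^\top B\,\mathbf{w}\bigr) = 2\,B^\top B\,\mathbf{w}

\nabla_{\mathbf{w}} J(\mathbf{w}) = -2\,B^\top \mathbf{y} + 2\,B^\top B\,\mathbf{w}

% (c) Set the gradient to zero and solve
B^\top B\,\mathbf{w} = B^\top \mathbf{y}
\quad\Longrightarrow\quad
\mathbf{w}^{*} = (B^\top B)^{-1} B^\top \mathbf{y}
```

The expansion in (b) uses the fact that $\mathbf{y}^\top B\mathbf{w}$ is a scalar and therefore equals its transpose $\mathbf{w}^\top B^\top \mathbf{y}$, and the quadratic-term gradient uses the symmetry of $B^\top B$. Since the Hessian $2\,B^\top B$ is positive semidefinite, the stationary point is a minimizer; it is unique when $B$ has full column rank.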
