Question:

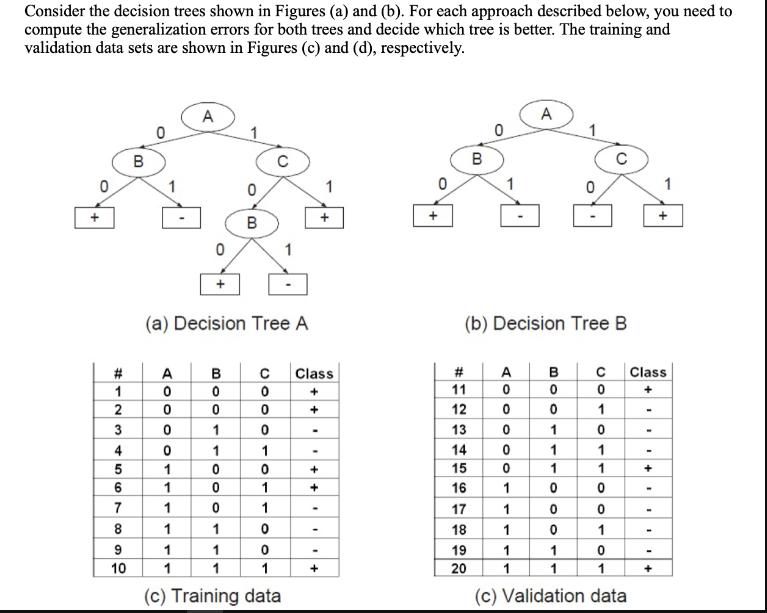

Consider the decision trees shown in Figures (a) and (b). For each approach described below, compute the generalization errors for both trees and decide which tree is better. The training and validation data sets are shown in Figures (c) and (d), respectively.

[Figures: (a) Decision Tree A; (b) Decision Tree B; (c) Training data; (d) Validation data. Each data table lists the instance number, the attributes A, B, and C, and the class label (+ or -).]

(a) Optimistic approach: the generalization error is assumed to equal the training error.

(b) Reduced error pruning approach: the generalization error is computed using the validation set shown in Figure (d), that is, generalization error = validation error.

(c) Pessimistic error approach: generalization error = training error + omega × complexity of the tree. Use omega = 1.0 and complexity of the tree = number of leaves / total number of training points.

(d) Minimum description length (MDL) approach. The total description length of a tree is given by

$$\text{Cost}(\text{tree}, \text{data}) = \text{Cost}(\text{tree}) + \text{Cost}(\text{data} \mid \text{tree})$$

- Each internal node of the tree is encoded by the ID of the splitting attribute. If there are $m$ attributes, the cost of encoding each attribute is $\log_2 m$ bits.
- Each leaf node is encoded using the ID of the class it is associated with. If there are $k$ classes, the cost of encoding a class is $\log_2 k$ bits.
- Cost(tree) is the cost of encoding all the nodes in the tree. To simplify the computation, assume that the total cost of the tree is obtained by adding up the costs of encoding each internal node and each leaf node.
- Cost(data | tree) is encoded using the classification errors the tree commits on the training set. Each error is encoded by $\log_2 n$ bits, where $n$ is the total number of training examples.
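The four estimates defined in parts (a) to (d) reduce to simple arithmetic once the error counts and node counts of a tree are known. The following is a minimal sketch of that arithmetic; the function and parameter names are hypothetical, and the numbers in the usage lines are made-up illustration values, not the counts from Figures (a) to (d).

```python
from math import log2


def optimistic_error(train_errors: int, n_train: int) -> float:
    """(a) Optimistic: generalization error is taken to be the training error."""
    return train_errors / n_train


def validation_error(val_errors: int, n_val: int) -> float:
    """(b) Reduced error pruning: generalization error is the validation error."""
    return val_errors / n_val


def pessimistic_error(train_errors: int, n_train: int,
                      n_leaves: int, omega: float = 1.0) -> float:
    """(c) Pessimistic: training error + omega * (leaves / training points)."""
    return train_errors / n_train + omega * n_leaves / n_train


def mdl_cost(n_internal: int, n_leaves: int, train_errors: int,
             n_train: int, m_attributes: int, k_classes: int) -> float:
    """(d) MDL: Cost(tree) + Cost(data | tree), in bits."""
    # Each internal node costs log2(m) bits, each leaf costs log2(k) bits.
    cost_tree = n_internal * log2(m_attributes) + n_leaves * log2(k_classes)
    # Each training error costs log2(n) bits.
    cost_data = train_errors * log2(n_train)
    return cost_tree + cost_data


# Hypothetical tree (NOT from the exercise): 2 internal nodes, 3 leaves,
# 2 errors on 8 training points, 4 attributes, 2 classes.
print(optimistic_error(2, 8))
print(pessimistic_error(2, 8, n_leaves=3))
print(mdl_cost(2, 3, 2, 8, m_attributes=4, k_classes=2))
```

Under each approach, the tree with the smaller value is preferred. To answer the exercise, count the internal nodes, leaves, and misclassified instances for Tree A and Tree B from the figures and pass those counts to the functions.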

Step by Step Solution

To compute the generalization errors for both Decision Tree A and Decision Tree B and decide which tree is better, we will apply the approaches as described, starting with the optimistic approach an...
