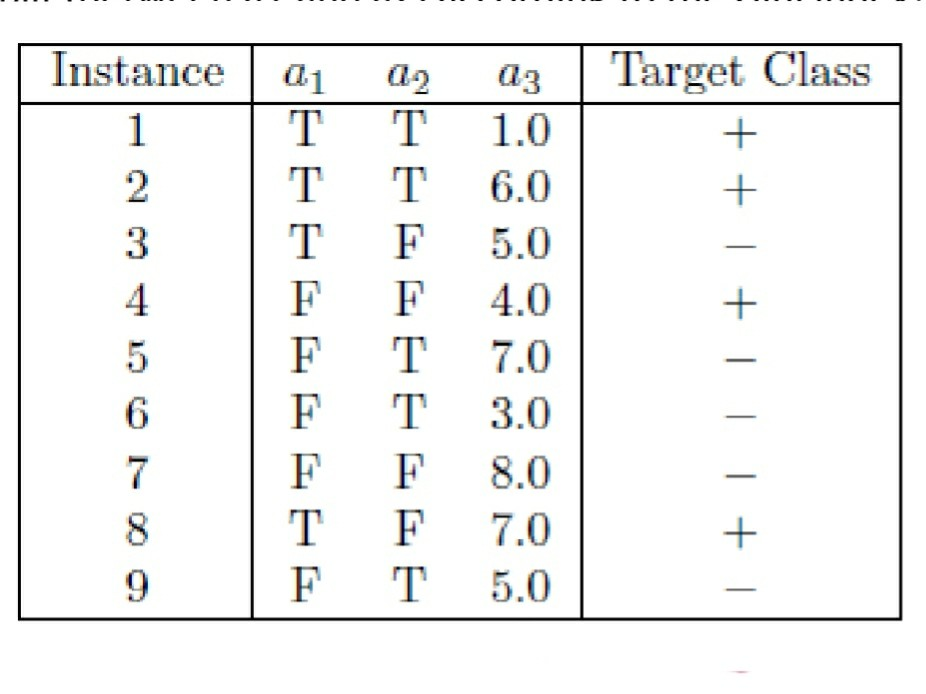

Consider the examples shown below for a binary classification problem.

a. What is the entropy of this collection of training examples with respect to the class attribute?

b. What are the information gains of a1 and a2 relative to these training examples?

c. For a3, which is a continuous attribute, compute the information gain for every possible split.

d. Calculate the Split Info and Gain Ratio.

e. What is the best split (among a1, a2, and a3) according to the information gain?

f. What is the best split (between a1 and a2) according to the misclassification error rate?

g. What is the best split (between a1 and a2) according to the Gini index?
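The question does not state the formulas it relies on. As a reference sketch, the conventional decision-tree definitions are assumed here: $p(i \mid t)$ is the fraction of examples at node $t$ that belong to class $i$, $N$ is the number of examples at the parent node, and $N(v_j)$ is the number sent to child $v_j$ by the split. The symbols are illustrative and not taken from the original page.

$$
\begin{aligned}
\mathrm{Entropy}(t) &= -\sum_{i} p(i \mid t)\,\log_2 p(i \mid t) \\
\mathrm{Gini}(t) &= 1 - \sum_{i} p(i \mid t)^2 \\
\mathrm{Error}(t) &= 1 - \max_{i}\, p(i \mid t) \\
\mathrm{Gain} &= \mathrm{Entropy}(\text{parent}) - \sum_{j} \frac{N(v_j)}{N}\,\mathrm{Entropy}(v_j) \\
\mathrm{SplitInfo} &= -\sum_{j} \frac{N(v_j)}{N}\,\log_2 \frac{N(v_j)}{N} \\
\mathrm{GainRatio} &= \frac{\mathrm{Gain}}{\mathrm{SplitInfo}}
\end{aligned}
$$

For parts f and g, the same weighted sum over children is applied with Error or Gini in place of Entropy, and the split with the lowest weighted impurity is preferred.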

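| Instance | a1 | a2 | a3 | Target Class |
|---|---|---|---|---|
| 1 | T | T | 1.0 | + |
| 2 | T | T | 6.0 | + |
| 3 | T | F | 5.0 | - |
| 4 | F | F | 4.0 | + |
| 5 | F | T | 7.0 | - |
| 6 | F | T | 3.0 | - |
| 7 | F | F | 8.0 | - |
| 8 | T | F | 7.0 | + |
| 9 | F | T | 5.0 | - |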
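Parts a through g can be checked mechanically. The following is a minimal Python sketch, not a reference solution. It assumes one particular reading of the table above: the class labels and the a1 value of instance 9 are partly illegible in the text version, so the `DATA` rows are an assumption and should be verified against the image. All function names are hypothetical helpers introduced for illustration.

```python
from collections import Counter
from math import log2

# Assumed reading of the table: (a1, a2, a3, class).
# The class column and instance 9's a1 value are assumptions; check the image.
DATA = [
    ("T", "T", 1.0, "+"),
    ("T", "T", 6.0, "+"),
    ("T", "F", 5.0, "-"),
    ("F", "F", 4.0, "+"),
    ("F", "T", 7.0, "-"),
    ("F", "T", 3.0, "-"),
    ("F", "F", 8.0, "-"),
    ("T", "F", 7.0, "+"),
    ("F", "T", 5.0, "-"),
]


def fractions(values):
    """Relative frequency of each distinct value in a list."""
    n = len(values)
    return [count / n for count in Counter(values).values()]


def entropy(values):
    return -sum(p * log2(p) for p in fractions(values))


def gini(values):
    return 1.0 - sum(p * p for p in fractions(values))


def error(values):
    return 1.0 - max(fractions(values))


def partition(rows, key):
    """Group the class labels of rows by the outcome of key(row)."""
    groups = {}
    for row in rows:
        groups.setdefault(key(row), []).append(row[-1])
    return list(groups.values())


def weighted(impurity, groups):
    """Size-weighted average impurity over the child nodes."""
    n = sum(len(g) for g in groups)
    return sum(len(g) / n * impurity(g) for g in groups)


def gain(rows, key, impurity=entropy):
    """Parent impurity minus weighted child impurity for the split key."""
    parent = impurity([row[-1] for row in rows])
    return parent - weighted(impurity, partition(rows, key))


def split_info(rows, key):
    """Entropy of the partition sizes induced by key."""
    return entropy([key(row) for row in rows])


def gain_ratio(rows, key):
    return gain(rows, key) / split_info(rows, key)


def thresholds(rows, col=2):
    """Midpoints between consecutive distinct values of a continuous column."""
    values = sorted({row[col] for row in rows})
    return [(lo + hi) / 2 for lo, hi in zip(values, values[1:])]


if __name__ == "__main__":
    print("entropy:", round(entropy([row[-1] for row in DATA]), 4))
    for name, col in (("a1", 0), ("a2", 1)):
        key = lambda row, c=col: row[c]
        groups = partition(DATA, key)
        print(name, "gain:", round(gain(DATA, key), 4),
              "gain ratio:", round(gain_ratio(DATA, key), 4),
              "error:", round(weighted(error, groups), 4),
              "gini:", round(weighted(gini, groups), 4))
    for t in thresholds(DATA):
        print("a3 <=", t, "gain:", round(gain(DATA, lambda row: row[2] <= t), 4))
```

Under the assumed table, the class entropy comes out near 0.991, the information gain is about 0.229 for a1 and about 0.007 for a2, and the best a3 threshold is 2.0 with a gain of about 0.143. On that reading a1 is the preferred split by information gain, and it also has the lower weighted error rate (2/9 versus 4/9) and the lower weighted Gini index (about 0.344 versus 0.489). These figures change if the table is read differently.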
