Question:

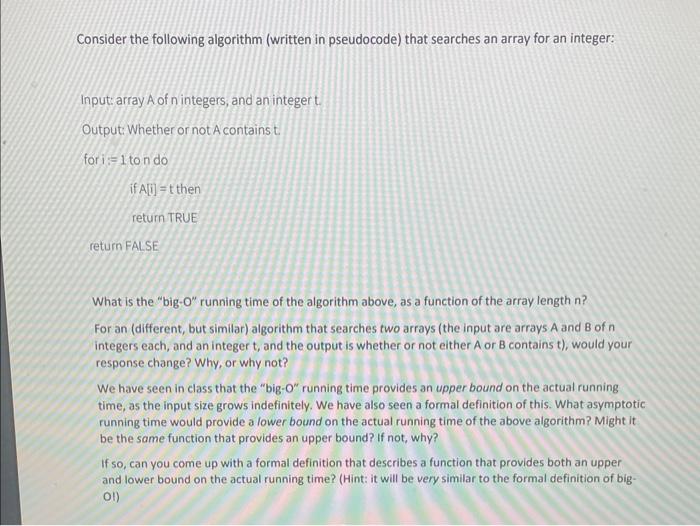

Consider the following algorithm (written in pseudocode) that searches an array for an integer:

    Input: array A of n integers, and an integer t
    Output: whether or not A contains t

    for i := 1 to n do
        if A[i] = t then
            return TRUE
    return FALSE

What is the "big-O" running time of the algorithm above, as a function of the array length n?

For a different, but similar, algorithm that searches two arrays (the inputs are arrays A and B of n integers each, and an integer t, and the output is whether or not either A or B contains t), would your answer change? Why, or why not?

We have seen in class that the "big-O" running time provides an upper bound on the actual running time as the input size grows indefinitely. We have also seen a formal definition of this. What asymptotic running time would provide a lower bound on the actual running time of the above algorithm? Might it be the same function that provides an upper bound? If not, why not? If so, can you come up with a formal definition that describes a function that provides both an upper and a lower bound on the actual running time? (Hint: it will be very similar to the formal definition of big-O.)
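A minimal Python sketch of the pseudocode, assuming Python 3.8+, may help make the running time concrete. The function names `contains` and `contains_either` are hypothetical, and the comparison counter is an addition that is not part of the original algorithm; it only records how many times the test `A[i] = t` is evaluated. The two-array variant is one possible reading of the second question (search A, then B).

```python
from typing import Sequence, Tuple


def contains(a: Sequence[int], t: int) -> Tuple[bool, int]:
    """Linear search mirroring the pseudocode.

    Returns (found, comparisons); the comparison counter is an
    illustrative addition, not part of the original algorithm.
    """
    comparisons = 0
    for x in a:  # "for i := 1 to n do" (Python indexes from 0)
        comparisons += 1
        if x == t:  # "if A[i] = t then return TRUE"
            return True, comparisons
    return False, comparisons  # loop finished without a match


def contains_either(a: Sequence[int], b: Sequence[int], t: int) -> Tuple[bool, int]:
    """Hypothetical two-array variant: search a first, then b."""
    found, used = contains(a, t)
    if found:
        return True, used
    found_b, used_b = contains(b, t)
    return found_b, used + used_b


if __name__ == "__main__":
    for n in (10, 100, 1000):
        a = list(range(n))
        b = list(range(n, 2 * n))
        best = contains(a, 0)[1]                  # t is the first element
        worst = contains(a, -1)[1]                # t is absent from a
        worst_two = contains_either(a, b, -1)[1]  # t is absent from both
        print(f"n={n}: best={best}, worst={worst}, two-array worst={worst_two}")
```

Counting comparisons instead of measuring wall-clock time keeps the illustration independent of the machine. The best case uses a single comparison, while the worst case uses n comparisons for one array and 2n for two; the constant factor 2 does not change the growth rate.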

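For reference, the standard textbook definitions of the three asymptotic bounds are given below; the notation may differ slightly from the definition seen in class. Here f(n) stands for the actual running time and g(n) for the bounding function.

```latex
\begin{aligned}
f(n) \in O(g(n)) &\iff \exists\, c > 0,\ n_0 \ge 1 \text{ such that } 0 \le f(n) \le c\,g(n) \text{ for all } n \ge n_0,\\
f(n) \in \Omega(g(n)) &\iff \exists\, c > 0,\ n_0 \ge 1 \text{ such that } 0 \le c\,g(n) \le f(n) \text{ for all } n \ge n_0,\\
f(n) \in \Theta(g(n)) &\iff \exists\, c_1, c_2 > 0,\ n_0 \ge 1 \text{ such that } 0 \le c_1\,g(n) \le f(n) \le c_2\,g(n) \text{ for all } n \ge n_0.
\end{aligned}
```

Equivalently, f(n) is in Θ(g(n)) exactly when it is in both O(g(n)) and Ω(g(n)). As a worked example, a worst-case comparison count of f(n) = 2n satisfies the Θ condition with g(n) = n, c_1 = 1, c_2 = 2 and n_0 = 1.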