Question:

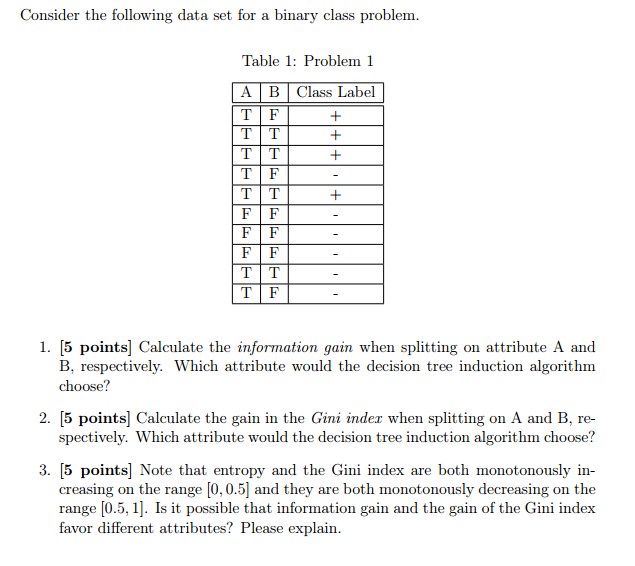

Consider the following data set for a binary class problem.

1. Calculate the information gain when splitting on attribute A and on attribute B, respectively. Which attribute would the decision tree induction algorithm choose?
2. Calculate the gain in the Gini index when splitting on A and on B, respectively. Which attribute would the decision tree induction algorithm choose?
3. Note that entropy and the Gini index, viewed as functions of the fraction of records in one class, are both monotonically increasing on the range [0, 0.5] and both monotonically decreasing on the range [0.5, 1]. Is it possible that information gain and the gain in the Gini index favor different attributes? Please explain.
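The two gains asked for above can be computed with a few lines of code. The sketch below is a minimal illustration, assuming the usual definitions: entropy is the sum of p_i log2(1/p_i), the Gini index is 1 minus the sum of p_i squared, and the gain is the parent impurity minus the size-weighted average impurity of the child nodes. The function names and the class counts in the usage example are hypothetical; the counts are not taken from the data set in the image and should be replaced with the actual tallies for A and B.

```python
from math import log2


def entropy(counts):
    """Entropy (in bits) of a node given its per-class record counts."""
    total = sum(counts)
    if total == 0:
        return 0.0  # an empty node contributes no impurity
    # p * log2(1/p) is the same as -p * log2(p); zero counts are skipped
    return sum((c / total) * log2(total / c) for c in counts if c > 0)


def gini(counts):
    """Gini index of a node given its per-class record counts."""
    total = sum(counts)
    if total == 0:
        return 0.0
    return 1.0 - sum((c / total) ** 2 for c in counts)


def gain(parent, children, impurity):
    """Parent impurity minus the size-weighted impurity of the children."""
    n = sum(parent)
    weighted = sum(sum(child) / n * impurity(child) for child in children)
    return impurity(parent) - weighted


# Hypothetical counts [positive, negative]; NOT the data set from the image.
parent = [5, 5]
split_children = [[4, 1], [1, 4]]  # records with attribute = T, attribute = F

print("information gain:", gain(parent, split_children, entropy))
print("Gini gain:", gain(parent, split_children, gini))
```

Calling `gain` once per attribute with each impurity function gives the four numbers the question asks for, and comparing the rankings under `entropy` and `gini` is one way to check whether the two criteria pick the same attribute.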
