
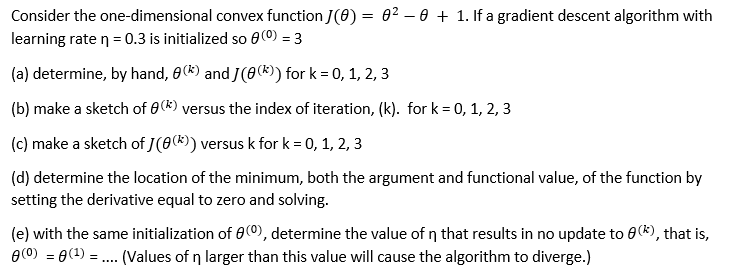

Question: Consider the one-dimensional convex function $J(\theta) = \theta^2 + 1$. A gradient descent algorithm with learning rate $\alpha = 0.3$ is initialized at $\theta^{(0)} = 3$.

(a) Determine, by hand, $\theta^{(k)}$ and $J(\theta^{(k)})$ for $k = 0, 1, 2, 3$.

(b) Sketch $\theta^{(k)}$ versus the iteration index $k$ for $k = 0, 1, 2, 3$.

(c) Sketch $J(\theta^{(k)})$ versus $k$ for $k = 0, 1, 2, 3$.

(d) Determine the location of the minimum of the function, both the argument and the function value, by setting the derivative equal to zero and solving.

(e) With the same initialization $\theta^{(0)}$, determine the value of $\alpha$ that results in no update to $\theta^{(k)}$, that is, $\theta^{(0)} = \theta^{(1)} =$ (Values of $\alpha$ larger than this value will cause the algorithm to diverge.)
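A hand calculation for parts (a) and (d), given as a sketch. It writes the parameter as $\theta$ and the learning rate as $\alpha$ (the original symbols were lost in extraction) and assumes the standard update $\theta^{(k+1)} = \theta^{(k)} - \alpha\,J'(\theta^{(k)})$. Because $J'(\theta) = 2\theta$, every step multiplies $\theta$ by the same factor $1 - 2\alpha = 0.4$:

$$
\begin{aligned}
J'(\theta) &= 2\theta \\
\theta^{(k+1)} &= \theta^{(k)} - 0.3\,\bigl(2\theta^{(k)}\bigr) = 0.4\,\theta^{(k)} \\
\theta^{(k)} &= 3\,(0.4)^k
\end{aligned}
$$

| $k$ | $\theta^{(k)}$ | $J(\theta^{(k)}) = (\theta^{(k)})^2 + 1$ |
|---|---|---|
| 0 | 3 | 10 |
| 1 | 1.2 | 2.44 |
| 2 | 0.48 | 1.2304 |
| 3 | 0.192 | 1.036864 |

For (d), setting $J'(\theta) = 2\theta = 0$ gives $\theta^* = 0$ and $J(\theta^*) = 1$; since $J''(\theta) = 2 > 0$, this point is the minimum. The table also gives the shapes for (b) and (c): $\theta^{(k)}$ decays geometrically toward 0, and $J(\theta^{(k)})$ decays toward 1.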

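The same iteration can be checked numerically. Below is a minimal Python sketch that assumes the plain update $\theta \leftarrow \theta - \alpha \cdot 2\theta$; the function name `grad_descent_iterates` is hypothetical. The printed `(k, theta, J)` rows are the points you would plot for the sketches in (b) and (c).

```python
def grad_descent_iterates(theta0, alpha, num_steps):
    """Run gradient descent on J(theta) = theta**2 + 1.

    Returns a list of (k, theta_k, J(theta_k)) tuples for k = 0..num_steps.
    """
    def cost(theta):
        return theta ** 2 + 1  # J(theta)

    def grad(theta):
        return 2 * theta  # J'(theta)

    rows = []
    theta = theta0
    for k in range(num_steps + 1):
        rows.append((k, theta, cost(theta)))
        # Standard update: theta <- theta - alpha * J'(theta)
        theta = theta - alpha * grad(theta)
    return rows


if __name__ == "__main__":
    for k, theta, j in grad_descent_iterates(theta0=3.0, alpha=0.3, num_steps=3):
        print(f"k={k}  theta={theta:.6f}  J={j:.6f}")
    # Prints:
    # k=0  theta=3.000000  J=10.000000
    # k=1  theta=1.200000  J=2.440000
    # k=2  theta=0.480000  J=1.230400
    # k=3  theta=0.192000  J=1.036864
```

Floating-point rounding makes the raw values differ from the exact hand results in the last few binary digits (for example, 1.2000000000000002), which is why the output is formatted to six decimals.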
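Part (e) is partly lost in the source (the value after $\theta^{(0)} = \theta^{(1)} =$ is missing), so the following is one reading of it, not a confirmed answer. With $\theta^{(k+1)} = (1 - 2\alpha)\,\theta^{(k)}$, the iterates move toward the minimum only when $|1 - 2\alpha| < 1$, that is, $0 < \alpha < 1$. At $\alpha = 1$ the factor is $-1$: $\theta$ flips between 3 and -3 and $J$ stays at 10, so the algorithm makes no progress. Any larger $\alpha$ gives $|1 - 2\alpha| > 1$ and the iterates diverge, which matches the remark in the question. (Taken literally, $\theta^{(1)} = \theta^{(0)}$ holds only at $\alpha = 0$.) The sketch below classifies a few step sizes; `step_factor`, `run`, and `classify_step_size` are hypothetical helper names.

```python
def step_factor(alpha):
    """For J(theta) = theta**2 + 1, one step gives theta_next = (1 - 2*alpha) * theta."""
    return 1.0 - 2.0 * alpha


def run(theta0, alpha, num_steps):
    """Return [theta_0, ..., theta_num_steps] using the standard gradient descent update."""
    thetas = [theta0]
    for _ in range(num_steps):
        theta = thetas[-1]
        thetas.append(theta - alpha * 2.0 * theta)  # J'(theta) = 2 * theta
    return thetas


def classify_step_size(alpha):
    """Label the long-run behaviour for a positive learning rate alpha.

    Uses exact comparison with 1.0, which is only meaningful for exactly
    representable alphas such as 1.0.
    """
    r = abs(step_factor(alpha))
    if r < 1.0:
        return "converges"
    if r == 1.0:
        return "no progress"
    return "diverges"


if __name__ == "__main__":
    for alpha in (0.3, 0.5, 1.0, 1.1):
        thetas = run(3.0, alpha, 4)
        costs = [t ** 2 + 1 for t in thetas]
        print(f"alpha={alpha}: {classify_step_size(alpha)}; "
              f"theta={[round(t, 4) for t in thetas]}; J={[round(c, 4) for c in costs]}")
```

For $\alpha = 1.0$ the sketch produces $\theta = [3, -3, 3, -3, 3]$ with $J = 10$ at every step, while $\alpha = 0.5$ reaches the minimum in a single step because the factor $1 - 2\alpha$ is zero.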