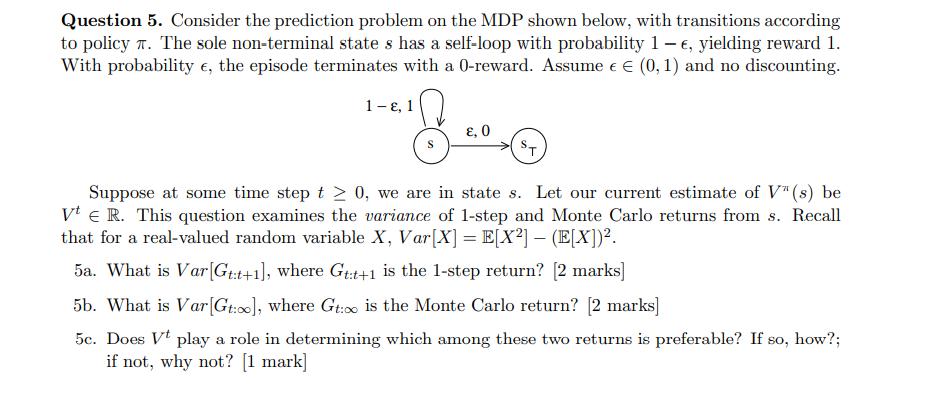

Question: Consider the prediction problem on the MDP shown below, with transitions according to policy. The sole non-terminal state s has a self-loop with probability

Consider the prediction problem on the MDP shown below, with transitions according to policy. The sole non-terminal state s has a self-loop with probability 1-e, yielding reward 1. With probability , the episode terminates with a 0-reward. Assume (0, 1) and no discounting. 1-E, 1 " 8,0 Suppose at some time step t 0, we are in state s. Let our current estimate of V" (s) be VER. This question examines the variance of 1-step and Monte Carlo returns from s. Recall that for a real-valued random variable X, Var[X] = E[X2] - (E[X]). 5a. What is Var[Gt:t+1], where Gt:t+1 is the 1-step return? [2 marks] 5b. What is Var[Gt:], where Gt: is the Monte Carlo return? [2 marks] 5c. Does Vt play a role in determining which among these two returns is preferable? If so, how?; if not, why not? [1 mark]

Step by Step Solution

3.52 Rating (152 Votes )

There are 3 Steps involved in it

The detailed ... View full answer

Get step-by-step solutions from verified subject matter experts