Question:

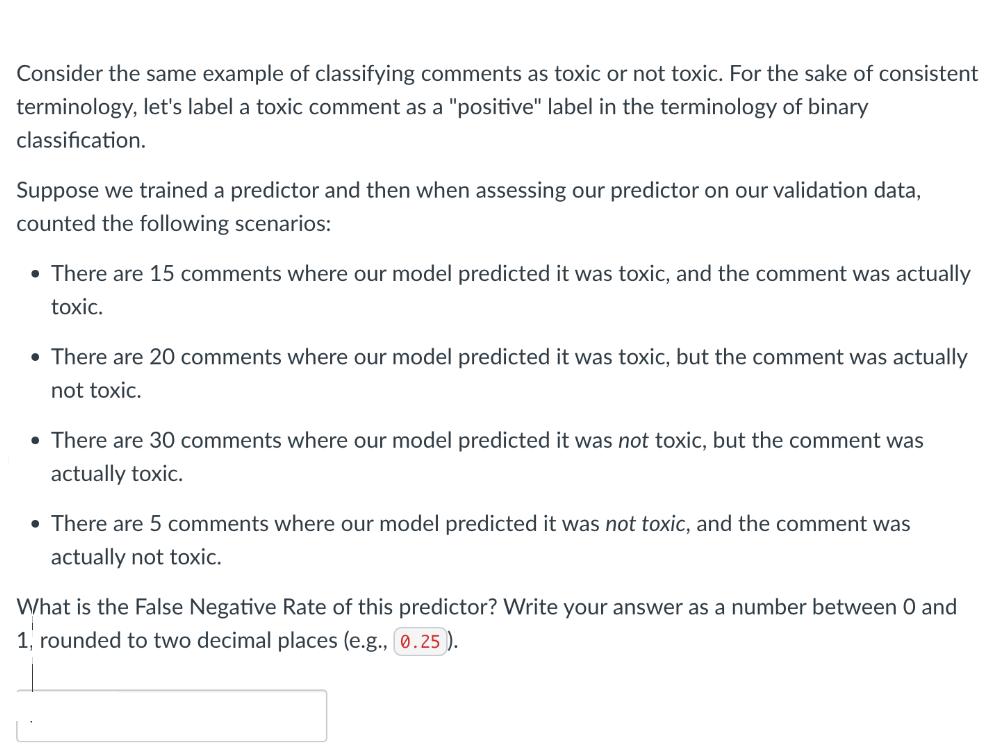

Consider the same example of classifying comments as toxic or not toxic. For the sake of consistent terminology, let's treat a toxic comment as the "positive" label in binary-classification terms. Suppose we trained a predictor and, when assessing it on our validation data, counted the following outcomes:

- 15 comments where our model predicted toxic, and the comment was actually toxic.
- 20 comments where our model predicted toxic, but the comment was actually not toxic.
- 30 comments where our model predicted not toxic, but the comment was actually toxic.
- 5 comments where our model predicted not toxic, and the comment was actually not toxic.

What is the False Negative Rate of this predictor? Write your answer as a number between 0 and 1, rounded to two decimal places (e.g., 0.25).

Step by Step Solution

The false negative rate is the proportion of actual positive instances that are incorrectly predicted as negative.
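With toxic as the positive class, the 30 "predicted not toxic, actually toxic" comments are false negatives (FN) and the 15 "predicted toxic, actually toxic" comments are true positives (TP). So FNR = FN / (FN + TP) = 30 / (30 + 15) = 30 / 45 ≈ 0.67. The sketch below writes out that arithmetic in Python. The helper name `false_negative_rate` is a hypothetical illustration, not part of the original solution.

```python
def false_negative_rate(tp: int, fn: int) -> float:
    """Hypothetical helper: FNR = FN / (FN + TP), the share of actual positives predicted negative."""
    actual_positives = tp + fn
    if actual_positives == 0:
        raise ValueError("FNR is undefined when there are no actual positives")
    return fn / actual_positives


# Counts from the question, with "toxic" as the positive label.
tp = 15  # predicted toxic, actually toxic
fp = 20  # predicted toxic, actually not toxic (not used by FNR)
fn = 30  # predicted not toxic, actually toxic
tn = 5   # predicted not toxic, actually not toxic (not used by FNR)

fnr = false_negative_rate(tp, fn)
print(f"{fnr:.2f}")  # prints 0.67
```

Only the actual-positive row of the confusion matrix (TP and FN) enters the formula; the 20 false positives and 5 true negatives do not affect the FNR.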