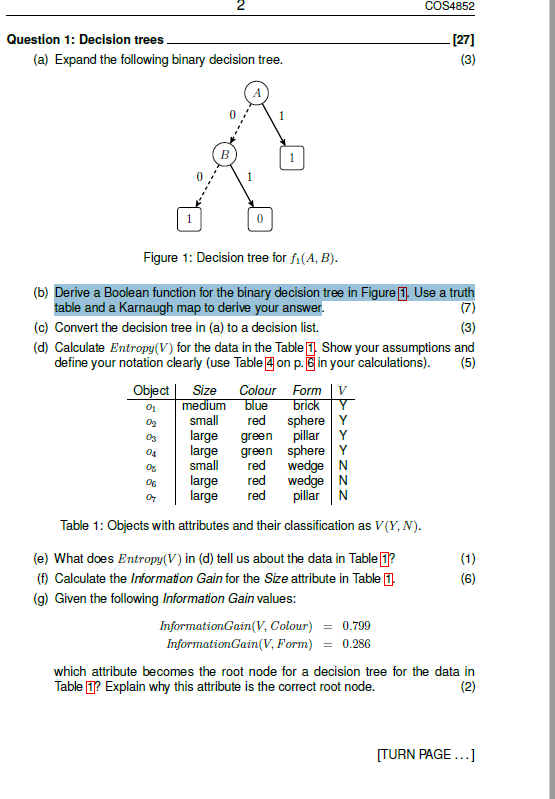

COS4852 Question 1: Decision trees [27]

(a) Expand the following binary decision tree. (3)

[Figure 1: Decision tree for f1(A,B).]

(b) Derive a Boolean function for the binary decision tree in Figure 1. Use a truth table and a Karnaugh map to derive your answer. (7)

(c) Convert the decision tree in (a) to a decision list. (3)

(d) Calculate Entropy(V) for the data in Table 1. Show your assumptions and define your notation clearly (use the table on p. 6 in your calculations). (5)

| Object | Size | Colour | Form | V |
|---|---|---|---|---|
| O1 | medium | blue | brick | Y |
| O2 | small | red | sphere | Y |
| O3 | large | green | pillar | Y |
| O4 | large | green | sphere | ? |
| O5 | small | red | wedge | N |
| O6 | large | red | wedge | N |
| O7 | large | red | pillar | N |

Table 1: Objects with attributes and their classification as V (Y, N).

(e) What does Entropy(V) in (d) tell us about the data in Table 1? (1)

(f) Calculate the Information Gain for the Size attribute in Table 1. (6)

(g) Given the following Information Gain values:

Information Gain(V, Colour) = 0.799
Information Gain(V, Form) = 0.286

which attribute becomes the root node for a decision tree for the data in Table 1? Explain why this attribute is the correct root node. (2)
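Parts (d), (f) and (g) rely on the standard ID3 definitions Entropy(S) = −Σ p_i log2(p_i), summed over the classes i, and Gain(S, A) = Entropy(S) − Σ_v (|S_v| / |S|) Entropy(S_v), summed over the values v of attribute A. The Python sketch below is one way to check hand calculations. It assumes base-2 logarithms and rows stored as dictionaries keyed by attribute name; the function names `entropy`, `information_gain` and `best_attribute` are hypothetical helpers, not part of the course material.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a sequence of class labels, e.g. ["Y", "N", ...]."""
    total = len(labels)
    counts = Counter(labels)
    # Sum -p * log2(p) over every class that actually occurs (p > 0).
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def information_gain(rows, attribute, target="V"):
    """Entropy of the target minus the weighted entropy after splitting on `attribute`."""
    parent = entropy([row[target] for row in rows])
    # Group the target labels by the value the attribute takes in each row.
    partitions = {}
    for row in rows:
        partitions.setdefault(row[attribute], []).append(row[target])
    # Weighted average entropy of the subsets (the "remainder").
    remainder = sum(
        len(subset) / len(rows) * entropy(subset) for subset in partitions.values()
    )
    return parent - remainder


def best_attribute(gains):
    """ID3-style choice: the attribute with the largest information gain."""
    return max(gains, key=gains.get)
```

Comparing only the two gains quoted in (g), `best_attribute({"Colour": 0.799, "Form": 0.286})` returns `"Colour"`, because ID3 places the attribute with the highest information gain at the root. For a full comparison, the Size gain computed in (f) would be added to the same dictionary.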
