Question: Dear ElderMetalBadger4, here is the complete question. Thanks.

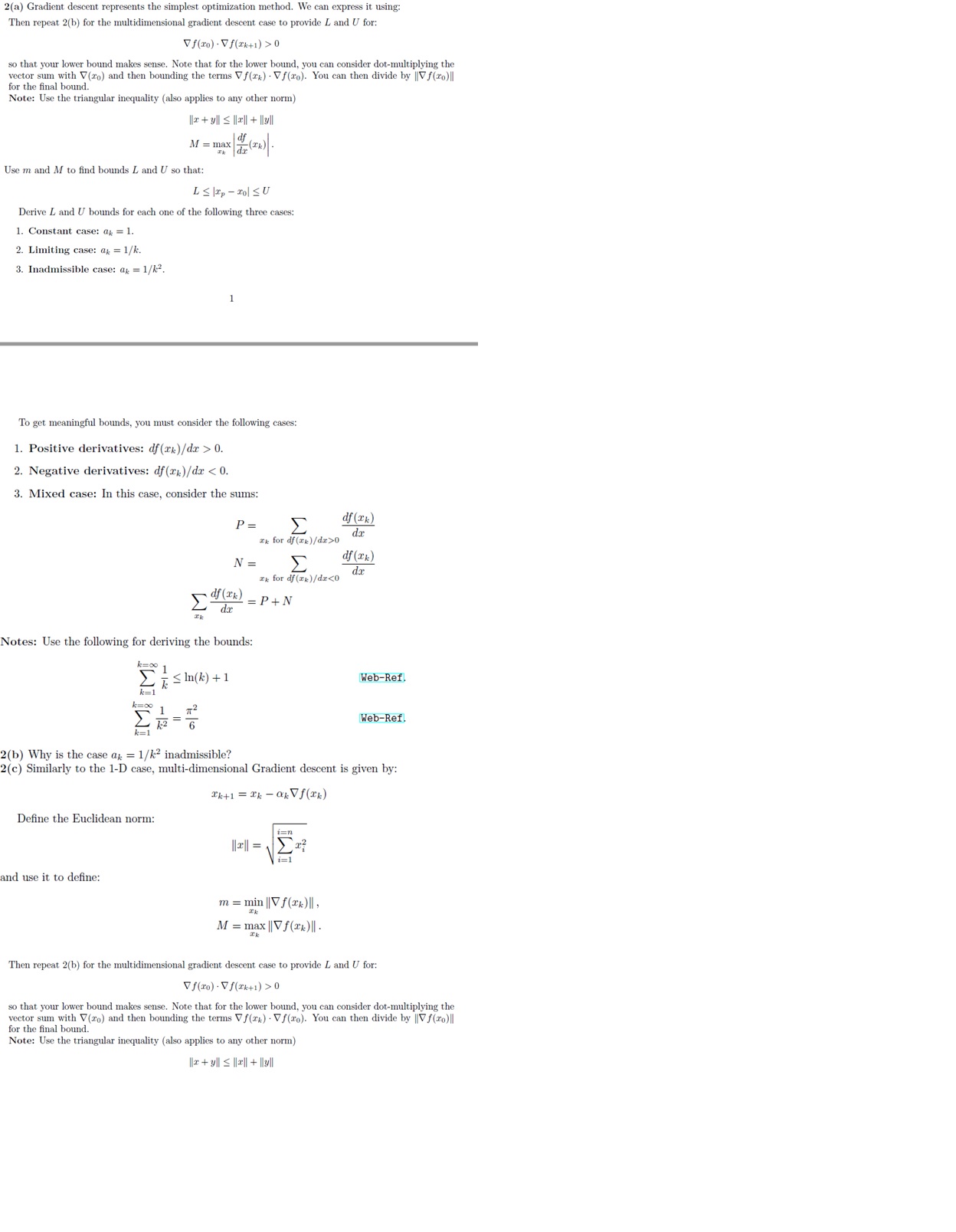

2(a) Gradient descent represents the simplest optimization method. We can express it using:

Then repeat 2(b) for the multidimensional gradient descent case to provide $L$ and $U$, with $\nabla f(x_0) \cdot \nabla f(x_{k+1}) > 0$ so that your lower bound makes sense. Note that for the lower bound, you can consider dot-multiplying the vector sum with $\nabla f(x_0)$ and then bounding the terms $\nabla f(x_k) \cdot \nabla f(x_0)$. You can then divide by $\|\nabla f(x_0)\|$ for the final bound.

Note: Use the triangle inequality (it also applies to any other norm):

$$\|x + y\| \le \|x\| + \|y\|$$

Define $m$ and $M$ from the derivative values $df(x_k)/dx$ at the iterates $x_k$ (with $M$ a maximum over $k$). Use $m$ and $M$ to find bounds $L$ and $U$ so that:

$$L \le |x_p - x_0| \le U$$

Derive $L$ and $U$ bounds for each one of the following three cases:

1. Constant case: $\alpha_k = 1$.
2. Limiting case: $\alpha_k = 1/k$.
3. Inadmissible case: $\alpha_k = 1/k^2$.

To get meaningful bounds, you must consider the following cases:

1. Positive derivatives: $df(x_k)/dx > 0$.
2. Negative derivatives: $df(x_k)/dx < 0$.
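The bounds asked for above can be checked numerically. The sketch below makes several assumptions not stated in the recovered text: the update is taken to be the standard rule $x_{k+1} = x_k - \alpha_k \, df(x_k)/dx$ with $k$ starting at 1, $m$ and $M$ are taken to be the smallest and largest values of $|df(x_k)/dx|$ over the iterates, and the test function $f(x) = e^x$ is a hypothetical choice whose derivative is always positive. Under these assumptions the steps telescope to $x_p - x_0 = -\sum_k \alpha_k \, df(x_k)/dx$, which suggests the candidate bounds $L = m \sum_k \alpha_k$ and $U = M \sum_k \alpha_k$ when the derivatives keep one sign. This is one possible reading of the exercise, not a confirmed solution.

```python
import math


def gradient_descent_1d(df, x0, step, num_steps):
    """Run x_{k+1} = x_k - step(k) * df(x_k) for k = 1..num_steps.

    Returns the list of iterates and the derivative values used at each step.
    """
    xs = [x0]
    grads = []
    for k in range(1, num_steps + 1):
        g = df(xs[-1])
        grads.append(g)
        xs.append(xs[-1] - step(k) * g)
    return xs, grads


def displacement_bounds(grads, step):
    """Candidate bounds L = m * sum(alpha_k), U = M * sum(alpha_k).

    Assumes m and M are the min and max of |df(x_k)/dx| over the iterates
    and that all derivatives share the same sign.
    """
    mags = [abs(g) for g in grads]
    m, M = min(mags), max(mags)
    total_step = sum(step(k) for k in range(1, len(grads) + 1))
    return m * total_step, M * total_step


# Hypothetical test function: f(x) = exp(x), so df/dx = exp(x) > 0 everywhere.
df = math.exp

# The three step-size cases from the question.
schedules = {
    "constant": lambda k: 1.0,
    "limiting": lambda k: 1.0 / k,
    "inadmissible": lambda k: 1.0 / k**2,
}

results = {}
for name, step in schedules.items():
    xs, grads = gradient_descent_1d(df, x0=0.0, step=step, num_steps=20)
    L, U = displacement_bounds(grads, step)
    results[name] = (L, abs(xs[-1] - xs[0]), U)

for name, (L, dist, U) in results.items():
    print(f"{name}: L={L:.6f} <= |x_p - x_0|={dist:.6f} <= U={U:.6f}")
```

In the third case the sum of $1/k^2$ converges, so $U$ stays bounded however many steps are taken, which is one way to see why that schedule is called inadmissible.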
