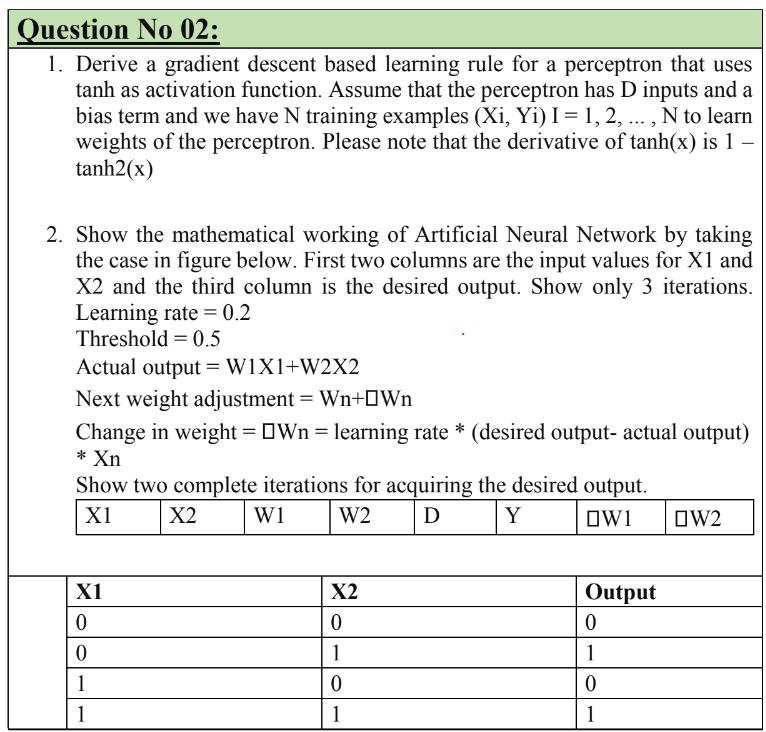

Question: Derive a gradient-descent-based learning rule for a perceptron that uses tanh as its activation function. Assume that the perceptron has D inputs and a bias term, and that we have N training examples $(\mathbf{x}_i, y_i)$, $i = 1, \dots, N$, to learn the weights of the perceptron. Note that the derivative of $\tanh(x)$ is $1 - \tanh^2(x)$.
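A sketch of one possible derivation, assuming a squared-error loss (the question does not specify the loss). Write $a_i = \mathbf{w}^\top \mathbf{x}_i + b$ and $\hat y_i = \tanh(a_i)$ with $\mathbf{w} \in \mathbb{R}^D$, and let $E = \tfrac{1}{2}\sum_{i=1}^{N} (y_i - \hat y_i)^2$. By the chain rule and $\tanh'(a) = 1 - \tanh^2(a)$:

$$\frac{\partial E}{\partial w_j} = -\sum_{i=1}^{N} (y_i - \hat y_i)(1 - \hat y_i^2)\, x_{ij}, \qquad \frac{\partial E}{\partial b} = -\sum_{i=1}^{N} (y_i - \hat y_i)(1 - \hat y_i^2),$$

so a batch gradient-descent step with learning rate $\eta$ is

$$w_j \leftarrow w_j + \eta \sum_{i=1}^{N} (y_i - \hat y_i)(1 - \hat y_i^2)\, x_{ij}, \qquad b \leftarrow b + \eta \sum_{i=1}^{N} (y_i - \hat y_i)(1 - \hat y_i^2).$$

The Python below implements that rule directly. The function names and the toy AND data are illustrative assumptions, not part of the question.

```python
import math


def predict(w, b, x):
    """Output of a tanh perceptron: tanh(w . x + b)."""
    a = sum(wj * xj for wj, xj in zip(w, x)) + b
    return math.tanh(a)


def loss(w, b, X, Y):
    """Squared-error loss E = 1/2 * sum_i (y_i - tanh(w . x_i + b))^2."""
    return 0.5 * sum((y - predict(w, b, x)) ** 2 for x, y in zip(X, Y))


def gradient_step(w, b, X, Y, eta):
    """One batch gradient-descent step on the squared-error loss.

    Returns the updated (w, b); the inputs are not modified.
    """
    neg_grad_w = [0.0] * len(w)  # accumulates -dE/dw_j
    neg_grad_b = 0.0             # accumulates -dE/db
    for x, y in zip(X, Y):
        out = predict(w, b, x)
        # (y_i - out_i) * tanh'(a_i), using tanh'(a) = 1 - tanh(a)^2
        delta = (y - out) * (1.0 - out * out)
        for j, xj in enumerate(x):
            neg_grad_w[j] += delta * xj
        neg_grad_b += delta
    # Moving against the gradient means adding eta times the negative gradient.
    new_w = [wj + eta * gj for wj, gj in zip(w, neg_grad_w)]
    new_b = b + eta * neg_grad_b
    return new_w, new_b


if __name__ == "__main__":
    # Hypothetical toy data: logical AND with targets in {-1, +1}.
    X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    Y = [-1.0, -1.0, -1.0, 1.0]
    w, b = [0.0, 0.0], 0.0
    for epoch in range(200):
        w, b = gradient_step(w, b, X, Y, eta=0.1)
    print("weights:", w, "bias:", b, "loss:", loss(w, b, X, Y))
```

Updating after each example instead of summing over all N examples gives the stochastic (online) version of the same rule.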

Show the mathematical working of an Artificial Neural Network by taking the case shown in the figure. The first two columns are the input values for $X_1$ and $X_2$, and the third column is the desired output. Show only two iterations.

Learning rate: $\eta$

Threshold: $\theta$

Actual output $= W_1X_1 + W_2X_2$

Next weight adjustment: $W_n \leftarrow W_n + \Delta W_n$

Change in weight: $\Delta W_n = \eta \times (\text{desired output} - \text{actual output}) \times X_n$

Show two complete iterations for acquiring the desired output.

| X1 | X2 | W1 | W2 | D | Y | W1 (new) | W2 (new) |
|----|----|----|----|---|---|----------|----------|
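A minimal sketch of the two-iteration procedure in Python. It assumes a step activation (actual output 1 when $W_1X_1 + W_2X_2$ reaches the threshold, else 0), since the question only gives the weighted sum. The training data (logical AND), learning rate, threshold and initial weights below are hypothetical placeholders, because the values from the figure are not available here. Each recorded row follows the column order X1, X2, W1, W2, D, Y, W1 (new), W2 (new).

```python
def actual_output(w, x, threshold):
    """Step activation (assumed): 1 if W1*X1 + W2*X2 >= threshold, else 0."""
    net = sum(wn * xn for wn, xn in zip(w, x))
    return 1 if net >= threshold else 0


def train(samples, w, eta, threshold, iterations=2):
    """Run `iterations` passes over the samples, updating after every sample.

    Each recorded row is (X1, X2, W1, W2, D, Y, W1 new, W2 new).
    """
    w = list(w)
    rows = []
    for _ in range(iterations):
        for x, d in samples:
            y = actual_output(w, x, threshold)
            # Change in weight: delta W_n = eta * (D - Y) * X_n
            new_w = [wn + eta * (d - y) * xn for wn, xn in zip(w, x)]
            rows.append((*x, *w, d, y, *new_w))
            w = new_w
    return w, rows


if __name__ == "__main__":
    # Hypothetical placeholders; replace with the values from the figure.
    samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    final_w, rows = train(samples, w=[0.5, 0.0], eta=0.5, threshold=1.0)
    print("X1  X2  W1   W2   D  Y  W1'  W2'")
    for row in rows:
        print("  ".join(str(v) for v in row))
    print("final weights:", final_w)
```

With these placeholder values, the first iteration raises both weights on the (1, 1) sample, the second iteration lowers $W_1$ on the (1, 0) sample, and the weights settle at $W_1 = W_2 = 0.5$, which classify all four samples correctly. Substituting the figure's inputs, targets, learning rate and threshold gives the rows the question asks for.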
