Question: Do not copy-paste anything from Google or any previous solutions. Kindly write in your own words. The answer should be within 50 to 60 words.


The answer should be based on the paragraph below. I will upvote after getting the answer :) Thanks!

-----------------------------------------------------------------------------------------------------

Question:

How do the authors show in the paper that, under relatively weak assumptions, recurrence in RNNs, and consequently the inference cost, can be reduced significantly?

-----------------------------------------------------------------------------------------------------

Paragraph:

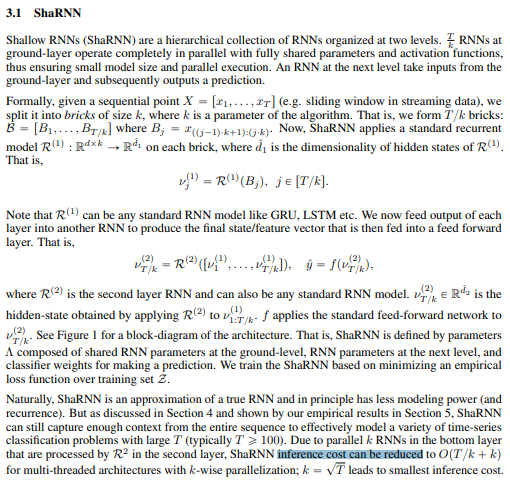

3.1 ShaRNN

Shallow RNNs (ShaRNN) are a hierarchical collection of RNNs organized at two levels. RNNs at the ground level operate completely in parallel with fully shared parameters and activation functions, which keeps the model small and allows parallel execution. An RNN at the next level takes inputs from the ground level and then outputs a prediction.

Formally, given a sequential point $X = [x_1, \dots, x_T]$ (e.g. a sliding window in streaming data), we split it into bricks of size $k$, where $k$ is a parameter of the algorithm. That is, we form $T/k$ bricks: $B = [B_1, \dots, B_{T/k}]$, where $B_j = [x_{(j-1)k+1}, \dots, x_{jk}]$. ShaRNN then applies a standard recurrent model $R^{(1)} : \mathbb{R}^{d \times k} \to \mathbb{R}^{d_1}$ to each brick, where $d_1$ is the dimensionality of the hidden states of $R^{(1)}$. That is, $\nu_j^{(1)} = R^{(1)}(B_j)$ for $j \in [T/k]$. Note that $R^{(1)}$ can be any standard RNN model, such as a GRU or LSTM. We then feed the output for each brick into another RNN to produce the final state/feature vector, which is fed into a feed-forward layer. That is, $\nu_{T/k}^{(2)} = R^{(2)}([\nu_1^{(1)}, \dots, \nu_{T/k}^{(1)}])$ and $\hat{y}(X) = f(\nu_{T/k}^{(2)})$, where $R^{(2)}$ is the second-layer RNN and can also be any standard RNN model. $\nu^{(2)} \in \mathbb{R}^{d_2}$ is the hidden state obtained by applying $R^{(2)}$ to $\nu^{(1)}$, and $f$ applies a standard feed-forward network to $\nu^{(2)}$. See Figure 1 for a block diagram of the architecture.

Thus, ShaRNN is defined by parameters $\Lambda$ composed of the shared RNN parameters at the ground level, the RNN parameters at the next level, and the classifier weights used to make a prediction. We train ShaRNN by minimizing an empirical loss function over the training set $\mathcal{D}$. Naturally, ShaRNN is an approximation of a true RNN and in principle has less modeling power (and recurrence). But as discussed in Section 4 and shown by our empirical results in Section 5, ShaRNN can still capture enough context from the entire sequence to effectively model a variety of time-series classification problems with large $T$ (typically $T > 100$).
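The two-level structure described above can be sketched in plain Python. This is a minimal, untrained sketch under stated assumptions, not the authors' implementation: a plain tanh RNN cell stands in for $R^{(1)}$ and $R^{(2)}$ (the paragraph allows any standard RNN, such as a GRU or LSTM), $f$ is assumed to be a single linear layer, the weights are random, $k$ is assumed to divide $T$, and all function names (`make_rnn`, `run_rnn`, `split_into_bricks`, `sharnn_forward`) are hypothetical.

```python
import math
import random


def make_rnn(in_dim, hid_dim, seed):
    """Random weights (W, U, b) for a plain tanh RNN cell (assumed stand-in for a GRU/LSTM)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(hid_dim)]
    U = [[rng.uniform(-0.5, 0.5) for _ in range(hid_dim)] for _ in range(hid_dim)]
    b = [0.0] * hid_dim
    return W, U, b


def run_rnn(params, seq):
    """Run the cell over a sequence of input vectors and return the final hidden state."""
    W, U, b = params
    h = [0.0] * len(b)
    for x in seq:
        # h_t = tanh(W x_t + U h_{t-1} + b); the comprehension reads the previous h.
        h = [
            math.tanh(
                sum(W[i][j] * x[j] for j in range(len(x)))
                + sum(U[i][j] * h[j] for j in range(len(h)))
                + b[i]
            )
            for i in range(len(b))
        ]
    return h


def split_into_bricks(X, k):
    """Split X = [x_1, ..., x_T] into T/k bricks B_j = [x_{(j-1)k+1}, ..., x_{jk}]."""
    assert len(X) % k == 0, "this sketch assumes k divides T"
    return [X[j * k:(j + 1) * k] for j in range(len(X) // k)]


def sharnn_forward(X, k, rnn1, rnn2, w_out, b_out):
    """Two-level forward pass: shared R1 on each brick, R2 over the brick outputs, then f."""
    bricks = split_into_bricks(X, k)
    # Ground level: the same R1 parameters on every brick. The calls are independent
    # of one another, so a multi-threaded runtime could run them in parallel.
    nu1 = [run_rnn(rnn1, brick) for brick in bricks]
    # Next level: R2 reads the T/k brick summaries nu_1^(1), ..., nu_{T/k}^(1) as a sequence.
    nu2 = run_rnn(rnn2, nu1)
    # f: assumed here to be a single linear layer returning one score per class.
    return [sum(w * h for w, h in zip(row, nu2)) + bias for row, bias in zip(w_out, b_out)]


# Usage: T = 16 steps of d = 3 features, bricks of size k = 4 (so 4 ground-level RNN runs).
d, d1, d2, T, k = 3, 4, 5, 16, 4
rng = random.Random(0)
X = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(T)]
rnn1 = make_rnn(d, d1, seed=1)
rnn2 = make_rnn(d1, d2, seed=2)
w_out = [[0.1] * d2, [-0.1] * d2]  # 2 classes
scores = sharnn_forward(X, k, rnn1, rnn2, w_out, [0.0, 0.0])
print(len(scores))  # prints 2
```

Keeping a single set of weights for every ground-level brick mirrors the "fully shared parameters" in the text: the model size does not grow with $T/k$, only the number of independent calls does.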
Because the $T/k$ RNNs in the bottom layer run in parallel and their outputs are then processed by $R^{(2)}$ in the second layer, ShaRNN's inference cost can be reduced to $O(T/k + k)$ on multi-threaded architectures with $T/k$-wise parallelization; choosing $k = \sqrt{T}$ gives the smallest inference cost, $O(\sqrt{T})$.
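The cost claim can be checked numerically. This sketch assumes an idealized cost model that the paragraph implies but does not spell out: one time unit per RNN-cell update, enough threads to run all $T/k$ ground-level RNNs at once, and no cost for thread scheduling or for the final feed-forward layer. Under that model the ground level takes $k$ sequential steps and $R^{(2)}$ takes $T/k$, so the total is $k + T/k$; setting the derivative $1 - T/k^2$ to zero gives $k = \sqrt{T}$ and a cost of $2\sqrt{T}$, versus $T$ for a standard single-layer RNN. The function names are hypothetical.

```python
import math


def sharnn_sequential_steps(T, k):
    """Sequential RNN-cell updates for ShaRNN under the assumed idealized cost model.

    All T/k ground-level RNNs run at once (k steps each), then R2 runs T/k steps.
    """
    assert T % k == 0, "this sketch assumes k divides T"
    return k + T // k


def best_brick_size(T):
    """Divisor k of T that minimizes k + T/k (ties go to the smaller k)."""
    divisors = [k for k in range(1, T + 1) if T % k == 0]
    return min(divisors, key=lambda k: sharnn_sequential_steps(T, k))


for T in (100, 256, 1024):
    k = best_brick_size(T)
    # A standard single-layer RNN needs T sequential updates.
    print(f"T={T}: standard RNN {T} steps, ShaRNN with k={k}: "
          f"{sharnn_sequential_steps(T, k)} steps (2*sqrt(T) = {2 * math.sqrt(T):.0f})")
```

For $T = 100$ this picks $k = 10$ and needs 20 sequential steps instead of 100, which matches the $k = \sqrt{T}$ choice in the paragraph.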
