Question: E Considering the following neural network with inputs (x1, x2), outputs (21, 22, 23), and parameters = (a, b, c, d, e, f, i,

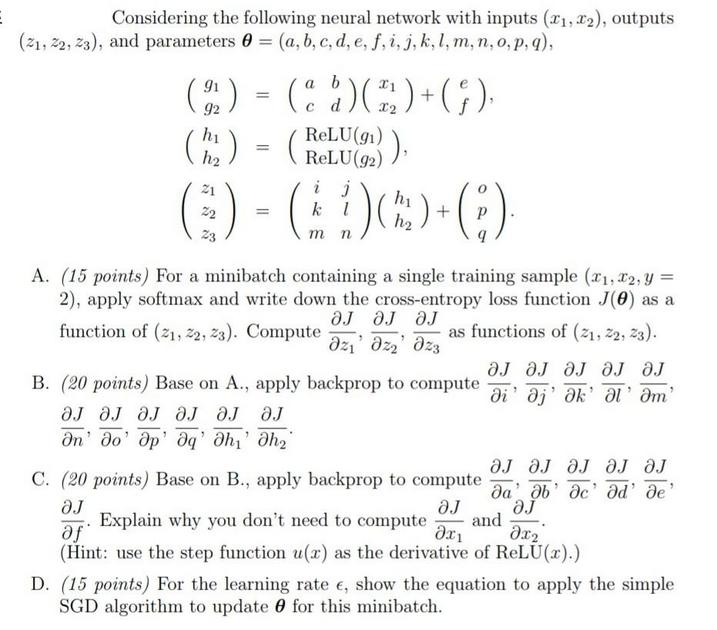

E Considering the following neural network with inputs (x1, x2), outputs (21, 22, 23), and parameters = (a, b, c, d, e, f, i, j, k, l, m, n, o, p, q), 91 a b (2) = ( ))+(}). 92 == (h) = 21 22 = 23 C ReLU(91)), ReLU(92) i j k l 6)-(490-8 m n + P h2 A. (15 points) For a minibatch containing a single training sample (x1, x2, y = 2), apply softmax and write down the cross-entropy loss function J(6) as a function of (21, 22, 23). Compute as functions of (21, 22, 23). 3 B. (20 points) Base on A., apply backprop to compute On do' Op' Oq h' h , ai' aj ak' al' m' C. (20 points) Base on B., apply backprop to compute af Explain why you don't need to compute and x1 (Hint: use the step function u(x) as the derivative of ReLU(x).) D. (15 points) For the learning rate e, show the equation to apply the simple SGD algorithm to update 0 for this minibatch.

Step by Step Solution

3.47 Rating (154 Votes )

There are 3 Steps involved in it

A For a single training sample x1 x2 y 2 we first calculate the outputs z1 z2 z3 using the given neu... View full answer

Get step-by-step solutions from verified subject matter experts