Question: Example 2 The linear regression model is estimated by minimizing the sum of squared residuals from fitting a linear model Bo +iBi + to the

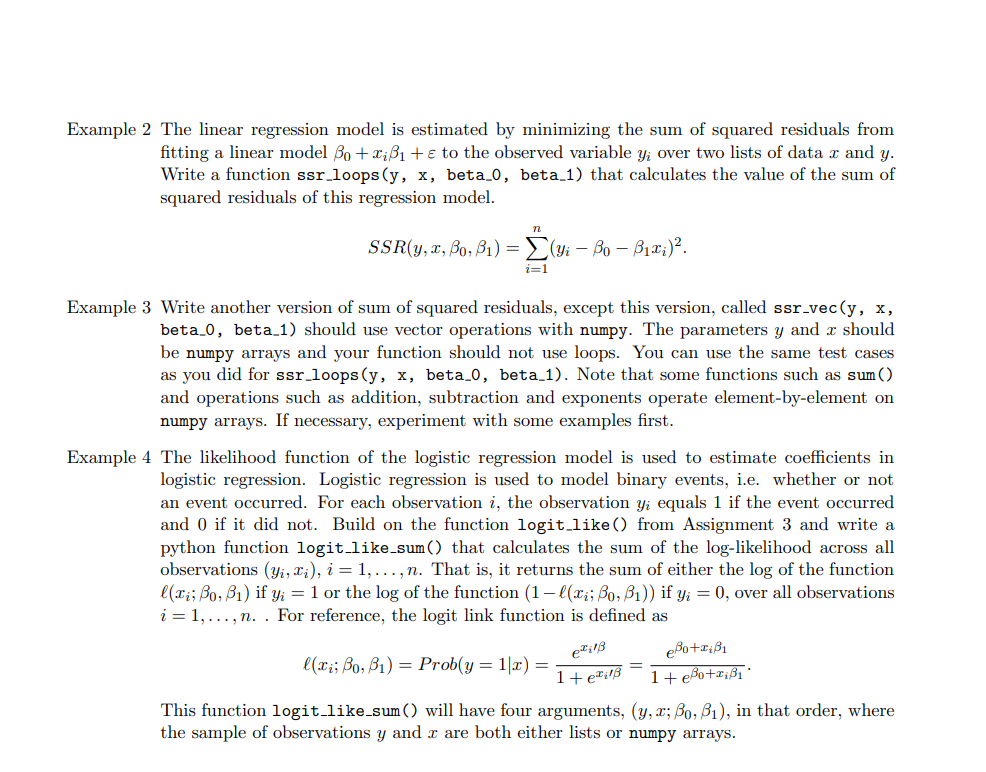

Example 2

The linear regression model is estimated by minimizing the sum of squared residuals from fitting a linear model $\beta_0 + x_i\beta_1 + \varepsilon_i$ to the observed variable $y_i$ over two lists of data, $x$ and $y$. Write a function ssr_loops(y, x, beta_0, beta_1) that calculates the value of the sum of squared residuals of this regression model:

$$SSR(y, x, \beta_0, \beta_1) = \sum_{i=1}^{n} (y_i - \beta_0 - \beta_1 x_i)^2$$

Example 3

Write another version of the sum of squared residuals, except that this version, called ssr_vec(y, x, beta_0, beta_1), should use vector operations with numpy. The parameters y and x should be numpy arrays, and your function should not use loops. You can use the same test cases as you did for ssr_loops(y, x, beta_0, beta_1). Note that operations such as addition, subtraction and exponentiation act element-by-element on numpy arrays, and functions such as sum() accept numpy arrays directly. If necessary, experiment with some examples first.

Example 4

The likelihood function of the logistic regression model is used to estimate its coefficients. Logistic regression models binary events, i.e. whether or not an event occurred: for each observation $i$, $y_i$ equals 1 if the event occurred and 0 if it did not. Build on the function logit_like() from Assignment 3 and write a Python function logit_like_sum() that calculates the sum of the log-likelihood across all observations $(y_i, x_i)$, $i = 1, \ldots, n$. That is, it returns the sum over all observations $i = 1, \ldots, n$ of either $\log \ell(x_i; \beta_0, \beta_1)$ if $y_i = 1$, or $\log\left(1 - \ell(x_i; \beta_0, \beta_1)\right)$ if $y_i = 0$. For reference, the logit link function is defined as

$$\ell(x_i; \beta_0, \beta_1) = \Pr(y_i = 1 \mid x_i) = \frac{e^{\beta_0 + x_i\beta_1}}{1 + e^{\beta_0 + x_i\beta_1}}$$

The function logit_like_sum() takes four arguments, (y, x, beta_0, beta_1), in that order, where the samples of observations y and x are either lists or numpy arrays.
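
A minimal sketch of ssr_loops for Example 2, assuming y and x are equal-length sequences of numbers. It walks the pairs (y_i, x_i) with an explicit loop and accumulates each squared residual y_i - beta_0 - beta_1 * x_i. The test data below is made up for illustration.

```python
def ssr_loops(y, x, beta_0, beta_1):
    """Sum of squared residuals of y_i = beta_0 + beta_1 * x_i, using a loop."""
    if len(y) != len(x):
        raise ValueError("y and x must have the same length")
    total = 0.0
    for y_i, x_i in zip(y, x):
        residual = y_i - beta_0 - beta_1 * x_i  # observed minus fitted value
        total += residual ** 2
    return total


# Hypothetical test data: y = 1 + 2x exactly
y = [1.0, 3.0, 5.0]
x = [0.0, 1.0, 2.0]
print(ssr_loops(y, x, 1.0, 2.0))  # exact fit, prints 0.0
print(ssr_loops(y, x, 0.0, 2.0))  # every residual is 1, prints 3.0
```

Checking a case where the line fits exactly (SSR = 0) and one where every residual is known by hand is a quick way to build test cases that can be reused for the vectorized version.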
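
For Example 3, one possible ssr_vec sketch: the whole residual vector is built in a single element-wise numpy expression and then reduced with np.sum, so no Python loop is needed. The np.asarray conversion is an addition of this sketch that also lets plain lists through; it can be dropped if the assignment requires strictly numpy input.

```python
import numpy as np


def ssr_vec(y, x, beta_0, beta_1):
    """Sum of squared residuals using numpy vector operations (no loops)."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    residuals = y - beta_0 - beta_1 * x  # element-wise over all observations
    return np.sum(residuals ** 2)        # reduce to a single number


# Same hypothetical test data as a loop-based check
y = np.array([1.0, 3.0, 5.0])
x = np.array([0.0, 1.0, 2.0])
print(ssr_vec(y, x, 1.0, 2.0))  # prints 0.0
print(ssr_vec(y, x, 0.0, 2.0))  # prints 3.0
```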

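A minimal sketch for Example 4. The question does not show logit_like() from Assignment 3, so the version below is a hypothetical stand-in that returns ℓ(x_i; β0, β1) element-wise; substitute your own implementation. logit_like_sum then selects log ℓ where y_i = 1 and log(1 − ℓ) where y_i = 0 using np.where, and sums the results. Both lists and numpy arrays are accepted.

```python
import numpy as np


def logit_like(x, beta_0, beta_1):
    # Hypothetical stand-in for logit_like() from Assignment 3:
    # Prob(y = 1 | x) under the logistic model, evaluated element-wise.
    z = beta_0 + beta_1 * np.asarray(x, dtype=float)
    return 1.0 / (1.0 + np.exp(-z))  # algebraically equal to e^z / (1 + e^z)


def logit_like_sum(y, x, beta_0, beta_1):
    """Sum of the log-likelihood over all observations (y_i, x_i)."""
    y = np.asarray(y, dtype=float)
    p = logit_like(x, beta_0, beta_1)
    # log(p) where y_i = 1, log(1 - p) where y_i = 0
    contributions = np.where(y == 1, np.log(p), np.log(1.0 - p))
    return np.sum(contributions)


# Hypothetical data: with beta_0 = beta_1 = 0 every probability is 0.5,
# so the result equals 4 * log(0.5)
y = [1, 0, 1, 0]
x = [0.5, -1.0, 2.0, 0.0]
print(logit_like_sum(y, x, 0.0, 0.0))
```

A design note: with z_i = β0 + β1 x_i, log ℓ = z_i − log(1 + e^{z_i}) and log(1 − ℓ) = −log(1 + e^{z_i}), so the same sum can be written as Σ[y_i z_i − log(1 + e^{z_i})]. Computing log(1 + e^{z_i}) with np.logaddexp(0, z) avoids taking log(0) when |z_i| is large, which the direct form above can run into.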