Question: Exercise 11 (5 points) Let P and Q be two probability distributions over the same sample space. The KL-divergence is a measure of how different

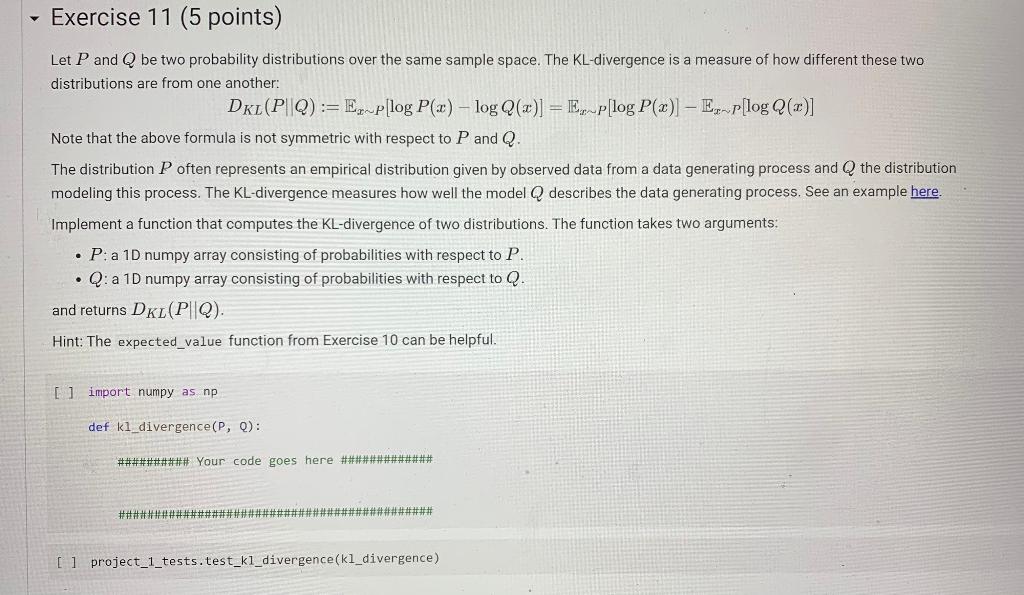

Exercise 11 (5 points)

Let P and Q be two probability distributions over the same sample space. The KL-divergence is a measure of how different these two distributions are from one another:

$$D_{KL}(P \,\|\, Q) := \mathbb{E}_{x \sim P}[\log P(x) - \log Q(x)] = \mathbb{E}_{x \sim P}[\log P(x)] - \mathbb{E}_{x \sim P}[\log Q(x)]$$

Note that the above formula is not symmetric with respect to P and Q. The distribution P often represents an empirical distribution given by observed data from a data generating process, and Q the distribution modeling this process. The KL-divergence measures how well the model Q describes the data generating process. See an example here.

Implement a function that computes the KL-divergence of two distributions. The function takes two arguments:

- P: a 1D numpy array consisting of probabilities with respect to P.
- Q: a 1D numpy array consisting of probabilities with respect to Q.

and returns $D_{KL}(P \,\|\, Q)$.

Hint: The expected_value function from Exercise 10 can be helpful.

    import numpy as np

    def kl_divergence(P, Q):
        ########## Your code goes here ##########

        #########################################

    project_1_tests.test_kl_divergence(kl_divergence)

Step by Step Solution
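One possible way to approach the exercise is sketched below. It rests on several assumptions that the question does not confirm: the `expected_value` helper from Exercise 10 is not shown, so a hypothetical stand-in with the signature `expected_value(values, probabilities)` is defined here; the logarithm is taken to be the natural log; terms with P(x) = 0 are skipped under the usual convention 0 · log 0 = 0; and the result is reported as infinity when Q(x) = 0 at a point where P(x) > 0. The course's `project_1_tests` module may expect different conventions.

```python
import numpy as np


def expected_value(values, probabilities):
    # Hypothetical stand-in for the Exercise 10 helper (its real signature
    # is not given in the question): sum of values weighted by probabilities.
    values = np.asarray(values, dtype=float)
    probabilities = np.asarray(probabilities, dtype=float)
    return float(np.sum(values * probabilities))


def kl_divergence(P, Q):
    # Accept lists as well as numpy arrays.
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)

    # Assumed convention: outcomes with P(x) = 0 contribute nothing.
    support = P > 0

    # Assumed convention: the divergence is infinite if Q assigns zero
    # probability to an outcome that P considers possible.
    if np.any(Q[support] == 0):
        return float("inf")

    # E_{x~P}[log P(x) - log Q(x)], using the natural logarithm.
    log_difference = np.log(P[support]) - np.log(Q[support])
    return expected_value(log_difference, P[support])
```

With this sketch, two identical distributions give a divergence of 0, and swapping the arguments generally changes the result, which matches the asymmetry noted in the exercise.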
