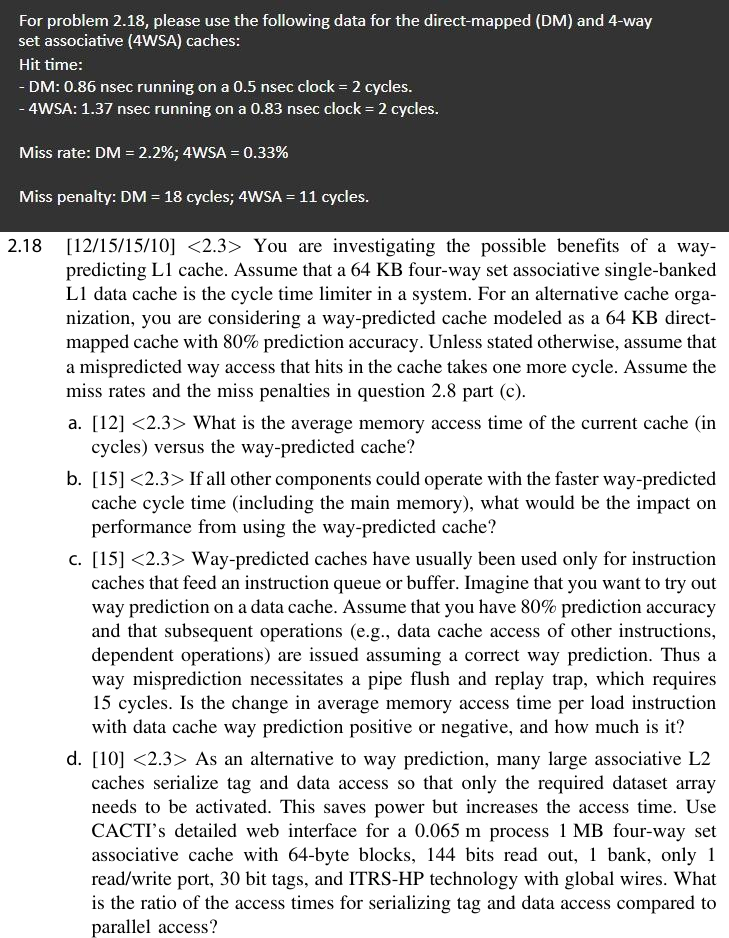

Question: For problem 2.18, please use the following data for the direct-mapped (DM) and 4-way set associative (WSA) caches:

Hit time:

DM: nsec running on a nsec clock cycles.

WSA: nsec running on a nsec clock cycles.

Miss rate: ;WSA

Miss penalty: DM cycles; WSA cycles.

You are investigating the possible benefits of a way-predicting L cache. Assume that a KB four-way set associative single-banked L data cache is the cycle time limiter in a system. For an alternative cache organization, you are considering a way-predicted cache modeled as a direct-mapped cache with prediction accuracy. Unless stated otherwise, assume that a mispredicted way access that hits in the cache takes one more cycle. Assume the miss rates and the miss penalties in question part c.

a: What is the average memory access time of the current cache in cycles versus the way-predicted cache?
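A minimal sketch of how part a could be set up, assuming the standard definition of average memory access time (hit time plus miss rate times miss penalty) for the current cache. For the way-predicted cache it assumes one possible model: a correctly predicted hit costs the direct-mapped hit time, a mispredicted hit costs extra cycles on top of that, and a miss costs the miss penalty. The function names, parameter names, and the choice of model are illustrative assumptions, and the problem's own hit times, miss rates, and penalties still have to be supplied.

```python
def amat(hit_cycles, miss_rate, miss_penalty_cycles):
    """Average memory access time in cycles: hit time plus expected miss cost."""
    return hit_cycles + miss_rate * miss_penalty_cycles


def way_predicted_amat(hit_cycles, miss_rate, miss_penalty_cycles,
                       accuracy, mispredict_extra_cycles=1):
    """AMAT in cycles for a way-predicted cache under an assumed three-case model.

    Cases: hit with correct prediction, hit with wrong prediction
    (hit time plus extra cycles), and miss (charged the miss penalty).
    """
    correct_hit = accuracy * hit_cycles
    wrong_hit = (1.0 - accuracy) * (hit_cycles + mispredict_extra_cycles)
    hit_cost = correct_hit + wrong_hit
    return (1.0 - miss_rate) * hit_cost + miss_rate * miss_penalty_cycles
```

Note that the two functions treat a miss slightly differently (the first adds the penalty on top of the hit time, the second charges only the penalty), so whichever convention the course uses should be applied to both caches before comparing them.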

b: If all other components could operate with the faster way-predicted cache cycle time (including the main memory), what would be the impact on performance from using the way-predicted cache?

c: Way-predicted caches have usually been used only for instruction caches that feed an instruction queue or buffer. Imagine that you want to try out way prediction on a data cache. Assume that you have prediction accuracy and that subsequent operations (e.g., data cache access of other instructions, dependent operations) are issued assuming a correct way prediction. Thus, a way misprediction necessitates a pipe flush and replay trap, which requires cycles. Is the change in average memory access time per load instruction with data cache way prediction positive or negative, and how much is it?
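One way to reason about part c, sketched under the assumption that only the cost of a mispredicted hit changes (from the one extra cycle used earlier to the flush-and-replay cost) while the hit time, miss rate, and miss penalty stay the same. The function and parameter names are hypothetical, and the problem's actual accuracy, miss rate, and flush cost must be plugged in.

```python
def amat_change_per_load(accuracy, miss_rate,
                         old_mispredict_cycles, new_mispredict_cycles):
    """Change in AMAT (cycles) when the cost of a mispredicted hit changes.

    Only accesses that hit in the cache but mispredict the way are affected,
    so the change is their frequency times the difference in extra cycles.
    A positive result means the average access gets slower.
    """
    mispredicted_hit_fraction = (1.0 - miss_rate) * (1.0 - accuracy)
    return mispredicted_hit_fraction * (new_mispredict_cycles - old_mispredict_cycles)
```

Because the flush cost is larger than one cycle, this difference is positive under these assumptions, meaning the average access time per load increases.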

d: As an alternative to way prediction, many large associative L caches serialize tag and data access so that only the required data-set array needs to be activated. This saves power but increases the access time. Use CACTI's detailed web interface for a process four-way set associative cache with byte blocks, bits read out, bank, only read/write port, bit tags, and ITRS-HP technology with global wires. What is the ratio of the access times for serializing tag and data access compared to parallel access?
