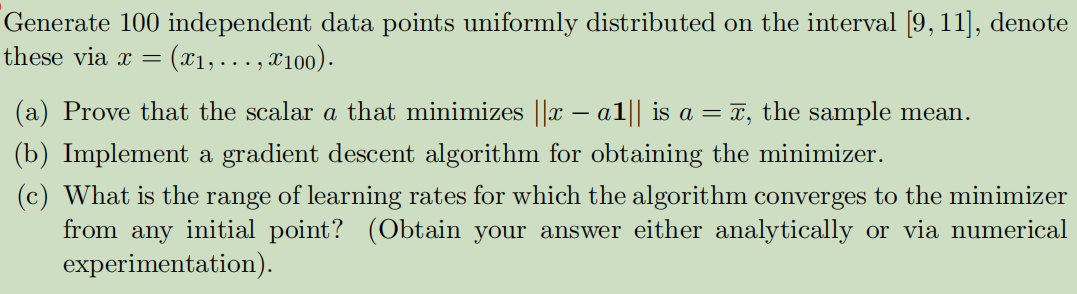

Question: Generate 100 independent data points uniformly distributed on the interval [9, 11], and denote them by $x = (x_1, \ldots, x_{100})$. (a) Prove that the scalar $a$ that minimizes $\lVert x - a\mathbf{1} \rVert$ is $a = \bar{x}$, the sample mean. (b) Implement a gradient descent algorithm for obtaining the minimizer. (c) What is the range of learning rates for which the algorithm converges to the minimizer from any initial point? (Obtain your answer either analytically or via numerical experimentation.)
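
One way parts (b) and (c) could be approached is sketched below. It assumes the objective is the squared Euclidean norm $f(a) = \sum_i (x_i - a)^2$, which has the same minimizer as the norm itself. The function name `gradient_descent_mean`, the seed, the starting point, and the step count are illustrative choices, not part of the question. Under that assumption $f'(a) = 2n(a - \bar{x})$, so setting the derivative to zero gives $a = \bar{x}$, and each gradient step multiplies the error $a - \bar{x}$ by $(1 - 2n\eta)$. That suggests convergence from any initial point exactly when $0 < \eta < 1/n$, i.e. $0 < \eta < 0.01$ for $n = 100$. A different scaling of the objective (for example the mean squared error) would shift this range.

```python
import random


def gradient_descent_mean(x, lr, a0=0.0, steps=200):
    """Minimize f(a) = sum((x_i - a)^2) by plain gradient descent.

    Assumption: squared-norm objective; lr is the learning rate (eta).
    Returns the value of a after `steps` updates.
    """
    n = len(x)
    total = sum(x)
    a = a0
    for _ in range(steps):
        grad = 2.0 * (n * a - total)  # f'(a) = -2 * sum(x_i - a)
        a -= lr * grad
    return a


random.seed(0)  # arbitrary seed, only so the run is repeatable
x = [random.uniform(9.0, 11.0) for _ in range(100)]
x_bar = sum(x) / len(x)

# Learning rate inside (0, 1/n) = (0, 0.01): error shrinks by a factor 0.2 per step.
a_good = gradient_descent_mean(x, lr=0.004)

# Learning rate above 1/n: error is multiplied by -1.2 per step and grows.
a_bad = gradient_descent_mean(x, lr=0.011)

print("sample mean:        ", x_bar)
print("lr=0.004 estimate:  ", a_good)
print("lr=0.011 |error|:   ", abs(a_bad - x_bar))
```

Sweeping `lr` over a grid around 0.01 and checking whether the error shrinks would be one way to confirm the boundary numerically rather than analytically.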
