Question: Given a simplified GRU with input x , output y , and t indicates time. The forget gate at time t is calculated as: f_(t)=ux_(t)+vh_(t-1)

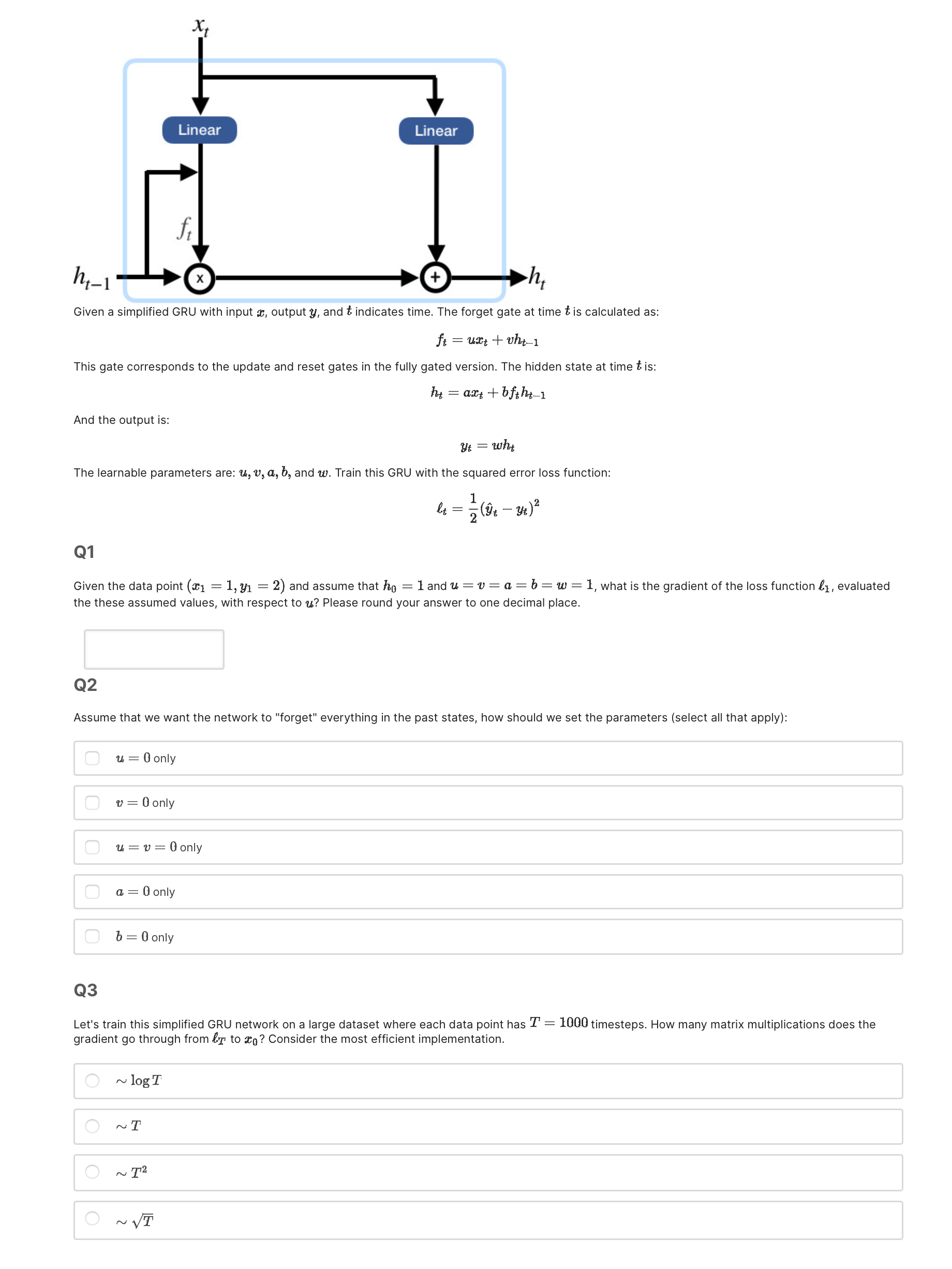

Given a simplified GRU with input

x, output

y, and t indicates time. The forget gate at time t is calculated as:\

f_(t)=ux_(t)+vh_(t-1)\ This gate corresponds to the update and reset gates in the fully gated version. The hidden state at time

tis:\

h_(t)=ax_(t)+bf_(t)h_(b-1)\ And the output is:\

y_(t)=wh_(t)\ The learnable parameters are:

u_(,)v_(,)a,b_(3), and

w. Train this GRU with the squared error loss function:\

l_(t)=(1)/(2)(hat(y)_(t)-y_(t))^(2)\ Part 1\ Given the data point

(x_(1)=1,y_(1)=2)and assume that

h_(0)=1and

u=v=a=b=w=1, what is the gradient of the loss function

l_(1), evaluated\ the these assumed values, with respect to

u? Please round your answer to one decimal place.\ Assume that we want the network to "forget" everything in the past states, how should we set the parameters (select all that apply):\

u=0 only \

v=0only\

u=v=0only\

a=0only\

vec(b)=0 only \ Q3\ Let's train this simplified GRU network on a large dataset where each data point has

T=1000timesteps. How many matrix multiplications does the\ gradient go through from

l_(T)to

x_(0)? Consider the most efficient implementation.\

logT\

T\

T^(2)\

\\\\sqrt(T)

ft=uxt+vht1 This gate corresponds to the update and reset gates in the fully gated version. The hidden state at time t is: ht=axt+bftht1 And the output is: yt=wht The learnable parameters are: u,v,a,b, and w. Train this GRU with the squared error loss function: t=21(y^tyt)2 Q1 Given the data point (x1=1,y1=2) and assume that h0=1 and u=v=a=b=w=1, what is the gradient of the loss function 1, evaluated the these assumed values, with respect to u ? Please round your answer to one decimal place. Q2 Assume that we want the network to "forget" everything in the past states, how should we set the parameters (select all that apply): u=0only v=0 only u=v=0 only a=0 only b=0 only Q3 Let's train this simplified GRU network on a large dataset where each data point has T=1000 timesteps. How many matrix multiplications does the gradient go through from T to x0 ? Consider the most efficient implementation. logT T T2 T

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts