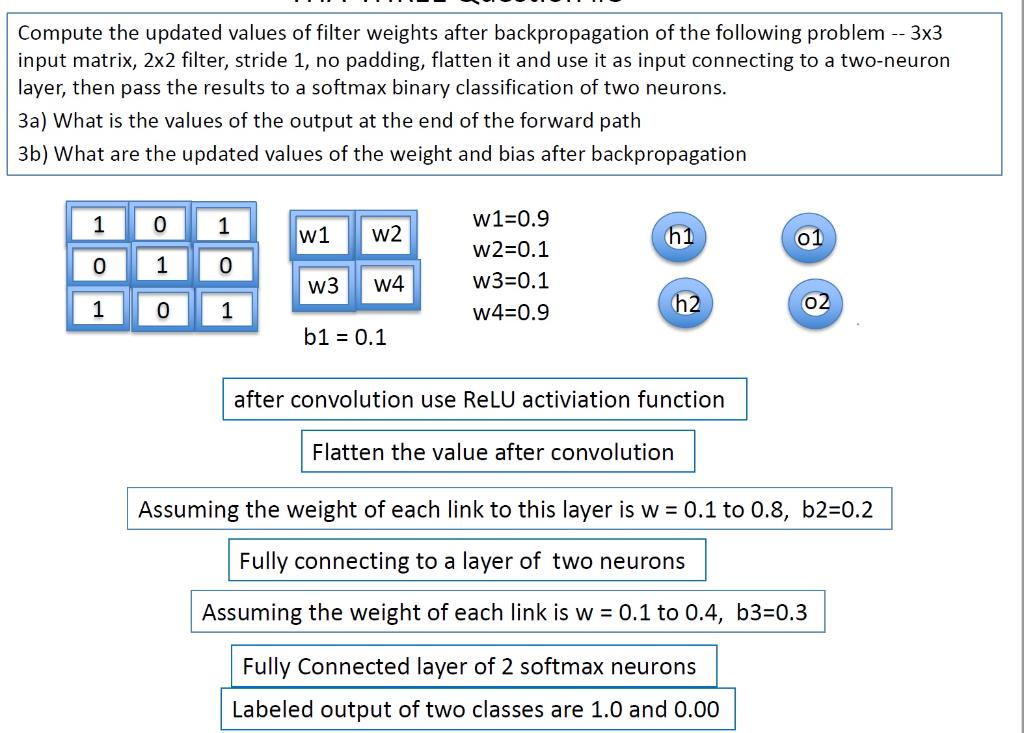

Given the NN shown above: a 3x3 input matrix, a 2x2 filter, stride 1, no padding, and the ReLU activation function. Flatten the convolution output and use it as the input to a two-neuron layer, then pass the results to a softmax binary classification layer of two neurons.
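
The convolution stage can be sketched in plain Python as follows. This is a minimal sketch, assuming the cross-correlation convention used by most CNN libraries (the filter is not flipped), a single bias b1 shared by every output position, and the filter values from the problem data (w1 = 0.9, w2 = 0.1, w3 = 0.1, w4 = 0.9, b1 = 0.1). The input matrix `X` is a hypothetical placeholder, because the real values are only in the figure. With a 3x3 input, a 2x2 filter, stride 1 and no padding, each side of the output is (3 - 2)/1 + 1 = 2, so the feature map is 2x2 and flattens to 4 values.

```python
def conv2d_valid(x, k, bias, stride=1):
    """2D cross-correlation with no padding ("valid"), plus one shared bias.

    Assumes a square input and a square filter.
    """
    n, f = len(x), len(k)
    out_size = (n - f) // stride + 1  # (3 - 2) // 1 + 1 = 2
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            r, c = i * stride, j * stride
            s = sum(x[r + a][c + b] * k[a][b] for a in range(f) for b in range(f))
            row.append(s + bias)
        out.append(row)
    return out


def relu(v):
    return max(0.0, v)


def relu_flatten(fmap):
    """Apply ReLU element-wise, then flatten the feature map row by row."""
    return [relu(v) for row in fmap for v in row]


# Filter from the problem data: [[w1, w2], [w3, w4]] and bias b1.
K = [[0.9, 0.1], [0.1, 0.9]]
B1 = 0.1
# HYPOTHETICAL input: substitute the 3x3 matrix shown in the figure.
X = [[1, 0, 1],
     [0, 1, 0],
     [1, 0, 1]]

fmap = conv2d_valid(X, K, B1)
flat = relu_flatten(fmap)
print(fmap)  # 2x2 feature map
print(flat)  # 4 values fed to the two-neuron layer
```

For the hypothetical `X`, the flattened vector is approximately [1.9, 0.3, 0.3, 1.9]; every entry is positive, so ReLU leaves it unchanged.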

3a) (0.5%) What are the values of the output at the end of the forward path?
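
A minimal forward-pass sketch for 3a, in plain Python. Several details are not fixed by the problem text and are assumptions here: the link weights "0.1 to 0.8" are taken as 0.1, 0.2, ..., 0.8, with h1 receiving the first four and h2 the last four; the output weights "0.1 to 0.4" are split as o1 ← (0.1, 0.2) and o2 ← (0.3, 0.4); b2 and b3 are each shared by both neurons of their layer; the two-neuron layer has no activation, since the problem only specifies ReLU after the convolution; and the input matrix `X` is a hypothetical placeholder for the one in the figure. The helper names are illustrative.

```python
import math


def conv2d_valid(x, k, bias):
    """Stride-1, no-padding 2D cross-correlation with one shared bias."""
    n, f = len(x), len(k)
    m = n - f + 1
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(f) for b in range(f)) + bias
             for j in range(m)] for i in range(m)]


def dense(inputs, weights, bias):
    """Fully connected layer: weights[j] holds the incoming weights of neuron j."""
    return [sum(w * v for w, v in zip(row, inputs)) + bias for row in weights]


def softmax(z):
    zmax = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - zmax) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, k, b1, w2, b2, w3, b3):
    fmap = conv2d_valid(x, k, b1)
    flat = [max(0.0, v) for row in fmap for v in row]  # ReLU, then flatten
    h = dense(flat, w2, b2)  # ASSUMPTION: no activation on this layer
    z = dense(h, w3, b3)     # logits of the two softmax neurons
    return flat, h, z, softmax(z)


K = [[0.9, 0.1], [0.1, 0.9]]
B1 = 0.1
W2 = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]  # ASSUMED ordering of 0.1..0.8
B2 = 0.2
W3 = [[0.1, 0.2], [0.3, 0.4]]                      # ASSUMED ordering of 0.1..0.4
B3 = 0.3
X = [[1, 0, 1], [0, 1, 0], [1, 0, 1]]              # HYPOTHETICAL input

flat, h, z, p = forward(X, K, B1, W2, B2, W3, B3)
print("hidden:", h)
print("logits:", z)
print("softmax outputs:", p)
```

With the hypothetical `X` this gives h ≈ [1.30, 3.06], logits ≈ [1.042, 1.914] and softmax outputs ≈ [0.295, 0.705]; the numbers change once the figure's input matrix and the intended weight ordering are substituted.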

3b) (1.5%) What are the updated values of the weight and bias after backpropagation?
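
For 3b, one gradient-descent step can be sketched as below. On top of the forward-pass assumptions (hypothetical input, assumed weight ordering, shared biases, linear two-neuron layer), it assumes a cross-entropy loss on the softmax outputs with targets (1.0, 0.0) and a hypothetical learning rate of 0.1, since the problem does not give one. All gradients are computed from the pre-update weights, and the filter gradient is the cross-correlation of the input with the gradient of the 2x2 feature map. The helper names are illustrative.

```python
import math


def forward(x, k, b1, w2, b2, w3, b3):
    """Forward pass; returns every intermediate needed for backprop."""
    f = len(k)
    m = len(x) - f + 1  # valid, stride-1 output size
    conv = [[sum(x[i + a][j + b] * k[a][b] for a in range(f) for b in range(f)) + b1
             for j in range(m)] for i in range(m)]
    flat = [max(0.0, v) for row in conv for v in row]               # ReLU + flatten
    h = [sum(w * v for w, v in zip(row, flat)) + b2 for row in w2]  # linear (assumed)
    z = [sum(w * v for w, v in zip(row, h)) + b3 for row in w3]
    zmax = max(z)
    e = [math.exp(v - zmax) for v in z]
    total = sum(e)
    p = [v / total for v in e]
    return conv, flat, h, p


def backward(x, k, w2, w3, conv, flat, h, p, target):
    """Gradients of the cross-entropy loss with respect to every weight and bias."""
    dz = [pk - tk for pk, tk in zip(p, target)]  # softmax + cross-entropy
    dw3 = [[dzk * hj for hj in h] for dzk in dz]
    db3 = sum(dz)                                # b3 shared by both outputs
    dh = [sum(w3[o][j] * dz[o] for o in range(len(dz))) for j in range(len(h))]
    dw2 = [[dhj * fi for fi in flat] for dhj in dh]
    db2 = sum(dh)                                # b2 shared by both neurons
    dflat = [sum(w2[j][i] * dh[j] for j in range(len(dh))) for i in range(len(flat))]
    m = len(conv)
    # Undo the flatten and apply the ReLU derivative (1 where the pre-activation > 0).
    dconv = [[dflat[i * m + j] if conv[i][j] > 0 else 0.0 for j in range(m)]
             for i in range(m)]
    f = len(k)
    dk = [[sum(dconv[i][j] * x[i + a][j + b] for i in range(m) for j in range(m))
           for b in range(f)] for a in range(f)]
    db1 = sum(v for row in dconv for v in row)   # b1 shared by all positions
    return dk, db1, dw2, db2, dw3, db3


def sgd(param, grad, lr):
    """One gradient-descent step on a scalar or a nested list of parameters."""
    if isinstance(param, list):
        return [sgd(p_, g_, lr) for p_, g_ in zip(param, grad)]
    return param - lr * grad


K = [[0.9, 0.1], [0.1, 0.9]]
B1 = 0.1
W2 = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]  # ASSUMED ordering
B2 = 0.2
W3 = [[0.1, 0.2], [0.3, 0.4]]                      # ASSUMED ordering
B3 = 0.3
X = [[1, 0, 1], [0, 1, 0], [1, 0, 1]]              # HYPOTHETICAL input
TARGET = [1.0, 0.0]                                # labeled outputs
LR = 0.1                                           # HYPOTHETICAL learning rate

conv, flat, h, p = forward(X, K, B1, W2, B2, W3, B3)
dk, db1, dw2, db2, dw3, db3 = backward(X, K, W2, W3, conv, flat, h, p, TARGET)
# Every update below uses gradients computed from the original weights.
new_K, new_B1 = sgd(K, dk, LR), sgd(B1, db1, LR)
new_W2, new_B2 = sgd(W2, dw2, LR), sgd(B2, db2, LR)
new_W3, new_B3 = sgd(W3, dw3, LR), sgd(B3, db3, LR)
print("updated filter:", new_K, "b1:", new_B1)
print("updated W2:", new_W2, "b2:", new_B2)
print("updated W3:", new_W3, "b3:", new_B3)
```

For the hypothetical input, all four filter gradients come out equal (about 0.254), so with the assumed learning rate the filter becomes roughly [[0.875, 0.075], [0.075, 0.875]]. The gradient of b3 is zero: when one bias feeds both softmax neurons, its gradient is (o1 - 1) + o2 = 0, so b3 stays at 0.3.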

Compute the updated values of the filter weights after backpropagation for this problem, using the following data:

[Figure: 3x3 input matrix (values shown in the image), 2x2 filter with weights w1 to w4, hidden neurons h1 and h2, softmax outputs o1 and o2]

- Filter weights: w1 = 0.9, w2 = 0.1, w3 = 0.1, w4 = 0.9; bias b1 = 0.1.
- After the convolution, apply the ReLU activation function, then flatten the resulting values.
- Fully connected to a layer of two neurons: the weights of the links into this layer are w = 0.1 to 0.8 (4 flattened inputs × 2 neurons = 8 links); bias b2 = 0.2.
- Fully connected layer of 2 softmax neurons: the weights of the links are w = 0.1 to 0.4 (2 × 2 = 4 links); bias b3 = 0.3.
- The labeled outputs of the two classes are 1.0 and 0.0.
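
The forward path and the gradients both questions need can be written compactly. This is a sketch under stated assumptions: the filter is applied as a cross-correlation with K = [[w1, w2], [w3, w4]]; the symbols V (weights into the two-neuron layer), U (weights into the softmax layer), t (targets) and the learning rate η are notation introduced here, not taken from the problem; each bias is shared within its layer; the two-neuron layer has no activation; and the loss is cross-entropy.

```latex
\begin{aligned}
c_{rs} &= \sum_{a=0}^{1}\sum_{b=0}^{1} X_{r+a,\,s+b}\,K_{ab} + b_1,
  \qquad f_{2r+s+1} = \max(0,\,c_{rs}), \qquad r,s \in \{0,1\},\\
h_j &= \sum_{i=1}^{4} V_{ji}\,f_i + b_2, \qquad j = 1,2,\\
z_k &= \sum_{j=1}^{2} U_{kj}\,h_j + b_3, \qquad
  o_k = \frac{e^{z_k}}{e^{z_1} + e^{z_2}}, \qquad
  L = -\sum_{k} t_k \log o_k, \qquad t = (1, 0),\\
\frac{\partial L}{\partial z_k} &= o_k - t_k, \qquad
  \frac{\partial L}{\partial U_{kj}} = (o_k - t_k)\,h_j, \qquad
  \frac{\partial L}{\partial b_3} = \sum_k (o_k - t_k) = 0,\\
\frac{\partial L}{\partial h_j} &= \sum_k U_{kj}\,(o_k - t_k), \qquad
  \frac{\partial L}{\partial V_{ji}} = \frac{\partial L}{\partial h_j}\,f_i, \qquad
  \frac{\partial L}{\partial b_2} = \sum_j \frac{\partial L}{\partial h_j},\\
\frac{\partial L}{\partial c_{rs}} &= \mathbb{1}[c_{rs} > 0]
  \sum_{j} V_{j,\,2r+s+1}\,\frac{\partial L}{\partial h_j},\\
\frac{\partial L}{\partial K_{ab}} &= \sum_{r,s} \frac{\partial L}{\partial c_{rs}}\,X_{r+a,\,s+b},
  \qquad \frac{\partial L}{\partial b_1} = \sum_{r,s} \frac{\partial L}{\partial c_{rs}},
  \qquad \theta \leftarrow \theta - \eta\,\frac{\partial L}{\partial \theta}.
\end{aligned}
```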
