
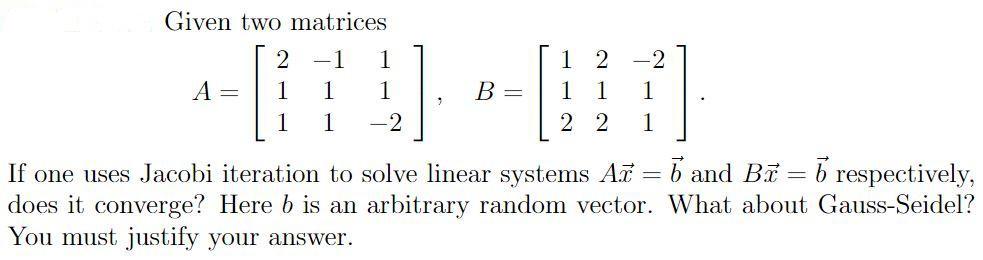

Given two matrices A and B (see the image above). If one uses Jacobi iteration to solve the linear systems Ax = b and Bx = b respectively, does it converge? Here b is an arbitrary random vector. What about Gauss-Seidel? You must justify your answer.

Step by Step Solution


Answer, Step 1: The Jacobi iteration method and the Gauss-Seidel method ... strictly ...
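One way to justify a convergence claim for a specific matrix is to compute the spectral radius of the method's iteration matrix: a stationary iteration converges for every right-hand side b and every starting vector exactly when that radius is below 1. The following is a minimal sketch of that check, assuming NumPy is available. The function names and the matrices `M1` and `M2` are hypothetical stand-ins chosen for illustration; they are not the A and B from the question.

```python
import numpy as np


def spectral_radius(T):
    """Largest eigenvalue magnitude of a square matrix T."""
    return float(np.max(np.abs(np.linalg.eigvals(T))))


def jacobi_iteration_matrix(M):
    """T_J = -D^{-1} (L + U), where M = D + L + U."""
    D = np.diag(np.diag(M))          # diagonal part of M
    return -np.linalg.solve(D, M - D)


def gauss_seidel_iteration_matrix(M):
    """T_GS = -(D + L)^{-1} U, where M = D + L + U."""
    DL = np.tril(M)                  # diagonal plus strictly lower part
    U = np.triu(M, k=1)              # strictly upper part
    return -np.linalg.solve(DL, U)


# Hypothetical example matrices (assumptions, not taken from the question).
M1 = np.array([[4.0, 1.0, 1.0],
               [1.0, 4.0, 1.0],
               [1.0, 1.0, 4.0]])     # strictly diagonally dominant
M2 = np.array([[1.0, 2.0],
               [3.0, 1.0]])          # not diagonally dominant

for name, M in [("M1", M1), ("M2", M2)]:
    rho_j = spectral_radius(jacobi_iteration_matrix(M))
    rho_gs = spectral_radius(gauss_seidel_iteration_matrix(M))
    print(f"{name}: rho(Jacobi) = {rho_j:.4f}, rho(Gauss-Seidel) = {rho_gs:.4f}")
```

For `M1` both radii are below 1, so both methods converge; for `M2` both exceed 1, so both diverge for a generic b. The same two functions can be applied to A and B once their entries are read off the image. Strict diagonal dominance is only a sufficient condition, so a matrix that fails it still needs the spectral radius check before concluding divergence.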
