Gradient descent

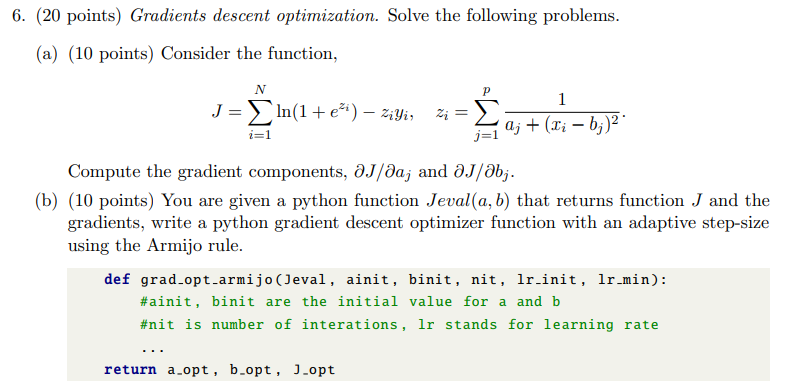

6. (20 points) Gradient descent optimization. Solve the following problems.

(a) (10 points) Consider the function

$$J = \sum_{i=1}^{N} \ln\left(1 + e^{z_i}\right) - z_i y_i, \qquad z_i = \sum_{j=1}^{P} a_j + (x_i - b_j)^2.$$

Compute the gradient components $\partial J/\partial a_j$ and $\partial J/\partial b_j$.

(b) (10 points) You are given a Python function `Jeval(a, b)` that returns the function J and the gradients. Write a Python gradient descent optimizer function with an adaptive step size using the Armijo rule.

    def grad_opt_armijo(Jeval, ainit, binit, nit, lr_init, lr_min):
        # ainit, binit are the initial values for a and b
        # nit is the number of iterations, lr stands for learning rate
        return a_opt, b_opt, J_opt
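For part (b), one possible shape of the optimizer is sketched below. It is a minimal sketch under stated assumptions, not a reference solution: the problem does not say how `Jeval` packs its outputs, so the sketch assumes it returns a tuple `(J, grad_a, grad_b)`. The Armijo constant `c = 0.5`, the doubling of the step after an accepted move, and the halving after a rejected one are also common choices assumed here rather than given in the problem. The `quad_eval` function is a hypothetical toy objective used only to exercise the optimizer.

```python
import numpy as np


def grad_opt_armijo(Jeval, ainit, binit, nit, lr_init, lr_min, c=0.5):
    """Gradient descent with an Armijo-style adaptive step size.

    Assumes Jeval(a, b) returns (J, grad_a, grad_b); c is an assumed
    Armijo constant that is not part of the original signature.
    """
    a = np.asarray(ainit, dtype=float).copy()
    b = np.asarray(binit, dtype=float).copy()
    J, ga, gb = Jeval(a, b)
    lr = lr_init

    for _ in range(nit):
        # Candidate step along the negative gradient.
        a_new = a - lr * ga
        b_new = b - lr * gb
        J_new, ga_new, gb_new = Jeval(a_new, b_new)

        # Armijo sufficient-decrease test:
        # J_new <= J - c * lr * ||grad||^2
        grad_sq = np.sum(ga ** 2) + np.sum(gb ** 2)
        if J_new <= J - c * lr * grad_sq:
            # Accept the step and try a larger step next time.
            a, b, J, ga, gb = a_new, b_new, J_new, ga_new, gb_new
            lr *= 2.0
        else:
            # Reject the step and shrink the step size, but not below lr_min.
            lr = max(lr / 2.0, lr_min)

    return a, b, J


def quad_eval(a, b):
    """Hypothetical toy objective with minimum at a = 1, b = -2."""
    J = np.sum((a - 1.0) ** 2) + np.sum((b + 2.0) ** 2)
    return J, 2.0 * (a - 1.0), 2.0 * (b + 2.0)


a_opt, b_opt, J_opt = grad_opt_armijo(
    quad_eval, np.zeros(2), np.zeros(2), nit=100, lr_init=1.0, lr_min=1e-6
)
```

Rejected steps leave `a`, `b`, and `J` untouched, so the returned `J_opt` is always the objective value at the returned point.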
