
Hello, I cannot provide the data for this question; however, I would like to know the general approach to a problem like this one. Thank you.

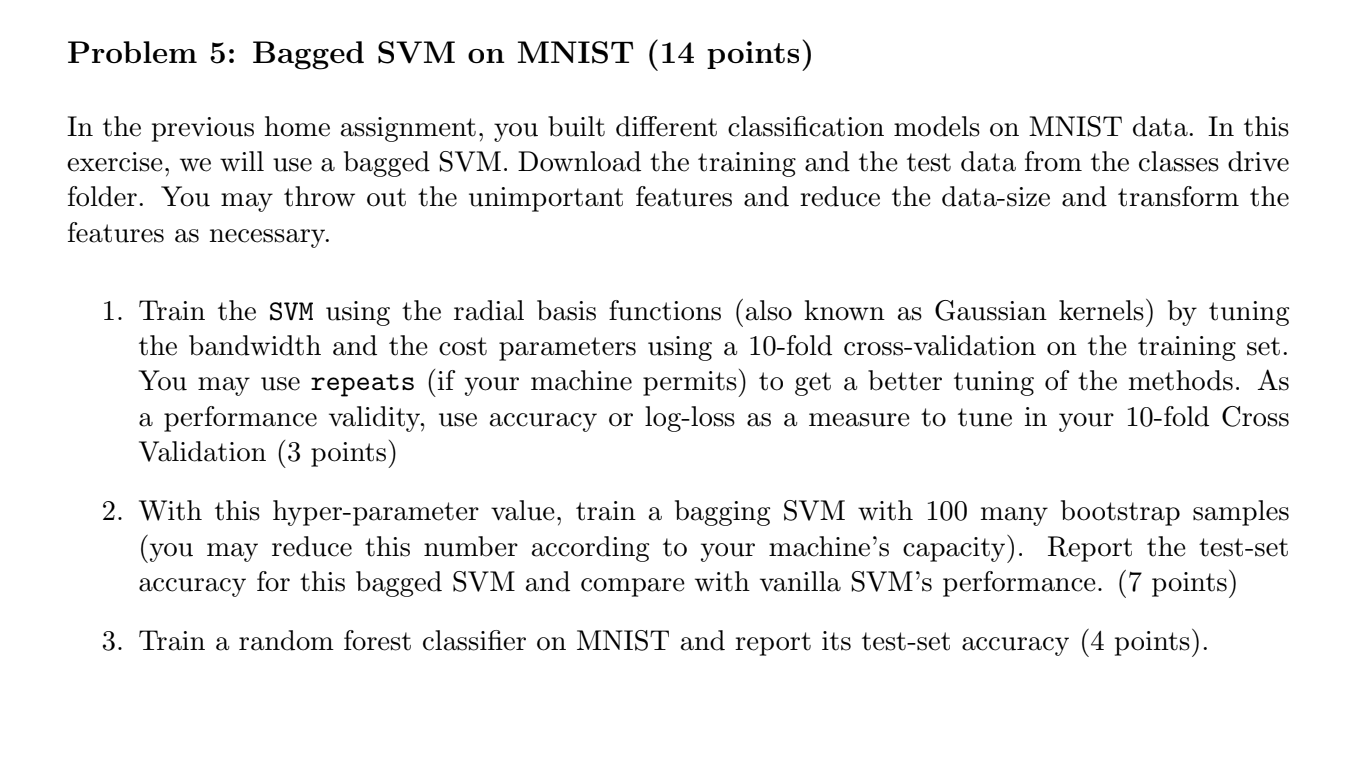

Problem 5: Bagged SVM on MNIST (14 points)

In the previous home assignment, you built different classification models on the MNIST data. In this exercise, we will use a bagged SVM. Download the training and test data from the class's drive folder. You may throw out unimportant features, reduce the data size, and transform the features as necessary.

1. Train the SVM with radial basis functions (also known as Gaussian kernels), tuning the bandwidth and cost parameters by 10-fold cross-validation on the training set. You may use repeats (if your machine permits) to get better tuning. As the performance measure for tuning in your 10-fold cross-validation, use accuracy or log-loss. (3 points)
2. With these hyperparameter values, train a bagged SVM on 100 bootstrap samples (you may reduce this number according to your machine's capacity). Report the test-set accuracy of this bagged SVM and compare it with the vanilla SVM's performance. (7 points)
3. Train a random forest classifier on MNIST and report its test-set accuracy. (4 points)
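One general approach to Parts 1 and 2 can be sketched in Python with scikit-learn. This is a minimal sketch, not the assignment's reference solution. Since the data is not provided, it assumes scikit-learn's small 8×8 `load_digits` set as a stand-in for MNIST with a hypothetical 80/20 split; replace `X_train`, `y_train`, `X_test`, `y_test` with the class files. The grid values for `C` and `gamma` are illustrative assumptions. scikit-learn writes the Gaussian kernel as exp(-gamma·||x - x'||²), so tuning `gamma` is tuning the bandwidth (gamma = 1/(2σ²)). To tune on log-loss instead of accuracy, one would use `SVC(probability=True)` with `scoring="neg_log_loss"`.

```python
# Sketch: tune an RBF SVM by 10-fold CV, then compare bagged vs. vanilla SVM.
# ASSUMPTION: sklearn's 8x8 `load_digits` stands in for the class's MNIST files.
from sklearn.datasets import load_digits
from sklearn.ensemble import BaggingClassifier
from sklearn.feature_selection import VarianceThreshold
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Drop constant (always-blank) pixels, standardize, then fit an RBF SVM.
pipe = make_pipeline(VarianceThreshold(), StandardScaler(), SVC(kernel="rbf"))

# Part 1: tune cost C and bandwidth gamma with 10-fold stratified CV.
# For repeated CV, swap in RepeatedStratifiedKFold(n_splits=10, n_repeats=3).
param_grid = {"svc__C": [1, 10, 100], "svc__gamma": [1e-3, 1e-2, 1e-1]}
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
search = GridSearchCV(pipe, param_grid, cv=cv, scoring="accuracy")
search.fit(X_train, y_train)
print("best params:", search.best_params_)

# Vanilla SVM: the best pipeline, refit by GridSearchCV on the full training set.
svm_acc = search.score(X_test, y_test)

# Part 2: bag 100 clones of the tuned pipeline, each fit on a bootstrap sample;
# predictions are combined by majority vote (SVC here has no predict_proba).
bagged = BaggingClassifier(
    search.best_estimator_, n_estimators=100, bootstrap=True, random_state=0
)
bagged.fit(X_train, y_train)
bag_acc = bagged.score(X_test, y_test)

print(f"vanilla SVM test accuracy: {svm_acc:.4f}")
print(f"bagged SVM test accuracy:  {bag_acc:.4f}")
```

On full MNIST (784 pixels per image), an RBF SVM is much slower, so a common choice is to scale pixels to [0, 1], optionally reduce the dimension with PCA, and run the grid search on a subsample. Bagging tends to help most for high-variance learners, so a well-tuned SVM may gain little over its vanilla version; that comparison is what Part 2 asks you to report.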

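Part 3 can be sketched the same way, under the same assumption (`load_digits` standing in for MNIST, with the same hypothetical split and `random_state=0` so the test set matches the SVM comparison). `n_estimators=300` is an illustrative choice. Because a random forest is itself a bagged ensemble of decision trees (with a random feature subset considered at each split), `oob_score=True` gives an out-of-bag accuracy estimate alongside the test accuracy.

```python
# Sketch: random forest baseline for Part 3.
# ASSUMPTION: sklearn's `load_digits` stands in for the class's MNIST files.
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Trees split on thresholds, so no feature scaling is needed here.
rf = RandomForestClassifier(n_estimators=300, oob_score=True, random_state=0)
rf.fit(X_train, y_train)
rf_acc = rf.score(X_test, y_test)

print(f"random forest OOB accuracy:  {rf.oob_score_:.4f}")
print(f"random forest test accuracy: {rf_acc:.4f}")
```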