Question:

Here is Figure 7.9:

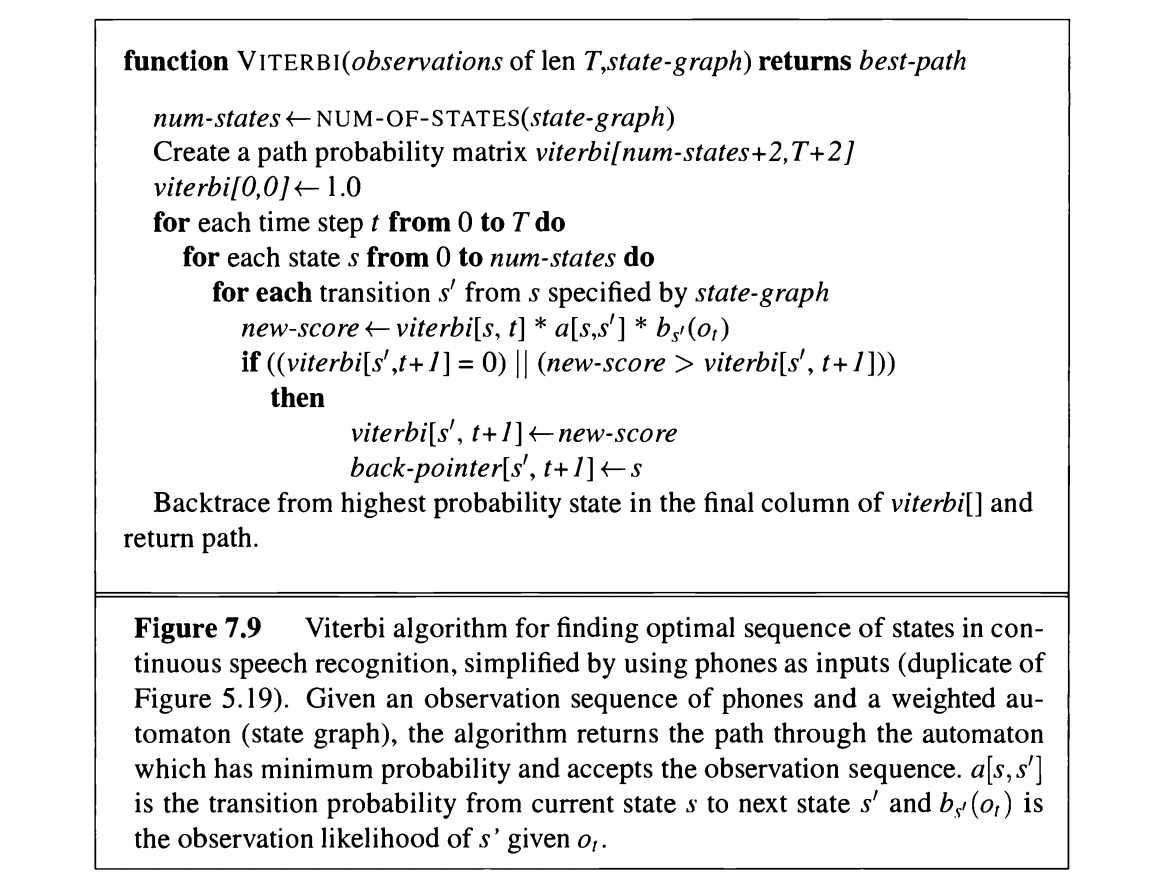

7.3 Now modify the Viterbi algorithm in Figure 7.9 on page 249 to implement the beam search described on page 251. Hint: You will probably need to add code to check whether a given state is at the end of a word.

7.4 Finally, modify the Viterbi algorithm in Figure 7.9 on page 249 with more detailed pseudocode implementing the array of backtrace pointers.

    function VITERBI(observations of len T, state-graph) returns best-path
        num-states ← NUM-OF-STATES(state-graph)
        Create a path probability matrix viterbi[num-states+2, T+2]
        viterbi[0,0] ← 1.0
        for each time step t from 0 to T do
            for each state s from 0 to num-states do
                for each transition s' from s specified by state-graph
                    new-score ← viterbi[s,t] * a[s,s'] * b_s'(o_t)
                    if ((viterbi[s',t+1] = 0) || (new-score > viterbi[s',t+1])) then
                        viterbi[s',t+1] ← new-score
                        back-pointer[s',t+1] ← s
        Backtrace from highest probability state in the final column of viterbi[] and return path.

Figure 7.9 Viterbi algorithm for finding the optimal sequence of states in continuous speech recognition, simplified by using phones as inputs (duplicate of Figure 5.19). Given an observation sequence of phones and a weighted automaton (state graph), the algorithm returns the path through the automaton that has maximum probability and accepts the observation sequence. a[s,s'] is the transition probability from current state s to next state s', and b_s'(o_t) is the observation likelihood of s' given o_t.
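For exercise 7.4, the following is a minimal Python sketch of Figure 7.9 with the back-pointer array made explicit and the backtrace spelled out. It assumes a hypothetical state-graph encoding that is not in the text: `trans[s][s2]` holds a[s,s'], `emit[s2][o]` holds b_s'(o), and state 0 is the non-emitting start state. The function name `viterbi` and its parameters are likewise illustrative. One deliberate deviation: the time loop runs over the T observations only, so column t+1 is filled from o_t and the backtrace starts at column T.

```python
from typing import Dict, List, Tuple

# Hypothetical state-graph encoding (an assumption, not from the text):
#   trans[s][s2] = a[s, s2], transition probability from s to s2
#   emit[s2][o]  = b_{s2}(o), likelihood of observation o in state s2
# State 0 is the non-emitting start state, matching viterbi[0,0] = 1.0.
Trans = Dict[int, Dict[int, float]]
Emit = Dict[int, Dict[str, float]]


def viterbi(observations: List[str], trans: Trans, emit: Emit,
            num_states: int) -> Tuple[List[int], float]:
    T = len(observations)
    # Path-probability matrix and back-pointer array, both sized
    # (num_states + 2) x (T + 2) as in viterbi[num-states+2, T+2].
    vit = [[0.0] * (T + 2) for _ in range(num_states + 2)]
    back = [[-1] * (T + 2) for _ in range(num_states + 2)]
    vit[0][0] = 1.0
    for t in range(T):  # observation o_t moves the search from column t to t+1
        o_t = observations[t]
        for s in range(num_states + 2):
            if vit[s][t] == 0.0:
                continue  # s is unreachable at time t
            for s2, a in trans.get(s, {}).items():
                new_score = vit[s][t] * a * emit.get(s2, {}).get(o_t, 0.0)
                if new_score > vit[s2][t + 1]:
                    vit[s2][t + 1] = new_score
                    back[s2][t + 1] = s  # best predecessor of s2 at t+1
    # Backtrace from the highest-probability state in the final column.
    best = max(range(num_states + 2), key=lambda s: vit[s][T])
    if vit[best][T] == 0.0:
        return [], 0.0  # no path accepts the observations
    path = [best]
    for t in range(T, 0, -1):
        path.append(back[path[-1]][t])
    path.reverse()
    return path, vit[best][T]
```

On a toy graph `trans = {0: {1: 1.0}, 1: {1: 0.5, 2: 0.5}, 2: {2: 1.0}}` with `emit = {1: {"a": 0.9, "b": 0.1}, 2: {"a": 0.2, "b": 0.8}}`, calling `viterbi(["a", "b"], trans, emit, 2)` returns the path `[0, 1, 2]` with probability 0.9 × 0.5 × 0.8 = 0.36 (up to floating-point rounding). Because scores are non-negative, the test `new_score > vit[s2][t + 1]` already covers the figure's `viterbi[s',t+1] = 0` case for every path with non-zero probability.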
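For exercise 7.3, here is a hedged sketch of one common form of beam search layered on the same Viterbi recurrence: after each column is filled, any state whose score falls below `beam` times the best score in that column is pruned and is not extended at the next time step. The ratio-style beam, the sparse per-column dictionaries, the dict-based graph encoding (`trans`, `emit`, start state 0), the function name `beam_viterbi`, and the optional `word_final` set are all assumptions for illustration. `word_final` shows only one possible reading of the hint, restricting the final backtrace to states that end a word; the pruning rule described on page 251 may differ in detail.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical encoding (assumption): trans[s][s2] = a[s, s2],
# emit[s2][o] = b_{s2}(o), and state 0 is the non-emitting start state.
Trans = Dict[int, Dict[int, float]]
Emit = Dict[int, Dict[str, float]]


def beam_viterbi(observations: List[str], trans: Trans, emit: Emit,
                 beam: float = 1e-3,
                 word_final: Optional[Set[int]] = None) -> Tuple[List[int], float]:
    """Viterbi search keeping only states within a ratio `beam` of the best.

    A state survives column t+1 only if its score is at least
    beam * (best score in column t+1); beam = 0 disables pruning.
    """
    # columns[t] maps each surviving state to its path probability at time t.
    columns: List[Dict[int, float]] = [{0: 1.0}]
    # back[t][s2] = best predecessor of s2 at time t (back[0] is unused).
    back: List[Dict[int, int]] = [{}]
    for o_t in observations:
        scores: Dict[int, float] = {}
        ptrs: Dict[int, int] = {}
        for s, score in columns[-1].items():  # only survivors are extended
            for s2, a in trans.get(s, {}).items():
                new_score = score * a * emit.get(s2, {}).get(o_t, 0.0)
                if new_score > scores.get(s2, 0.0):
                    scores[s2] = new_score
                    ptrs[s2] = s
        if not scores:
            return [], 0.0  # every path died out
        threshold = max(scores.values()) * beam
        # Prune: drop states that fall outside the beam.
        survivors = {s: p for s, p in scores.items() if p >= threshold}
        columns.append(survivors)
        back.append({s: ptrs[s] for s in survivors})
    final = columns[-1]
    if word_final is not None:
        # Assumed reading of the hint: a complete path must end at a word end.
        final = {s: p for s, p in final.items() if s in word_final}
    if not final:
        return [], 0.0
    best = max(final, key=lambda s: final[s])
    path = [best]
    for t in range(len(observations), 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, final[best]
```

With the toy graph `trans = {0: {1: 1.0}, 1: {1: 0.5, 2: 0.5}, 2: {2: 1.0}}` and `emit = {1: {"a": 0.9, "b": 0.1}, 2: {"a": 0.2, "b": 0.8}}`, `beam_viterbi(["a", "b"], trans, emit, beam=0.5)` keeps only state 2 at the last step and returns `[0, 1, 2]`. Adding `word_final={1}` with the same beam returns `([], 0.0)`, because the word-final state 1 was already pruned; widening the beam to 0.01 lets it survive and yields `[0, 1, 1]`. This is the usual trade-off: a tighter beam extends fewer states but can discard the path you need. In practice scores are often kept as log probabilities, where the ratio test becomes a fixed difference.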
