
Hint: The claim is wrong. Provide a counterexample for it.

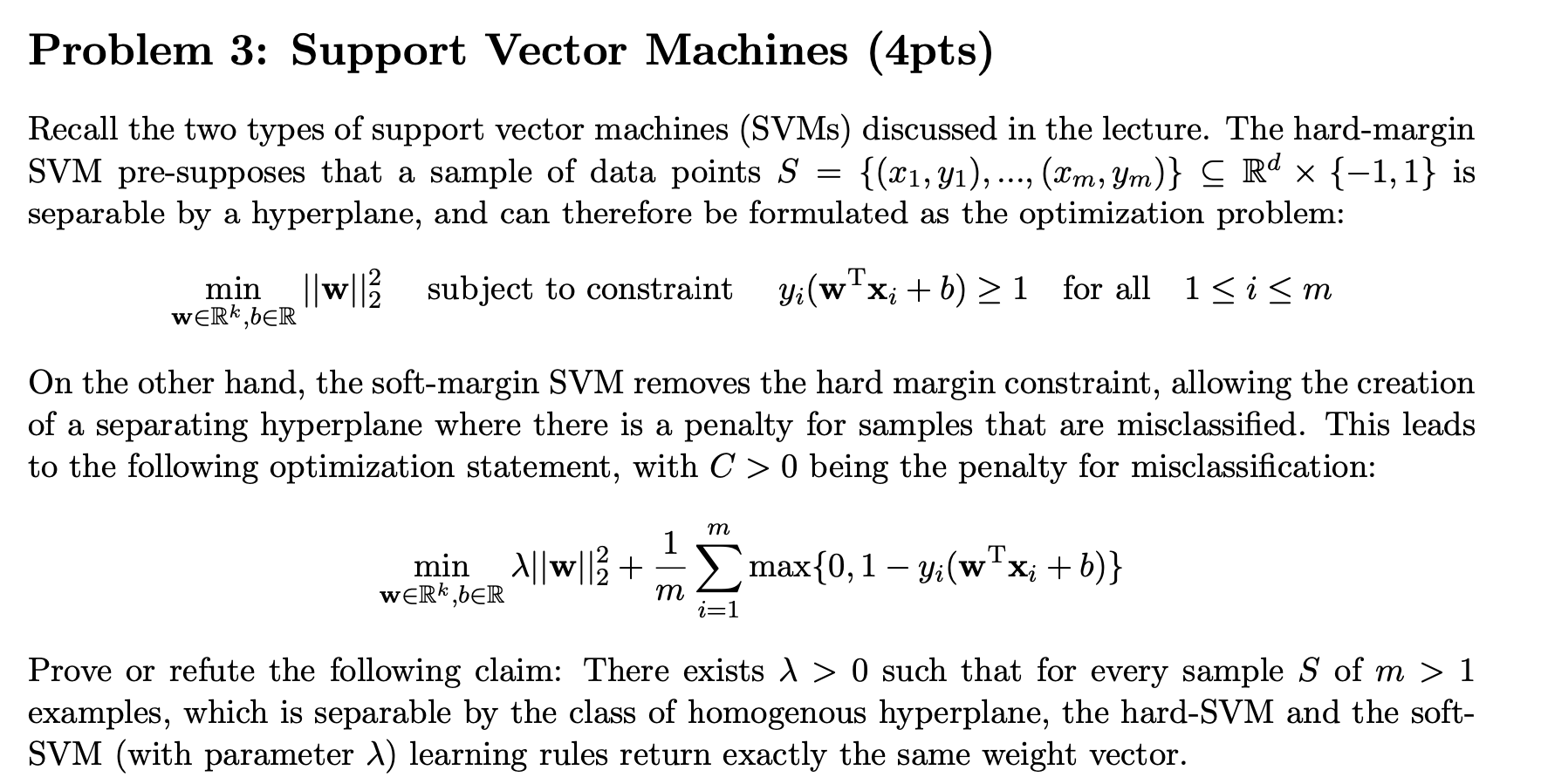

Recall the two types of support vector machines (SVMs) discussed in the lecture. The hard-margin SVM presupposes that a sample of data points $S = \{(x_1, y_1), \ldots, (x_m, y_m)\} \subseteq \mathbb{R}^d \times \{-1, 1\}$ is separable by a hyperplane, and can therefore be formulated as the optimization problem:

$$\min_{w \in \mathbb{R}^d,\, b \in \mathbb{R}} \|w\|_2^2 \quad \text{subject to} \quad y_i (w^T x_i + b) \ge 1 \ \text{ for all } 1 \le i \le m.$$

The soft-margin SVM, on the other hand, relaxes the hard-margin constraint: it allows a hyperplane that misclassifies some samples or places them inside the margin, at a penalty. This leads to the following optimization problem, with $\lambda > 0$ being the parameter that trades off the norm of $w$ against the penalty for misclassification:

$$\min_{w \in \mathbb{R}^d,\, b \in \mathbb{R}} \lambda \|w\|_2^2 + \frac{1}{m} \sum_{i=1}^{m} \max\{0,\ 1 - y_i (w^T x_i + b)\}.$$

Prove or refute the following claim: There exists $\lambda > 0$ such that for every sample $S$ of $m > 1$ examples that is separable by the class of homogeneous hyperplanes, the hard-SVM and the soft-SVM (with parameter $\lambda$) learning rules return exactly the same weight vector.
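One way to explore the hint numerically is to compare the two learning rules on a one-dimensional homogeneous sample (so b = 0). The sketch below is illustrative only and rests on several assumptions: the soft-margin objective is read as lambda * w^2 plus the mean hinge loss, the sample is the two points x = ±sqrt(lambda) with labels ±1, and the function names `scaled_sample`, `hard_svm_1d`, and `soft_svm_1d` are hypothetical. The soft-SVM minimiser is found by a plain grid search rather than an exact solver, so its value is approximate.

```python
import numpy as np


def scaled_sample(lam):
    """Hypothetical 1-D sample whose scale depends on lam: x = +/- sqrt(lam)."""
    a = np.sqrt(lam)
    xs = np.array([a, -a])
    ys = np.array([1.0, -1.0])
    return xs, ys


def hard_svm_1d(xs, ys):
    """Homogeneous hard-SVM in 1-D: minimise w^2 subject to y_i * w * x_i >= 1."""
    margins = ys * xs
    if not (np.all(margins > 0) or np.all(margins < 0)):
        raise ValueError("sample is not separable by a homogeneous hyperplane")
    # The binding constraint comes from the point closest to the origin.
    return float(np.sign(margins[0]) / np.min(np.abs(margins)))


def soft_svm_1d(xs, ys, lam, num=200001):
    """Grid-search minimiser of lam * w^2 + mean hinge loss (homogeneous, 1-D)."""
    # The hard-SVM solution has zero hinge loss, so any soft-SVM minimiser
    # satisfies |w| <= |w_hard|; the grid therefore covers the minimiser.
    bound = abs(hard_svm_1d(xs, ys))
    ws = np.linspace(-bound, bound, num)
    hinge = np.maximum(0.0, 1.0 - np.outer(ws, ys * xs))  # shape (num, m)
    objective = lam * ws**2 + hinge.mean(axis=1)
    return float(ws[np.argmin(objective)])


if __name__ == "__main__":
    for lam in (0.1, 1.0, 10.0):
        xs, ys = scaled_sample(lam)
        print(f"lambda={lam}: hard w={hard_svm_1d(xs, ys):.4f}, "
              f"soft w={soft_svm_1d(xs, ys, lam):.4f}")
```

Under these assumptions, for points at distance a from the origin the hard-SVM weight is 1/a, while the soft objective lambda * w^2 + 1 - a * w on [0, 1/a] is minimised at a / (2 * lambda) whenever a^2 <= 2 * lambda. Choosing a = sqrt(lambda) therefore makes the two weights differ for every lambda, which is the shape a counterexample to the claim would need to take.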
