Question: How can the Boolean X-OR function $f : \{0,1\}^2 \to \{0,1\}$ (defined by $f(a,b) = 1$ iff $(a,b) = (1,0)$ or $(0,1)$) be extended to a two-layer neural network with Heaviside step activation?

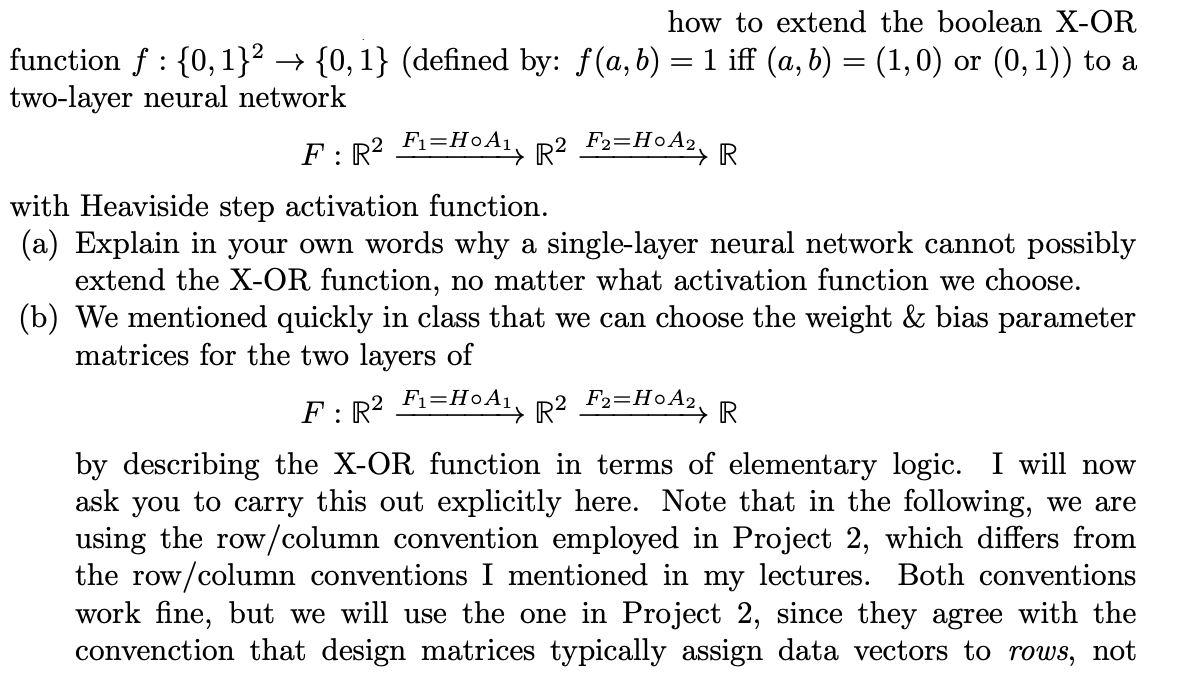

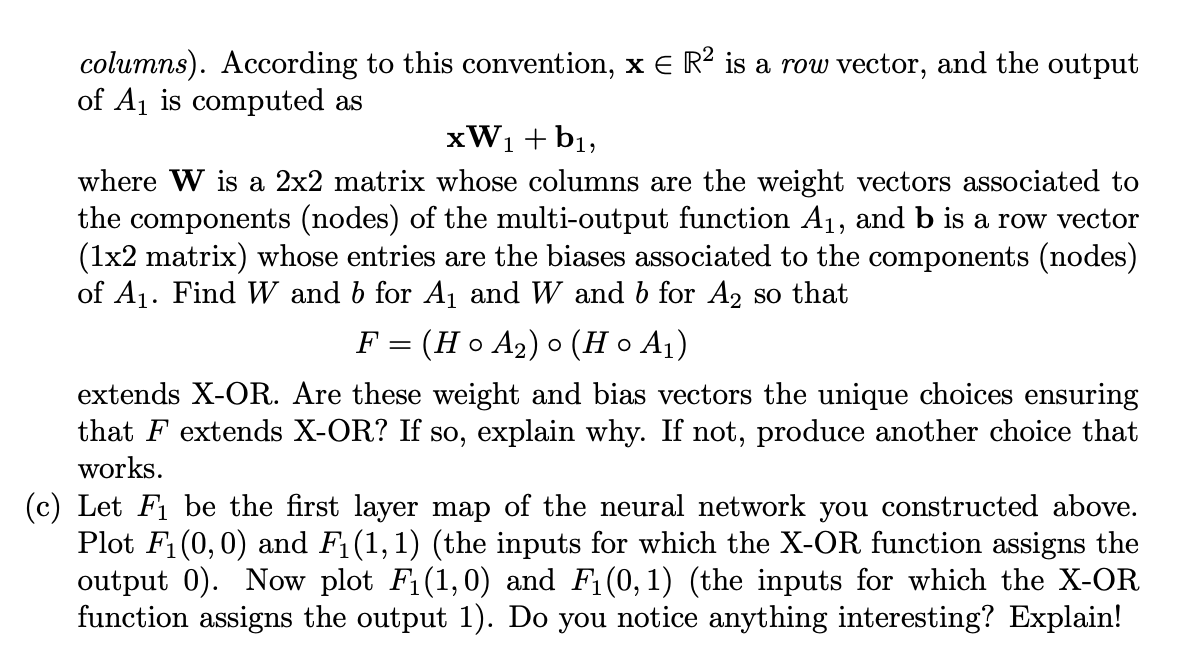

How can we extend the Boolean X-OR function $f : \{0,1\}^2 \to \{0,1\}$ (defined by $f(a,b) = 1$ iff $(a,b) = (1,0)$ or $(0,1)$) to a two-layer neural network $F : \mathbb{R}^2 \xrightarrow{F_1 = H \circ A_1} \mathbb{R}^2 \xrightarrow{F_2 = H \circ A_2} \mathbb{R}$ with the Heaviside step activation function $H$?

(a) Explain in your own words why a single-layer neural network cannot possibly extend the X-OR function, no matter what activation function we choose.

(b) We mentioned quickly in class that we can choose the weight and bias parameter matrices for the two layers of $F : \mathbb{R}^2 \xrightarrow{F_1 = H \circ A_1} \mathbb{R}^2 \xrightarrow{F_2 = H \circ A_2} \mathbb{R}$ by describing the X-OR function in terms of elementary logic. I will now ask you to carry this out explicitly here. Note that in the following, we are using the row/column convention employed in Project 2, which differs from the row/column convention I mentioned in my lectures. Both conventions work fine, but we will use the one in Project 2, since it agrees with the convention that design matrices typically assign data vectors to rows, not columns. According to this convention, $x \in \mathbb{R}^2$ is a row vector, and the output of $A_1$ is computed as $xW_1 + b_1$, where $W_1$ is a $2 \times 2$ matrix whose columns are the weight vectors associated to the components (nodes) of the multi-output function $A_1$, and $b_1$ is a row vector ($1 \times 2$ matrix) whose entries are the biases associated to the components (nodes) of $A_1$. Find $W_1$ and $b_1$ for $A_1$ and $W_2$ and $b_2$ for $A_2$ so that $F = (H \circ A_2) \circ (H \circ A_1)$ extends X-OR. Are these weight and bias vectors the unique choices ensuring that $F$ extends X-OR? If so, explain why. If not, produce another choice that works.

(c) Let $F_1$ be the first-layer map of the neural network you constructed above. Plot $F_1(0,0)$ and $F_1(1,1)$ (the inputs for which the X-OR function assigns the output 0). Now plot $F_1(1,0)$ and $F_1(0,1)$ (the inputs for which the X-OR function assigns the output 1). Do you notice anything interesting? Explain!
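
For part (a), here is a short check for the Heaviside case (a sketch, assuming the convention $H(z) = 1$ for $z \ge 0$ and $H(z) = 0$ for $z < 0$; the strict convention $H(0) = 0$ leads to the same contradiction). A single Heaviside unit with weight column $w = (w_1, w_2)^{T}$ and bias $b$ outputs $H(xw + b)$, and requiring it to agree with X-OR on the four inputs gives:

$$
\begin{aligned}
f(0,0) = 0 &\;\Rightarrow\; H(b) = 0 &&\Rightarrow\; b < 0,\\
f(1,0) = 1 &\;\Rightarrow\; H(w_1 + b) = 1 &&\Rightarrow\; w_1 + b \ge 0,\\
f(0,1) = 1 &\;\Rightarrow\; H(w_2 + b) = 1 &&\Rightarrow\; w_2 + b \ge 0,\\
f(1,1) = 0 &\;\Rightarrow\; H(w_1 + w_2 + b) = 0 &&\Rightarrow\; w_1 + w_2 + b < 0.
\end{aligned}
$$

Adding the middle two lines gives $w_1 + w_2 + 2b \ge 0$, so $w_1 + w_2 + b \ge -b > 0$, which contradicts the last line. Geometrically, the single line $xw + b = 0$ would have to put $(1,0)$ and $(0,1)$ on one side and $(0,0)$ and $(1,1)$ on the other, and no line does that.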



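For part (b), a minimal sketch in plain Python, assuming one common elementary-logic decomposition, $a \oplus b = (a \lor b) \land \lnot(a \land b)$: the first layer computes OR and NAND, and the second layer computes AND. The helper names (`heaviside`, `layer`, `forward`) and the specific numbers in `W1`, `b1`, `W2`, `b2` are illustrative choices, not an official answer. It follows the Project 2 row convention $xW + b$ (column $j$ of $W$ holds the weights of node $j$) and also checks a second, different parameter choice to show the weights are not unique.

```python
def heaviside(z):
    # Step activation H(z) = 1 if z >= 0 else 0. With the biases below no
    # pre-activation is exactly 0, so the convention for H(0) does not matter.
    return 1 if z >= 0 else 0

def layer(x, W, b):
    # Row-vector convention: output_j = H(sum_i x_i * W[i][j] + b[j]),
    # i.e. column j of W holds the weights of node j and b[j] is its bias.
    return [heaviside(sum(x[i] * W[i][j] for i in range(len(x))) + b[j])
            for j in range(len(b))]

def forward(x, W1, b1, W2, b2):
    # F = (H o A2) o (H o A1); the output layer has a single node.
    return layer(layer(x, W1, b1), W2, b2)[0]

INPUTS = [(0, 0), (1, 0), (0, 1), (1, 1)]

# Choice 1 (illustrative): node 1 = OR, node 2 = NAND; output node = AND.
W1 = [[1, -1],
      [1, -1]]
b1 = [-0.5, 1.5]
W2 = [[1],
      [1]]
b2 = [-1.5]

# Choice 2 (illustrative): node 1 = a AND NOT b, node 2 = b AND NOT a;
# output node = OR.
W1_alt = [[1, -1],
          [-1, 1]]
b1_alt = [-0.5, -0.5]
W2_alt = [[1],
          [1]]
b2_alt = [-0.5]

for x in INPUTS:
    print(x, forward(x, W1, b1, W2, b2), forward(x, W1_alt, b1_alt, W2_alt, b2_alt))
```

Both columns of output should read 0, 1, 1, 0. Further valid choices are easy to produce: multiplying any node's weights and bias by the same positive constant leaves the sign of its pre-activation, and hence its Heaviside output, unchanged, and swapping the two hidden nodes (columns of $W_1$, entries of $b_1$, rows of $W_2$) gives the same $F$.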

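For part (c), the points to plot can be tabulated directly. This sketch assumes the illustrative OR/NAND first layer $W_1 = \begin{pmatrix} 1 & -1 \\ 1 & -1 \end{pmatrix}$, $b_1 = (-0.5,\ 1.5)$ (a hypothetical choice, not necessarily the one you constructed) and only prints the hidden-space coordinates; any scatter-plot tool can then draw them.

```python
def heaviside(z):
    # H(z) = 1 if z >= 0 else 0
    return 1 if z >= 0 else 0

# Illustrative first layer: column 0 = OR node, column 1 = NAND node,
# row-vector convention x W1 + b1.
W1 = [[1, -1],
      [1, -1]]
b1 = [-0.5, 1.5]

def F1(x):
    # First-layer map F1 = H o A1 : R^2 -> R^2
    return tuple(heaviside(x[0] * W1[0][j] + x[1] * W1[1][j] + b1[j])
                 for j in range(2))

images = {x: F1(x) for x in [(0, 0), (1, 1), (1, 0), (0, 1)]}
for x, h in images.items():
    print(x, "->", h)
# (0, 0) -> (0, 1)
# (1, 1) -> (1, 0)
# (1, 0) -> (1, 1)
# (0, 1) -> (1, 1)

# In hidden space the line h1 + h2 = 1.5 separates the classes:
# X-OR = 1 inputs land above it, X-OR = 0 inputs land below it.
for x, h in images.items():
    print(x, "XOR =", int(h[0] + h[1] > 1.5))
```

With this choice, the two inputs with X-OR output 1 are sent to the same point $(1,1)$, and the three image points are linearly separable, which is exactly what allows the single output node to finish the computation.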