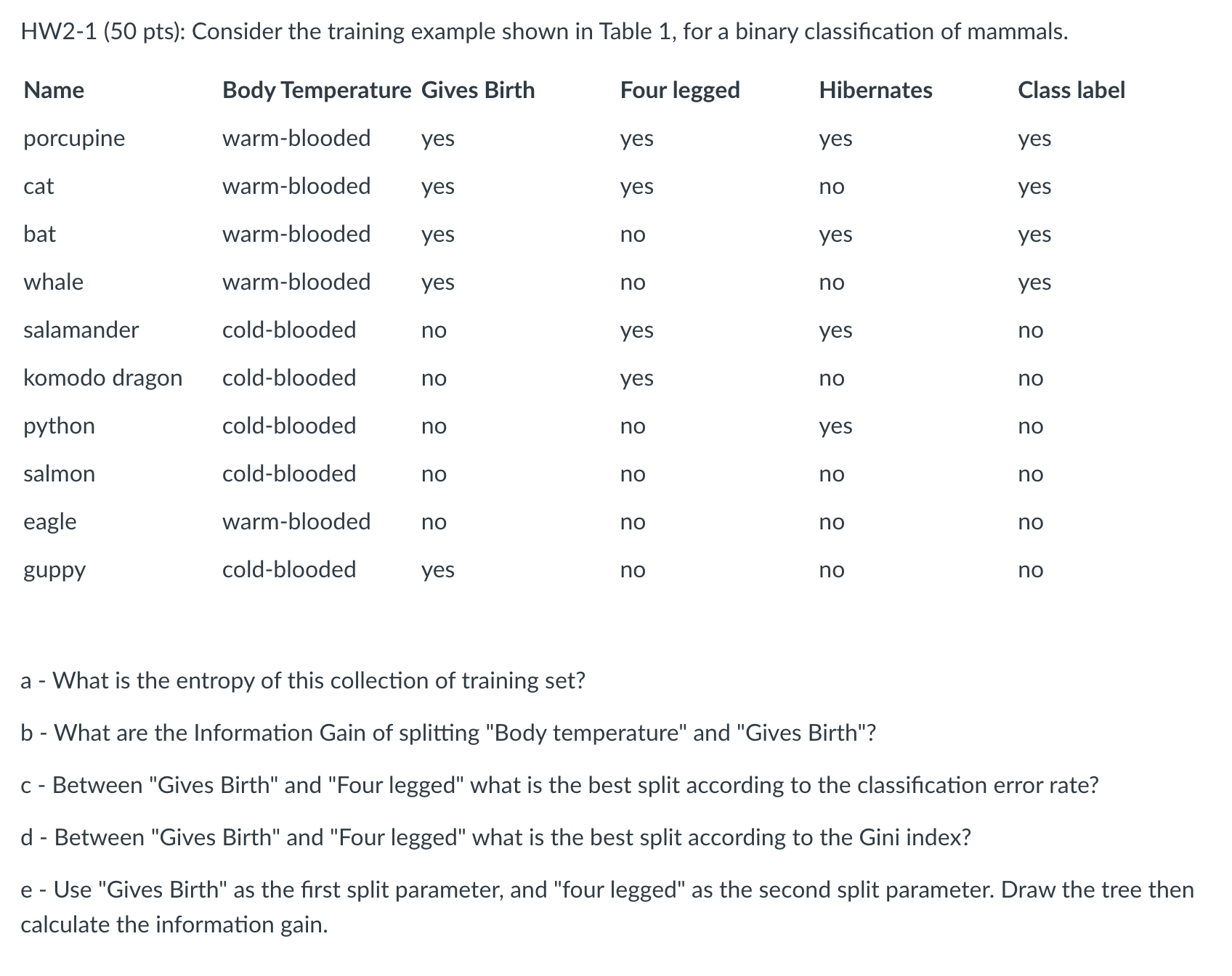

Question: HW2-1 (50 pts): Consider the training examples shown in Table 1 for a binary classification of mammals.

Table 1

| Name | Body Temperature | Gives Birth | Four-legged | Hibernates | Class label |
|---|---|---|---|---|---|
| porcupine | warm-blooded | yes | yes | yes | yes |
| cat | warm-blooded | yes | yes | no | yes |
| bat | warm-blooded | yes | no | yes | yes |
| whale | warm-blooded | yes | no | no | yes |
| salamander | cold-blooded | no | yes | yes | no |
| komodo dragon | cold-blooded | no | yes | no | no |
| python | cold-blooded | no | no | yes | no |
| salmon | cold-blooded | no | no | no | no |
| eagle | warm-blooded | no | no | no | no |
| guppy | cold-blooded | yes | no | no | no |

- (a) What is the entropy of this collection of training examples?
- (b) What is the information gain of splitting on "Body Temperature" and on "Gives Birth"?
- (c) Between "Gives Birth" and "Four-legged", which is the better split according to the classification error rate?
- (d) Between "Gives Birth" and "Four-legged", which is the better split according to the Gini index?
- (e) Use "Gives Birth" as the first split attribute and "Four-legged" as the second split attribute. Draw the tree, then calculate the information gain.
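For reference, the impurity measures the question names are usually defined as follows. This is a sketch in standard notation, not taken from the assignment itself: $p_i$ is assumed to be the fraction of records at node $t$ that belong to class $i$, $N$ the number of records at the parent, and $N_j$ the number of records sent to child $t_j$.

$$
\begin{aligned}
\text{Entropy}(t) &= -\sum_i p_i \log_2 p_i \\
\text{Gini}(t) &= 1 - \sum_i p_i^2 \\
\text{Error}(t) &= 1 - \max_i p_i \\
\text{Gain} &= \text{Entropy}(\text{parent}) - \sum_j \frac{N_j}{N}\,\text{Entropy}(t_j)
\end{aligned}
$$

For parts (c) and (d), the better split is the one with the lower weighted impurity $\sum_j \frac{N_j}{N}\, I(t_j)$, where $I$ is the error rate or the Gini index respectively.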

Step by Step Solution


To address this question step by step, let's start by calculating the entropy ...
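Since the worked answer is cut off here, the calculations can be checked with a short script. The following is a minimal sketch rather than the original solution: it assumes the ten rows of Table 1 read as transcribed in `ROWS`, and the helper names (`entropy`, `gini`, `class_error`, `split_impurity`, `gain`) are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict
from math import log2

# Assumed transcription of Table 1:
# (name, body_temp, gives_birth, four_legged, hibernates, class_label)
ROWS = [
    ("porcupine",     "warm", "yes", "yes", "yes", "yes"),
    ("cat",           "warm", "yes", "yes", "no",  "yes"),
    ("bat",           "warm", "yes", "no",  "yes", "yes"),
    ("whale",         "warm", "yes", "no",  "no",  "yes"),
    ("salamander",    "cold", "no",  "yes", "yes", "no"),
    ("komodo dragon", "cold", "no",  "yes", "no",  "no"),
    ("python",        "cold", "no",  "no",  "yes", "no"),
    ("salmon",        "cold", "no",  "no",  "no",  "no"),
    ("eagle",         "warm", "no",  "no",  "no",  "no"),
    ("guppy",         "cold", "yes", "no",  "no",  "no"),
]
COL = {"body_temp": 1, "gives_birth": 2, "four_legged": 3, "hibernates": 4}
LABELS = [row[-1] for row in ROWS]

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def class_error(labels):
    return 1.0 - max(Counter(labels).values()) / len(labels)

def split_impurity(attrs, impurity):
    """Weighted child impurity after splitting on all of `attrs` jointly."""
    groups = defaultdict(list)
    for row in ROWS:
        groups[tuple(row[COL[a]] for a in attrs)].append(row[-1])
    return sum(len(g) / len(ROWS) * impurity(g) for g in groups.values())

def gain(attrs, impurity=entropy):
    return impurity(LABELS) - split_impurity(attrs, impurity)

print(f"(a) entropy            = {entropy(LABELS):.3f}")          # 0.971
print(f"(b) gain(body_temp)    = {gain(['body_temp']):.3f}")      # 0.610
print(f"(b) gain(gives_birth)  = {gain(['gives_birth']):.3f}")    # 0.610
for name, fn in (("error", class_error), ("gini", gini)):
    gb = split_impurity(["gives_birth"], fn)
    fl = split_impurity(["four_legged"], fn)
    print(f"(c/d) {name}: gives_birth={gb:.3f}, four_legged={fl:.3f}")
# (e) The joint split also divides the gives_birth = no branch on four_legged,
# but that branch is already pure, so the result matches the two-level tree.
print(f"(e) gain(two-level)    = {gain(['gives_birth', 'four_legged']):.3f}")  # 0.695
```

Under these assumptions, "Gives Birth" has the lower weighted error rate (0.100 versus 0.400) and the lower weighted Gini index (0.160 versus 0.467), so it is the better split under both criteria.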
