I am working on a project comparing different methods to reduce error rates on the MNIST dataset. I found a result table like the one below and need help understanding and implementing these methods:

Methods compared (their test error rates appear in the table image below):

- Neural network with ridge regularization
- Neural network with dropout regularization
- Multinomial logistic regression
- Linear discriminant analysis
- Neural network with LASSO regularization
- Convolutional neural network (CNN)

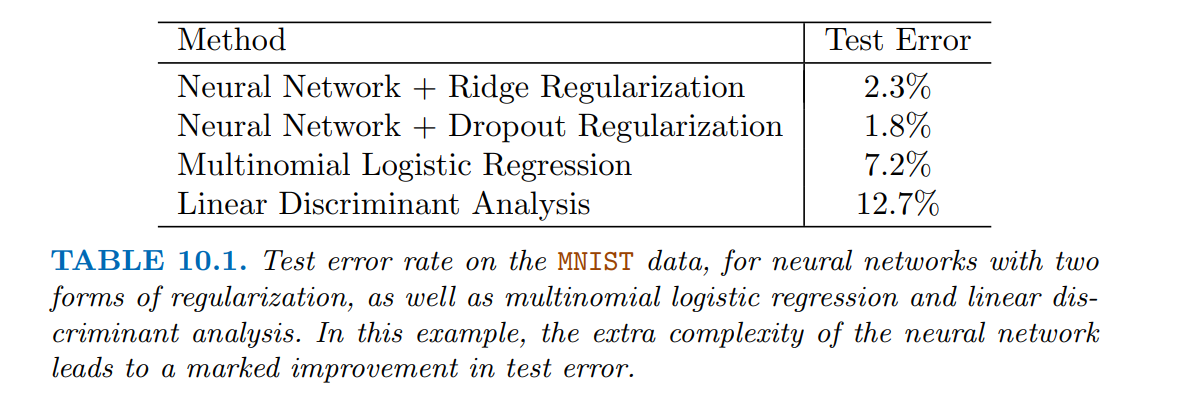

Table: Test error rate on the MNIST data for neural networks with two forms of regularization, as well as for multinomial logistic regression and linear discriminant analysis. In this example, the extra complexity of the neural network leads to a marked improvement in test error.
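The two non-neural baselines in the table can be sketched in plain NumPy. This is a minimal illustration, not the code behind the table. It assumes `X` is an `(n, 784)` array of pixel values scaled to [0, 1] and `y` holds integer labels 0 to 9 (loading MNIST is not shown). The learning rate, iteration count and the small covariance `shrink` term are assumed values. The test error rate is simply the fraction of misclassified test images.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def fit_multinomial_logreg(X, y, n_classes=10, lr=0.05, n_iter=1000):
    """Multinomial (softmax) logistic regression fitted by full-batch gradient descent
    on the mean cross-entropy loss. lr and n_iter are assumed values, not tuned ones."""
    n, p = X.shape
    W = np.zeros((p, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                 # one-hot targets, shape (n, K)
    for _ in range(n_iter):
        G = (softmax(X @ W + b) - Y) / n     # gradient of the mean loss w.r.t. the logits
        W -= lr * (X.T @ G)
        b -= lr * G.sum(axis=0)
    return W, b

def predict_multinomial_logreg(W, b, X):
    return np.argmax(X @ W + b, axis=1)

def fit_lda(X, y, n_classes=10, shrink=1e-3):
    """LDA: per-class means, one pooled covariance, class priors.
    `shrink` (an assumption) adds a small multiple of the identity because
    constant pixels make the raw pooled covariance singular."""
    n, p = X.shape
    means = np.array([X[y == k].mean(axis=0) for k in range(n_classes)])
    priors = np.array([np.mean(y == k) for k in range(n_classes)])
    centered = X - means[y]
    cov = centered.T @ centered / (n - n_classes) + shrink * np.eye(p)
    coef = np.linalg.solve(cov, means.T).T   # row k is (Sigma^-1 mu_k)^T
    # discriminant: delta_k(x) = x^T Sigma^-1 mu_k - 0.5 mu_k^T Sigma^-1 mu_k + log pi_k
    intercept = -0.5 * np.sum(coef * means, axis=1) + np.log(priors)
    return coef, intercept

def predict_lda(coef, intercept, X):
    return np.argmax(X @ coef.T + intercept, axis=1)

def error_rate(y_true, y_pred):
    """Test error rate = fraction of misclassified test examples."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))
```

For example, `error_rate(y_test, predict_lda(*fit_lda(X_train, y_train), X_test))` would give the LDA test error for your own (hypothetical) train/test arrays. Both models are linear in the pixels. LDA assumes each digit class is Gaussian with a shared covariance, while multinomial logistic regression models the class probabilities directly; that difference in assumptions is one plausible reason their error rates differ.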

My Questions:

Code for these methods: Can anyone share Python code that implements these methods? Specifically:

- Neural network with ridge regularization
- Neural network with dropout regularization
- Multinomial logistic regression
- Linear discriminant analysis
- Neural network with LASSO regularization
- Convolutional neural network (CNN)
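For the three regularized neural networks, one option is a small fully connected network written directly in NumPy, where ridge, LASSO and dropout are just switches on the same training step. This is a hedged sketch. The layer sizes (for example 784-256-128-10), He initialisation, full-batch gradient descent and all hyperparameters are assumptions for illustration, not the settings that produced the table.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def init_mlp(sizes, rng):
    """He-initialised (W, b) per layer, e.g. sizes=[784, 256, 128, 10] (hypothetical layout)."""
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, X, drop_rates, rng=None):
    """Return logits plus cached activations/masks. Inverted dropout is applied to the
    hidden layers only when an rng is passed (training); use rng=None at test time."""
    acts, masks, h = [X], [], X
    for (W, b), rate in zip(params[:-1], drop_rates):
        h = relu(h @ W + b)
        if rng is not None and rate > 0:
            m = (rng.random(h.shape) >= rate) / (1.0 - rate)
        else:
            m = np.ones_like(h)
        h = h * m
        acts.append(h)
        masks.append(m)
    W, b = params[-1]
    return h @ W + b, acts, masks

def train_step(params, X, y, lr, drop_rates, rng, l2=0.0, l1=0.0):
    """One gradient step on mean cross-entropy + l2*sum(W^2) (ridge) + l1*sum(|W|) (LASSO).
    Returns the updated parameters and the cross-entropy part of the loss."""
    logits, acts, masks = forward(params, X, drop_rates, rng)
    z = logits - logits.max(axis=1, keepdims=True)
    P = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    n = X.shape[0]
    loss = -np.mean(np.log(P[np.arange(n), y] + 1e-12))
    delta = P.copy()
    delta[np.arange(n), y] -= 1.0
    delta /= n                                   # d(loss)/d(logits)
    grads = [None] * len(params)
    for i in range(len(params) - 1, -1, -1):
        W, b = params[i]
        # data gradient + ridge gradient (2*l2*W) + LASSO subgradient (l1*sign(W)); biases unpenalised
        grads[i] = (acts[i].T @ delta + 2.0 * l2 * W + l1 * np.sign(W), delta.sum(axis=0))
        if i > 0:
            # back-propagate through the dropout mask and ReLU of hidden layer i
            delta = (delta @ W.T) * masks[i - 1] * (acts[i] > 0)
    new_params = [(W - lr * gW, b - lr * gb) for (W, b), (gW, gb) in zip(params, grads)]
    return new_params, loss

def predict(params, X):
    logits, _, _ = forward(params, X, [0.0] * (len(params) - 1))
    return np.argmax(logits, axis=1)
```

Under these assumptions, ridge corresponds to `l2 > 0`, LASSO to `l1 > 0`, and dropout to nonzero `drop_rates` such as `[0.4, 0.3]` (illustrative values). Full-batch steps over the 60,000 MNIST training images are slow in pure NumPy, so in practice you would iterate over mini-batches or use a framework such as PyTorch or Keras, which provide weight penalties and dropout layers directly.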

Explanation of the methods: Can someone explain in detail how each method works and why the error rates differ so much? In particular, why does dropout regularization perform better than ridge regularization in this case?
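The regularizers differ in what they do to the weights during training. Ridge adds λ Σ w² to the loss and shrinks every weight by the same proportion. LASSO adds λ Σ |w| and can push small weights to exactly zero. Dropout adds no penalty term; it randomly zeroes hidden units on each training pass, so no unit can rely on particular others, which is often described as training an ensemble of thinned networks. The NumPy sketch below makes these mechanics concrete, with `lam`, `lr` and `rate` as hypothetical parameter names; which regularizer works best on a given dataset remains an empirical question.

```python
import numpy as np

def ridge_penalty(weights, lam):
    """Ridge (L2) penalty: lam * sum of squared weights over all layers."""
    return lam * sum(float(np.sum(W ** 2)) for W in weights)

def lasso_penalty(weights, lam):
    """LASSO (L1) penalty: lam * sum of absolute weights over all layers."""
    return lam * sum(float(np.sum(np.abs(W))) for W in weights)

def ridge_shrink(W, lam, lr):
    """One gradient step on the ridge term alone: every weight is scaled by the same factor."""
    return W * (1.0 - 2.0 * lr * lam)

def lasso_shrink(W, lam, lr):
    """Proximal (soft-threshold) step on the L1 term: weights smaller than lr*lam become exactly 0."""
    return np.sign(W) * np.maximum(np.abs(W) - lr * lam, 0.0)

def inverted_dropout(h, rate, rng, training=True):
    """Zero each unit with probability `rate` during training and rescale survivors by
    1/(1 - rate) so the expected activation is unchanged; at test time pass h through."""
    if not training or rate == 0.0:
        return h
    keep = rng.random(h.shape) >= rate
    return h * keep / (1.0 - rate)
```

For instance, with `W = [[0.05, -2.0], [0.5, 1.0]]`, `lam=1.0` and `lr=0.1`, the ridge step scales every entry by 0.8, whereas the LASSO step sets the 0.05 entry to exactly zero and moves the others 0.1 towards zero.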

Use of multilayer neural networks: Do these methods use multilayer neural networks? If so, what does the architecture look like for each method?
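Only the neural-network rows are multilayer models: multinomial logistic regression is equivalent to a network with no hidden layer (a softmax over a linear function of the pixels), and LDA is not a neural network at all. A CNN differs from the fully connected networks in its first layers, which slide small learned filters over the image and then pool the results. The NumPy forward pass below sketches one convolution + ReLU + max-pool block feeding a dense output layer. The layout (8 filters of size 5×5, one pooling step) and the initial weight scales are hypothetical, and training is not shown.

```python
import numpy as np

def conv2d(images, kernels, bias):
    """'Valid' stride-1 2-D convolution (cross-correlation, as deep-learning libraries compute it).
    images: (N, C_in, H, W); kernels: (C_out, C_in, kH, kW); bias: (C_out,)
    returns: (N, C_out, H - kH + 1, W - kW + 1)"""
    n, _, h, w = images.shape
    c_out, _, kh, kw = kernels.shape
    out = np.zeros((n, c_out, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = images[:, :, i:i + kh, j:j + kw]       # (N, C_in, kH, kW)
            out[:, :, i, j] = np.tensordot(patch, kernels, axes=([1, 2, 3], [1, 2, 3]))
    return out + bias[None, :, None, None]

def max_pool2x2(x):
    """2x2 max-pooling with stride 2 (an odd trailing row/column is dropped)."""
    n, c, h, w = x.shape
    x = x[:, :, :h - h % 2, :w - w % 2]
    return x.reshape(n, c, h // 2, 2, w // 2, 2).max(axis=(3, 5))

def tiny_cnn_logits(images, params):
    """Hypothetical layout: conv(8 filters, 5x5) -> ReLU -> 2x2 max-pool -> flatten -> dense(10).
    For 28x28 MNIST images: (N,1,28,28) -> (N,8,24,24) -> (N,8,12,12) -> (N,1152) -> (N,10)."""
    feat = max_pool2x2(np.maximum(conv2d(images, params["k"], params["kb"]), 0.0))
    flat = feat.reshape(feat.shape[0], -1)
    return flat @ params["W"] + params["b"]

def init_tiny_cnn(rng):
    """Random starting weights for the hypothetical layout above (scales are assumptions)."""
    return {"k": rng.normal(0.0, 0.2, size=(8, 1, 5, 5)), "kb": np.zeros(8),
            "W": rng.normal(0.0, 0.03, size=(8 * 12 * 12, 10)), "b": np.zeros(10)}
```

The filters are shared across all image positions, so this conv layer has only 8 × 25 + 8 = 208 parameters, compared with 784 × 256 weights for a first dense layer of 256 units. In a framework you would typically build the same block from PyTorch's `nn.Conv2d` and `nn.MaxPool2d` or Keras's `Conv2D` and `MaxPooling2D`.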
