I need help understanding the concepts behind Bayesian inference exercises 4.2, 4.3, 4.6, 4.7 and 4.8, and how to approach them. What are the Laplace approximation and the MAP estimate? What are the log posterior and the closed-form solution in this problem?

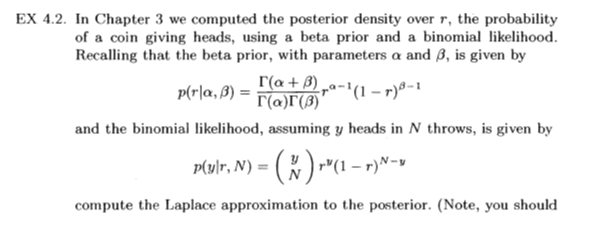

EX 4.2. In Chapter 3 we computed the posterior density over $r$, the probability of a coin giving heads, using a beta prior and a binomial likelihood. Recalling that the beta prior, with parameters $\alpha$ and $\beta$, is given by

$$p(r \mid \alpha, \beta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\, r^{\alpha - 1} (1 - r)^{\beta - 1}$$

and the binomial likelihood, assuming $y$ heads in $N$ throws, is given by

$$p(y \mid r, N) = \binom{N}{y}\, r^{y} (1 - r)^{N - y},$$

compute the Laplace approximation to the posterior. (Note, you should be able to obtain a closed-form solution for the MAP value, $\hat{r}$, by setting the log posterior to zero, differentiating and equating to zero.)

EX 4.3. Plot the true beta posterior and the Laplace approximation computed in Exercise EX 4.2 for various values of $\alpha$, $\beta$, $y$ and $N$.
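One way to approach EX 4.2 and EX 4.3 is sketched below; it is an illustration under stated assumptions, not the textbook's own solution. Multiplying the prior by the likelihood gives a posterior proportional to $r^{a-1}(1-r)^{b-1}$ with $a = \alpha + y$ and $b = \beta + N - y$, so the log posterior is $(a-1)\log r + (b-1)\log(1-r)$ plus a constant. Differentiating with respect to $r$ and setting the derivative to zero gives the MAP value $\hat{r} = (a-1)/(a+b-2)$, which requires $a > 1$ and $b > 1$. The Laplace approximation is then a Gaussian centred at $\hat{r}$ whose variance is the inverse of the negative second derivative of the log posterior at $\hat{r}$. The function names and the example values ($\alpha = 2$, $\beta = 2$, $y = 7$, $N = 10$) are hypothetical and chosen only for illustration.

```python
import math


def laplace_beta_posterior(alpha, beta, y, N):
    """Return (r_hat, sigma2) of the Laplace approximation to the
    beta-binomial posterior. Assumes an interior mode (a > 1 and b > 1)."""
    a = alpha + y          # posterior is Beta(a, b)
    b = beta + N - y
    if a <= 1 or b <= 1:
        raise ValueError("interior mode needs alpha + y > 1 and beta + N - y > 1")
    # MAP value: root of d/dr [(a-1) log r + (b-1) log(1-r)]
    r_hat = (a - 1) / (a + b - 2)
    # Negative second derivative of the log posterior, evaluated at r_hat
    neg_second_deriv = (a - 1) / r_hat**2 + (b - 1) / (1 - r_hat) ** 2
    return r_hat, 1.0 / neg_second_deriv


def beta_pdf(r, a, b):
    """Beta(a, b) density at 0 < r < 1, computed in log space for stability."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(r) + (b - 1) * math.log(1 - r))


def normal_pdf(r, mean, var):
    """Gaussian density with the given mean and variance."""
    return math.exp(-((r - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


# Illustrative values only (not taken from the exercise)
alpha, beta, y, N = 2.0, 2.0, 7, 10
r_hat, sigma2 = laplace_beta_posterior(alpha, beta, y, N)

# Tabulate both densities on a grid that avoids the endpoints 0 and 1;
# these columns can be passed to any plotting library for EX 4.3.
grid = [i / 20 for i in range(1, 20)]
for r in grid:
    exact = beta_pdf(r, alpha + y, beta + N - y)
    approx = normal_pdf(r, r_hat, sigma2)
    print(f"r={r:.2f}  beta={exact:.4f}  laplace={approx:.4f}")
```

Repeating the tabulation for larger $N$ (with $y$ scaled in proportion) should show the two curves getting closer, because the posterior becomes more sharply peaked and more nearly symmetric around its mode.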
