Question: Python code for a Perceptron with Backpropagation

I need help with this Python code for a Perceptron with Backpropagation. Here are the requirements:

- Ability to create a network structure with at least one hidden layer and an arbitrary number of nodes.

- Random weight initialization: small random weights with a mean of 0 and a variance of 1.

- Use stochastic (on-line) training updates: iterate and update weights after each training instance (i.e., do not attempt batch updates).

- Implement a validation set based stopping criterion.

- Shuffle training set at each epoch.

- Option to include a momentum term.
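The requirements above could fit together roughly as follows. This is a minimal sketch, not the assignment's intended solution. It assumes a single hidden layer, sigmoid activations, one-hot encoded targets, and a patience-based stop on validation MSE. The standalone functions `forward`, `fit`, and `predict`, and the parameters `n_hidden`, `patience`, `max_epochs`, and `seed`, are illustrative names that do not appear in the original stub.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, W2):
    """Forward pass for one instance; a constant 1 is appended as the bias input."""
    h = sigmoid(W1 @ np.append(x, 1.0))
    o = sigmoid(W2 @ np.append(h, 1.0))
    return h, o

def predict(X, W1, W2):
    """Return the index of the most active output node for each row of X."""
    return np.array([np.argmax(forward(x, W1, W2)[1]) for x in X])

def fit(X, Y, X_val, Y_val, n_hidden, lr=0.1, momentum=0.0, shuffle=True,
        patience=10, max_epochs=500, seed=0):
    """Train with per-instance updates. Y and Y_val are assumed to be one-hot."""
    rng = np.random.default_rng(seed)
    n_in, n_out = X.shape[1], Y.shape[1]
    # Weights drawn with mean 0 and variance 1; the last column is the bias weight.
    W1 = rng.normal(0.0, 1.0, size=(n_hidden, n_in + 1))
    W2 = rng.normal(0.0, 1.0, size=(n_out, n_hidden + 1))
    dW1, dW2 = np.zeros_like(W1), np.zeros_like(W2)  # previous updates, for momentum
    best_mse, best_W1, best_W2 = np.inf, W1.copy(), W2.copy()
    stale = 0
    for epoch in range(max_epochs):
        # Reshuffle the visiting order each epoch unless shuffling is disabled.
        order = rng.permutation(len(X)) if shuffle else np.arange(len(X))
        for i in order:
            x, t = X[i], Y[i]
            h, o = forward(x, W1, W2)
            # Backpropagated error terms for sigmoid units.
            delta_o = (t - o) * o * (1.0 - o)
            delta_h = (W2[:, :-1].T @ delta_o) * h * (1.0 - h)
            # Stochastic update: weights change after every training instance.
            dW2 = lr * np.outer(delta_o, np.append(h, 1.0)) + momentum * dW2
            dW1 = lr * np.outer(delta_h, np.append(x, 1.0)) + momentum * dW1
            W2 += dW2
            W1 += dW1
        # Validation-based stopping (assumed criterion: no MSE improvement
        # for `patience` consecutive epochs).
        val_mse = np.mean([(t - forward(x, W1, W2)[1]) ** 2
                           for x, t in zip(X_val, Y_val)])
        if val_mse < best_mse - 1e-6:
            best_mse, best_W1, best_W2 = val_mse, W1.copy(), W2.copy()
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break
    # Return the weights from the epoch with the lowest validation error.
    return best_W1, best_W2
```

Inside a class like the one in the stub, `W1` and `W2` would presumably live on `self`, and `fit()` would carve the validation set out of the training data before the loop starts.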

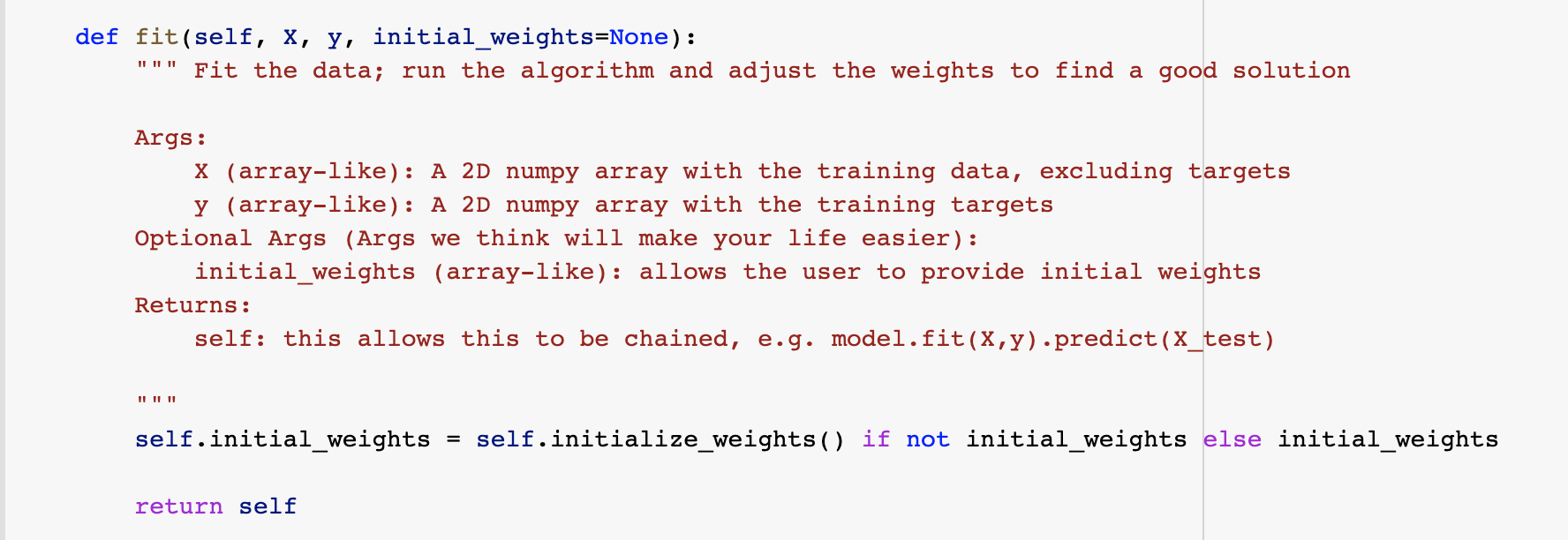

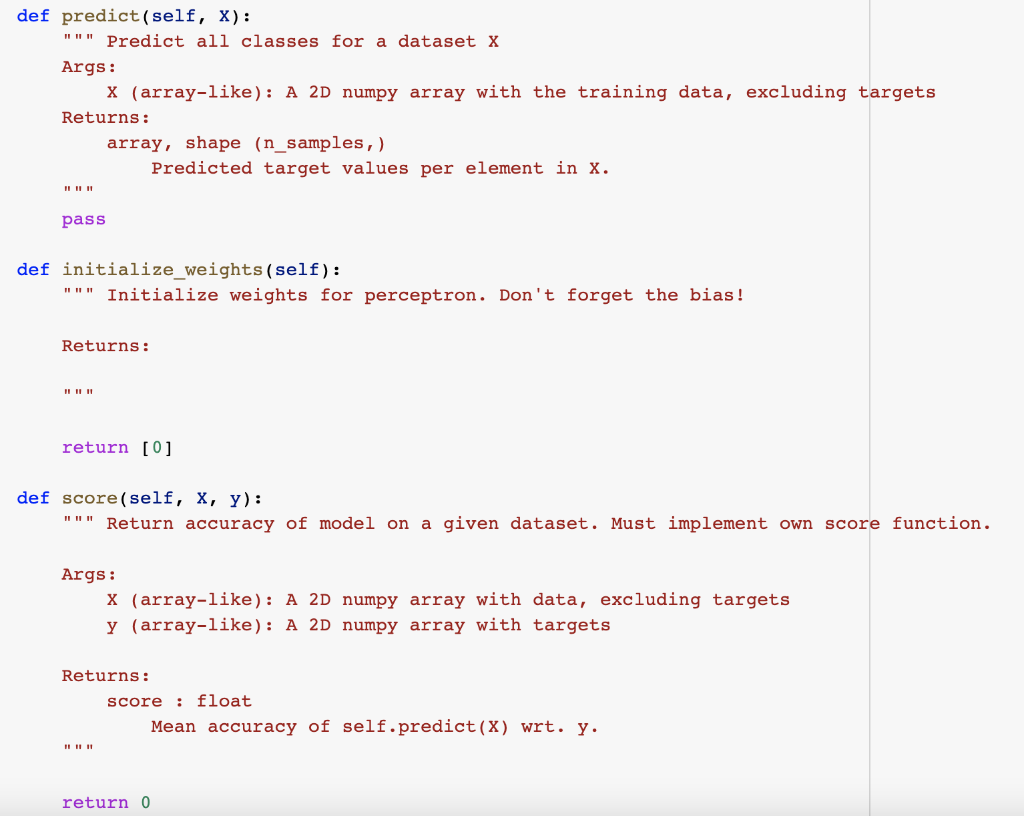

There are really only 2 functions that I need help with (fit() and predict()). Here they are stubbed out:

I know this is probably a hefty order, but I'm truly at the end of my wits here. Let me know if you have any questions about it. I'll take all the help I can get! Thank you!

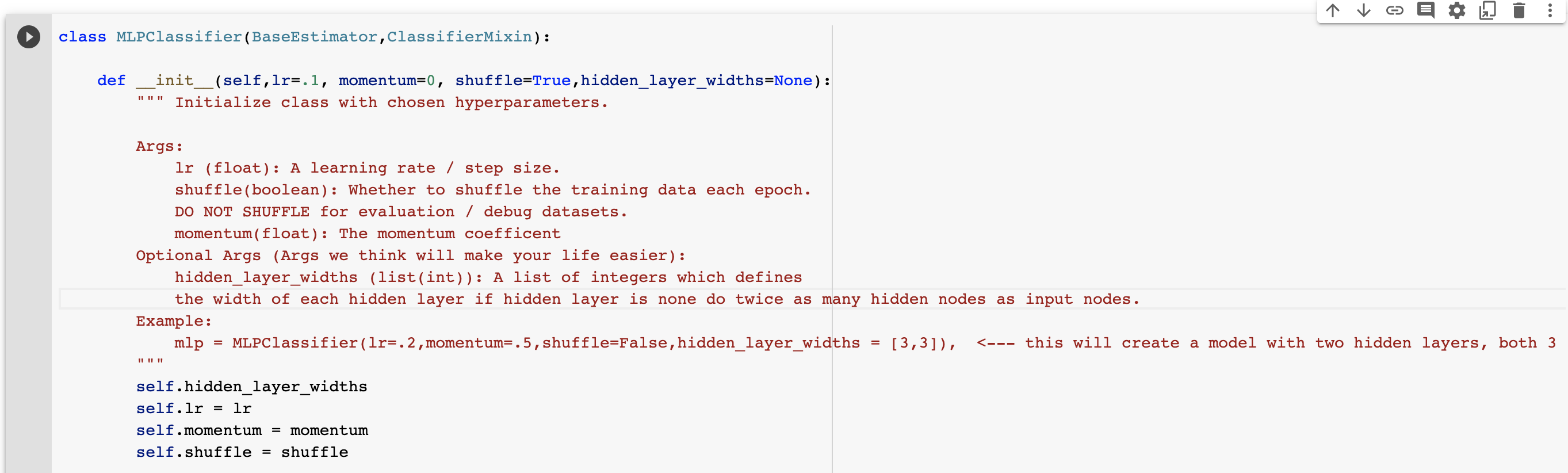

    from sklearn.base import BaseEstimator, ClassifierMixin

    class MLPClassifier(BaseEstimator, ClassifierMixin):

        def __init__(self, lr=.1, momentum=0, shuffle=True, hidden_layer_widths=None):
            """ Initialize class with chosen hyperparameters.

            Args:
                lr (float): A learning rate / step size.
                shuffle (boolean): Whether to shuffle the training data each epoch. DO NOT SHUFFLE for evaluation / debug datasets.
                momentum (float): The momentum coefficient
            Optional Args (Args we think will make your life easier):
                hidden_layer_widths (list(int)): A list of integers which defines the width of each hidden layer. If hidden layer is None, do twice as many hidden nodes as input nodes.
            Example:
                mlp = MLPClassifier(lr=.2, momentum=.5, shuffle=False, hidden_layer_widths=[3,3])
            """
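The constructor docstring says that when `hidden_layer_widths` is None the network should use twice as many hidden nodes as input nodes. Here is a small sketch of how the layer shapes and initial weights might be derived from that rule. The helper name `init_weights` and its parameters `n_inputs`, `n_outputs`, and `seed` are hypothetical, and storing the bias as an extra column is one convention among several.

```python
import numpy as np

def init_weights(n_inputs, n_outputs, hidden_layer_widths=None, seed=None):
    """Build one weight matrix per layer; the extra column holds the bias weight."""
    if hidden_layer_widths is None:
        # Default from the docstring: twice as many hidden nodes as inputs.
        hidden_layer_widths = [2 * n_inputs]
    sizes = [n_inputs, *hidden_layer_widths, n_outputs]
    rng = np.random.default_rng(seed)
    # Mean 0, variance 1, as the requirements ask.
    return [rng.normal(0.0, 1.0, size=(n_next, n_prev + 1))
            for n_prev, n_next in zip(sizes[:-1], sizes[1:])]

weights = init_weights(n_inputs=4, n_outputs=3)
print([w.shape for w in weights])  # [(8, 5), (3, 9)]
```

Since the number of inputs is only known once the data arrives, a call like this would most likely happen at the start of `fit()` rather than in `__init__`.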
