Question: I need some serious help with this decision tree algorithm for my machine learning class. Be sure to read the code requirements below, because I need to write some parts from scratch. Any and all help would be VERY appreciated. Thank you!

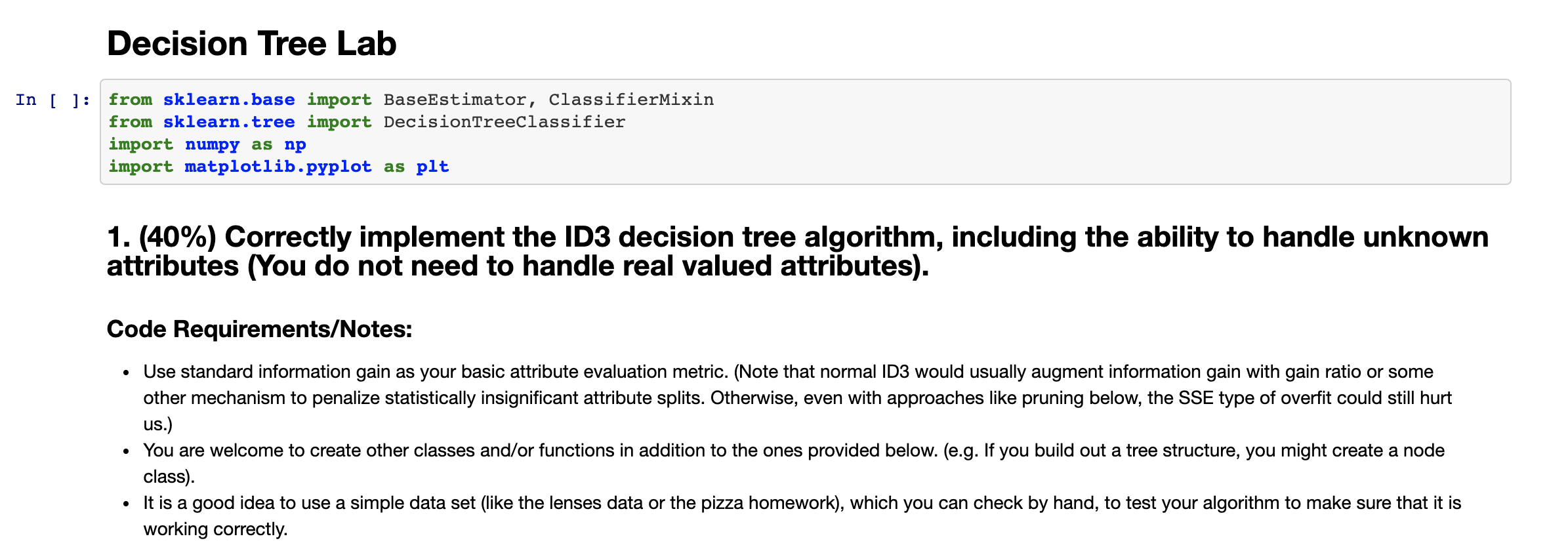

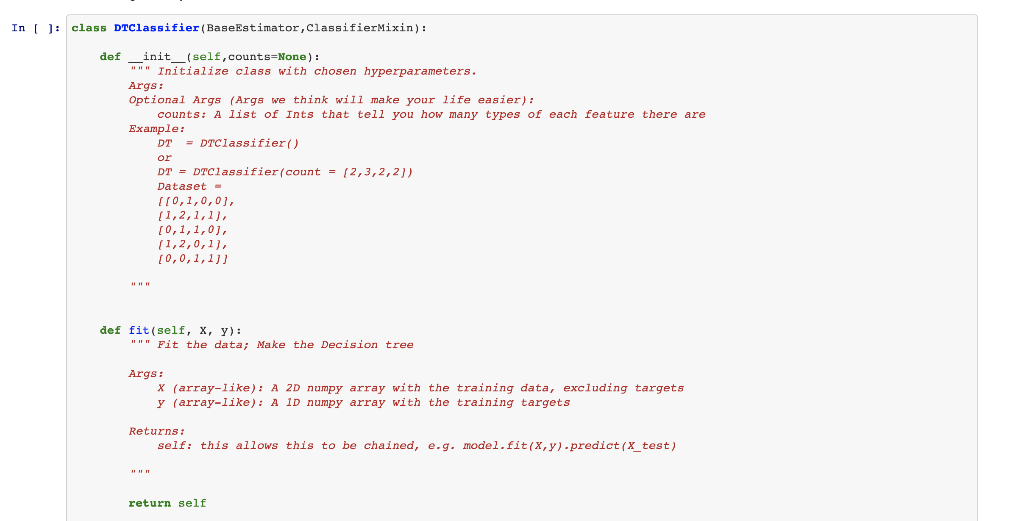

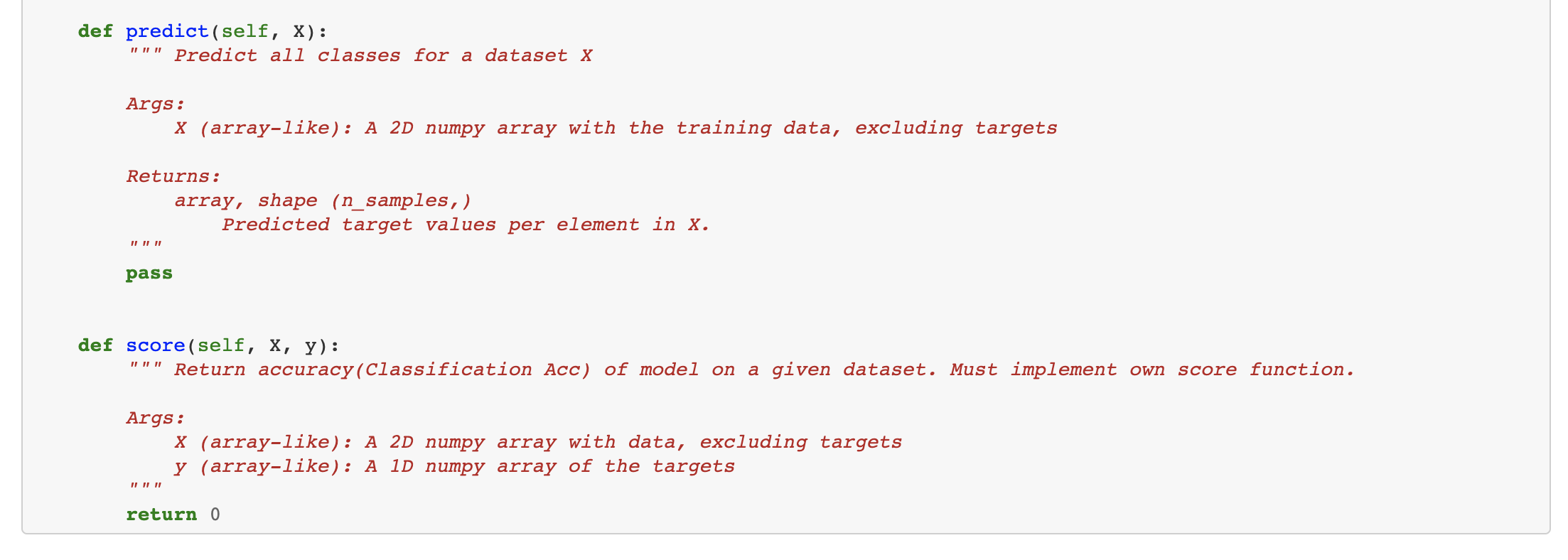

Decision Tree Lab

```python
from sklearn.base import BaseEstimator, ClassifierMixin
from sklearn.tree import DecisionTreeClassifier
import numpy as np
import matplotlib.pyplot as plt
```

1. (40%) Correctly implement the ID3 decision tree algorithm, including the ability to handle unknown attributes (you do not need to handle real-valued attributes).

Code Requirements/Notes:

- Use standard information gain as your basic attribute evaluation metric. (Note that normal ID3 would usually augment information gain with gain ratio or some other mechanism to penalize statistically insignificant attribute splits. Otherwise, even with approaches like pruning below, the SSE type of overfit could still hurt us.)
- You are welcome to create other classes and/or functions in addition to the ones provided below (e.g., if you build out a tree structure, you might create a node class).
- It is a good idea to use a simple data set (like the lenses data or the pizza homework), which you can check by hand, to test your algorithm to make sure that it is working correctly.

```python
class DTClassifier(BaseEstimator, ClassifierMixin):

    def __init__(self, counts=None):
        """Initialize class with chosen hyperparameters.

        Args:
        Optional Args (Args we think will make your life easier):
            counts: A list of ints that tells you how many types (distinct values)
                of each feature there are
        Example:
            DT = DTClassifier()
            or
            DT = DTClassifier(counts=[2, 3, 2, 2])
            Dataset = [[0, 1, 0, 0], [1, 2, 1, 1], [0, 1, 1, 0], [1, 2, 0, 1], [0, 0, 1, 1]]
        """
        # Store the hyperparameter under the same name so BaseEstimator.get_params() works
        self.counts = counts

    def fit(self, X, y):
        """Fit the data; make the decision tree.

        Args:
            X (array-like): A 2D numpy array with the training data, excluding targets
            y (array-like): A 1D numpy array with the training targets

        Returns:
            self: this allows this to be chained, e.g. model.fit(X, y).predict(X_test)
        """
        return self

    def predict(self, X):
        """Predict all classes for a dataset X.

        Args:
            X (array-like): A 2D numpy array with the data, excluding targets

        Returns:
            array, shape (n_samples,)
                Predicted target values per element in X.
        """
        pass

    def score(self, X, y):
        """Return accuracy (classification accuracy) of model on a given dataset.
        Must implement own score function.

        Args:
            X (array-like): A 2D numpy array with data, excluding targets
            y (array-like): A 1D numpy array of the targets
        """
        return 0
```
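For reference, the standard information gain that the requirements ask for can be written as follows, where $S$ is the set of training instances reaching a node, $p_c$ is the fraction of $S$ in class $c$, and $S_v$ is the subset of $S$ whose value for attribute $A$ is $v$. ID3 greedily splits on the attribute with the largest gain. Gain ratio, mentioned in the note, would additionally divide this by the split information; the lab does not require it.

$$
\mathrm{Info}(S) = -\sum_{c} p_c \log_2 p_c
\qquad
\mathrm{Gain}(S, A) = \mathrm{Info}(S) - \sum_{v \in \mathrm{values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Info}(S_v)
$$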
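A minimal sketch of ID3 under a few assumptions: features are nominal, a missing value (`None` or NaN) is mapped to a hypothetical sentinel `UNKNOWN` and treated as just another branch value, and a value never seen at a node during training falls back to that node's majority class. These are common choices for "unknown attributes", not necessarily what your lab specifies. The helper names (`_key`, `entropy`, `info_gain`, `Node`, `UNKNOWN`) are illustrative. To keep it runnable without scikit-learn, the class does not subclass `BaseEstimator`/`ClassifierMixin`; adding them back as in the skeleton should not change behavior, since `__init__` only stores `counts` (unused here). `predict` returns a plain list; wrap it in `np.array` if you want the array the docstring describes.

```python
from collections import Counter
import math

UNKNOWN = "?"  # hypothetical sentinel: None/NaN values become their own branch


def _key(v):
    """Map a feature value to a hashable branch key; None and NaN map to UNKNOWN."""
    if v is None or v != v:  # v != v is only true for NaN
        return UNKNOWN
    return v


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(rows, labels, attr):
    """Entropy of the labels minus the weighted entropy after splitting on column attr."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(_key(row[attr]), []).append(label)
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


class Node:
    """Leaf if attr is None; otherwise an internal node that splits on column attr."""

    def __init__(self, label, attr=None):
        self.label = label   # majority class of the training rows at this node
        self.attr = attr
        self.children = {}   # branch key -> child Node


class DTClassifier:
    def __init__(self, counts=None):
        self.counts = counts  # kept for parity with the skeleton; not used here

    def fit(self, X, y):
        rows = [list(r) for r in X]
        labels = list(y)
        self.tree_ = self._build(rows, labels, set(range(len(rows[0]))))
        return self

    def _build(self, rows, labels, attrs):
        majority = Counter(labels).most_common(1)[0][0]
        # Stop when the node is pure or every attribute has been used on this path.
        if len(set(labels)) == 1 or not attrs:
            return Node(majority)
        # Greedy ID3 step: split on the attribute with the highest information gain
        # (ties go to the lowest column index).
        best = max(sorted(attrs), key=lambda a: info_gain(rows, labels, a))
        node = Node(majority, best)
        splits = {}
        for row, label in zip(rows, labels):
            sub_rows, sub_labels = splits.setdefault(_key(row[best]), ([], []))
            sub_rows.append(row)
            sub_labels.append(label)
        for value, (sub_rows, sub_labels) in splits.items():
            node.children[value] = self._build(sub_rows, sub_labels, attrs - {best})
        return node

    def _predict_one(self, row):
        node = self.tree_
        while node.attr is not None:
            child = node.children.get(_key(row[node.attr]))
            if child is None:  # value not seen at this node in training
                break          # fall back to this node's majority class
            node = child
        return node.label

    def predict(self, X):
        return [self._predict_one(list(r)) for r in X]

    def score(self, X, y):
        """Classification accuracy: fraction of predictions equal to the targets."""
        y = list(y)
        preds = self.predict(X)
        return sum(p == t for p, t in zip(preds, y)) / len(y)
```

As a hand-checkable test, with `X = [[0, 0], [0, 1], [1, 0], [1, 1]]` and `y = [0, 0, 1, 1]` the root should split on feature 0, since its gain is 1 bit versus 0 bits for feature 1, and `DTClassifier().fit(X, y).score(X, y)` should be `1.0`.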
