Question:

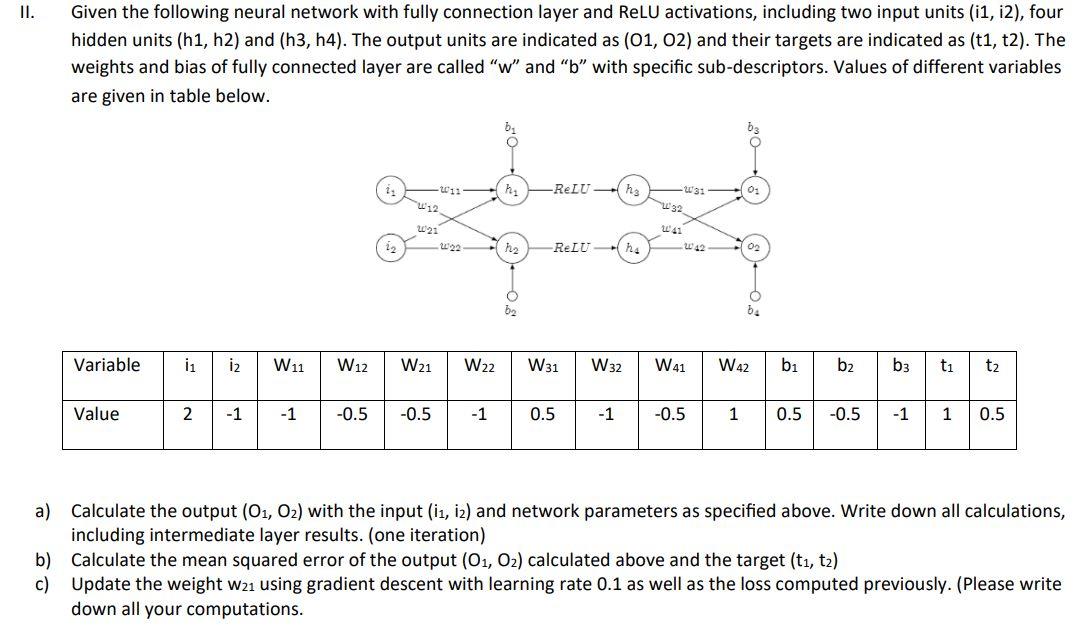

II. Given the following neural network with fully connected layers and ReLU activations, including two input units (i1, i2) and four hidden units (h1, h2) and (h3, h4). The output units are denoted (o1, o2) and their targets (t1, t2). The weights and biases of the fully connected layers are called "w" and "b" with specific subscripts. The values of the different variables are given in the table below.

Variable Value 1 12 2 -1 -1 -222 -0.5 -0.5 h W11 W12 W21 W22 W31 W32 -1 -ReLUhs -ReLU 0.5 -1 ba W41 W42 -0.5 b b 1 0.5 -0.5 b3 t t -1 1 0.5

a) Calculate the output (o1, o2) for the input (i1, i2) and the network parameters specified above. Write down all calculations, including the intermediate layer results (one iteration).
b) Calculate the mean squared error between the output (o1, o2) calculated above and the target (t1, t2).
c) Update the weight w21 using gradient descent with learning rate 0.1 and the loss computed previously. (Please write down all your computations.)

Step by Step Solution

There are three steps involved.
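A worked sketch of the three steps in symbols, assuming the layout suggested by the figure labels: (h1, h2) are the pre-activations of the first fully connected layer, h3 = ReLU(h1) and h4 = ReLU(h2), and (o1, o2) come from a second, linear fully connected layer. The index convention (w_jk is the weight into unit j from input k, so w21 connects i1 to h2) and the bias names b1 to b4 are assumptions and should be checked against the figure. With two outputs, the mean squared error coincides with the usual ½Σ convention.

$$
\begin{aligned}
h_1 &= w_{11} i_1 + w_{12} i_2 + b_1, & h_3 &= \mathrm{ReLU}(h_1),\\
h_2 &= w_{21} i_1 + w_{22} i_2 + b_2, & h_4 &= \mathrm{ReLU}(h_2),\\
o_1 &= w_{31} h_3 + w_{32} h_4 + b_3, & o_2 &= w_{41} h_3 + w_{42} h_4 + b_4,\\
E &= \tfrac{1}{2}\left[(o_1 - t_1)^2 + (o_2 - t_2)^2\right],\\
\mathrm{ReLU}'(x) &= \begin{cases} 1 & x > 0\\ 0 & x \le 0 \end{cases},\\
\frac{\partial E}{\partial w_{21}} &= \left[(o_1 - t_1)\, w_{32} + (o_2 - t_2)\, w_{42}\right] \mathrm{ReLU}'(h_2)\, i_1,\\
w_{21}^{\text{new}} &= w_{21} - 0.1\, \frac{\partial E}{\partial w_{21}}.
\end{aligned}
$$

Substituting the table values into these lines gives parts a), b) and c) in turn. If h2 ≤ 0, the ReLU blocks the gradient and w21 stays unchanged after the step.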

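A minimal Python sketch of parts a) to c), assuming this layout: a first fully connected layer (w11, w12 into h1; w21, w22 into h2; biases b1, b2), h3 = ReLU(h1), h4 = ReLU(h2), and a linear output layer (w31, w32 into o1; w41, w42 into o2; biases b3, b4). The layout, the bias names b1 to b4, the helper names `forward`, `mse` and `update_w21`, and every number in `params` are hypothetical placeholders, not the values from the question's table. Substitute the real values before use.

```python
# Sketch of parts a)-c) under an ASSUMED network layout (see lead-in).
# All numbers in `params` are HYPOTHETICAL placeholders, not the table values.


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of ReLU; we take 0 at x == 0 by convention.
    return 1.0 if x > 0 else 0.0


def forward(p: dict) -> dict:
    """Part a): forward pass, returning every intermediate value."""
    h1 = p["w11"] * p["i1"] + p["w12"] * p["i2"] + p["b1"]
    h2 = p["w21"] * p["i1"] + p["w22"] * p["i2"] + p["b2"]
    h3, h4 = relu(h1), relu(h2)
    o1 = p["w31"] * h3 + p["w32"] * h4 + p["b3"]
    o2 = p["w41"] * h3 + p["w42"] * h4 + p["b4"]
    return {"h1": h1, "h2": h2, "h3": h3, "h4": h4, "o1": o1, "o2": o2}


def mse(o1: float, o2: float, t1: float, t2: float) -> float:
    """Part b): mean squared error over the two outputs."""
    return ((o1 - t1) ** 2 + (o2 - t2) ** 2) / 2


def update_w21(p: dict, lr: float = 0.1) -> float:
    """Part c): one gradient-descent step on w21 (assumed: weight from i1 into h2)."""
    f = forward(p)
    # dE/do_j = o_j - t_j: the 1/2 of the mean cancels the 2 from the square.
    d_o1 = f["o1"] - p["t1"]
    d_o2 = f["o2"] - p["t2"]
    # w21 reaches the outputs only through h2 -> h4, so backpropagate via h4.
    d_h4 = d_o1 * p["w32"] + d_o2 * p["w42"]
    d_h2 = d_h4 * relu_grad(f["h2"])
    grad_w21 = d_h2 * p["i1"]
    return p["w21"] - lr * grad_w21


# Hypothetical example values (NOT from the question's table).
params = {
    "i1": 1.0, "i2": 2.0,
    "w11": 0.5, "w12": -0.5, "w21": 1.0, "w22": 0.5,
    "w31": 1.0, "w32": -1.0, "w41": 0.5, "w42": 1.0,
    "b1": 0.0, "b2": 0.5, "b3": 0.0, "b4": -0.5,
    "t1": 1.0, "t2": 0.5,
}

out = forward(params)  # o1 = -2.5, o2 = 2.0
loss = mse(out["o1"], out["o2"], params["t1"], params["t2"])  # 7.25
new_w21 = update_w21(params, lr=0.1)  # 0.5
```

With these placeholder values h1 is negative, so h3 = 0 and only h4 carries signal to the outputs. Because h2 > 0, the ReLU passes the gradient through to w21; had h2 been negative, the gradient would be zero and w21 would not change.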
