Question:

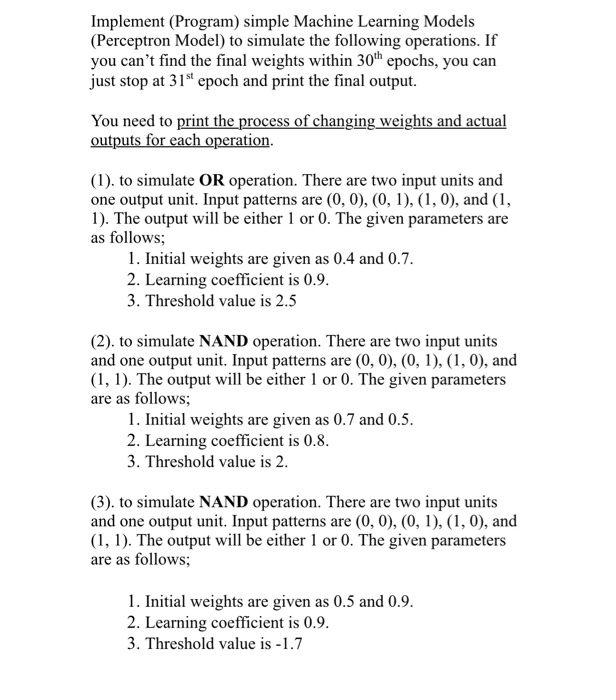

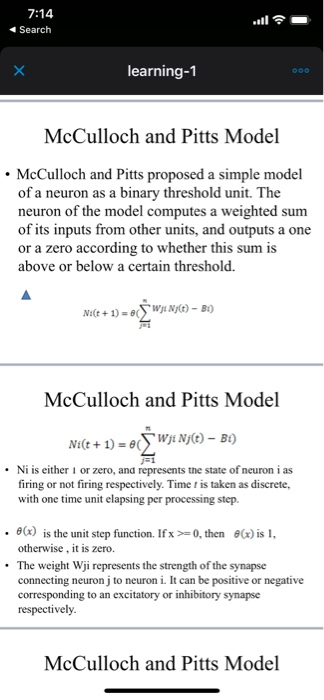

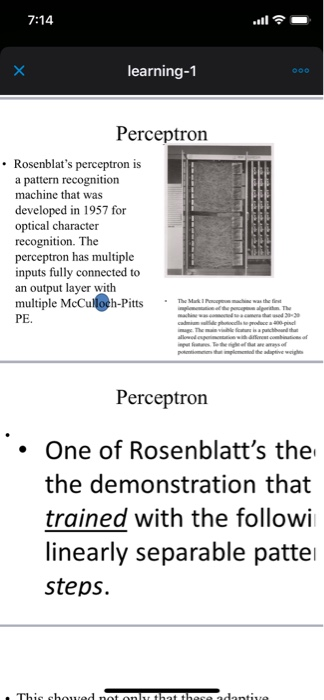

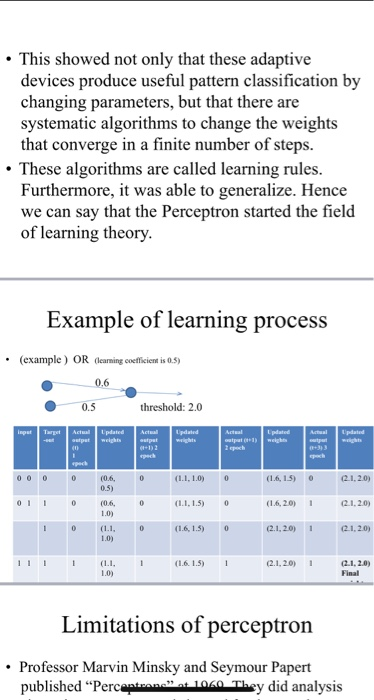

Implement (program) simple machine learning models (perceptron models) to simulate the following operations. If you can't find the final weights within 30 epochs, you can just stop at the 31st epoch and print the final output. You need to print the process of changing weights and the actual outputs for each operation.

(1) Simulate the OR operation. There are two input units and one output unit. Input patterns are (0, 0), (0, 1), (1, 0), and (1, 1). The output will be either 1 or 0. The given parameters are as follows:
1. Initial weights are 0.4 and 0.7.
2. Learning coefficient is 0.9.
3. Threshold value is 2.5.

(2) Simulate the NAND operation. There are two input units and one output unit. Input patterns are (0, 0), (0, 1), (1, 0), and (1, 1). The output will be either 1 or 0. The given parameters are as follows:
1. Initial weights are 0.7 and 0.5.
2. Learning coefficient is 0.8.
3. Threshold value is 2.

(3) Simulate the NAND operation. There are two input units and one output unit. Input patterns are (0, 0), (0, 1), (1, 0), and (1, 1). The output will be either 1 or 0. The given parameters are as follows:
1. Initial weights are 0.5 and 0.9.
2. Learning coefficient is 0.9.
3. Threshold value is -1.7.

McCulloch and Pitts Model

McCulloch and Pitts proposed a simple model of a neuron as a binary threshold unit. The neuron of the model computes a weighted sum of its inputs from other units, and outputs a one or a zero according to whether this sum is above or below a certain threshold.

$$N_i(t+1) = \Theta\Big(\sum_j W_{ji} N_j(t) - \theta_i\Big)$$

$N_i$ is either one or zero, and represents the state of neuron $i$ as firing or not firing, respectively. Time $t$ is taken as discrete, with one time unit elapsing per processing step. $\Theta(x)$ is the unit step function: if $x \ge 0$, then $\Theta(x)$ is 1; otherwise, it is zero. $\theta_i$ is the threshold of neuron $i$. The weight $W_{ji}$ represents the strength of the synapse connecting neuron $j$ to neuron $i$.
It can be positive or negative, corresponding to an excitatory or inhibitory synapse, respectively.

Perceptron

Rosenblatt's perceptron is a pattern recognition machine that was developed in 1957 for optical character recognition. The perceptron has multiple inputs fully connected to an output layer with multiple McCulloch-Pitts processing elements (PEs).

One of Rosenblatt's achievements was the demonstration that a perceptron trained with the following procedure learns linearly separable patterns in a finite number of steps. This showed not only that these adaptive devices produce useful pattern classification by changing parameters, but that there are systematic algorithms to change the weights that converge in a finite number of steps. These algorithms are called learning rules. Furthermore, it was able to generalize. Hence we can say that the perceptron started the field of learning theory.

Example of learning process (OR): learning coefficient 0.5, threshold 2.0. (The table of weight updates and actual outputs is not legible in the source.)

Limitations of perceptron

Professor Marvin Minsky and Seymour Papert published "Perceptrons" in 1969. They did analysis ...
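The three exercises in the question could be approached with something like the following Python sketch. It is not a verified solution and rests on several assumptions that the question text does not confirm: the unit fires when the weighted sum minus the threshold is at least zero, the threshold stays fixed during training, and the weights follow the common perceptron rule `w_i += rate * (target - actual) * x_i` after every pattern. The names `step`, `train_perceptron`, `OR_PATTERNS`, `NAND_PATTERNS`, and the `max_epochs=30` cap are illustrative choices, not part of the original material.

```python
# Truth tables as (inputs, target) pairs, in the order given in the question.
OR_PATTERNS = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
NAND_PATTERNS = [((0, 0), 1), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(x):
    """Unit step function (assumed convention): 1 if x >= 0, otherwise 0."""
    return 1 if x >= 0 else 0


def train_perceptron(patterns, weights, rate, threshold,
                     max_epochs=30, verbose=True):
    """Train a single threshold unit with a fixed threshold.

    Returns (final_weights, epochs_run, converged). An epoch counts as
    converged when every pattern is classified correctly without any update.
    """
    w = list(weights)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, target in patterns:
            net = sum(wi * xi for wi, xi in zip(w, inputs))
            actual = step(net - threshold)
            delta = target - actual
            before = [round(v, 4) for v in w]
            if delta != 0:
                errors += 1
                # Assumed update rule: only weights on active inputs change.
                w = [wi + rate * delta * xi for wi, xi in zip(w, inputs)]
            if verbose:
                after = [round(v, 4) for v in w]
                print(f"epoch {epoch:2d}  input {inputs}  target {target}  "
                      f"actual {actual}  weights {before} -> {after}")
        if errors == 0:
            return w, epoch, True
    return w, max_epochs, False


if __name__ == "__main__":
    cases = [
        ("(1) OR", OR_PATTERNS, (0.4, 0.7), 0.9, 2.5),
        ("(2) NAND", NAND_PATTERNS, (0.7, 0.5), 0.8, 2.0),
        ("(3) NAND", NAND_PATTERNS, (0.5, 0.9), 0.9, -1.7),
    ]
    for name, patterns, weights, rate, threshold in cases:
        print(name)
        final, epochs, converged = train_perceptron(
            patterns, weights, rate, threshold)
        status = "converged" if converged else "stopped without converging"
        print(f"{status} after {epochs} epochs, "
              f"final weights {[round(v, 4) for v in final]}\n")
```

Under these assumptions, case (2) cannot converge. For input (0, 0) the weighted sum is always 0, which is below the threshold 2, so the unit outputs 0 where NAND requires 1, and the weight update is multiplied by zero inputs. That is presumably why the question allows stopping after a fixed number of epochs. If the course material uses a strict comparison in the step function or also adapts the threshold, `step` and the update line would need to change accordingly.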
