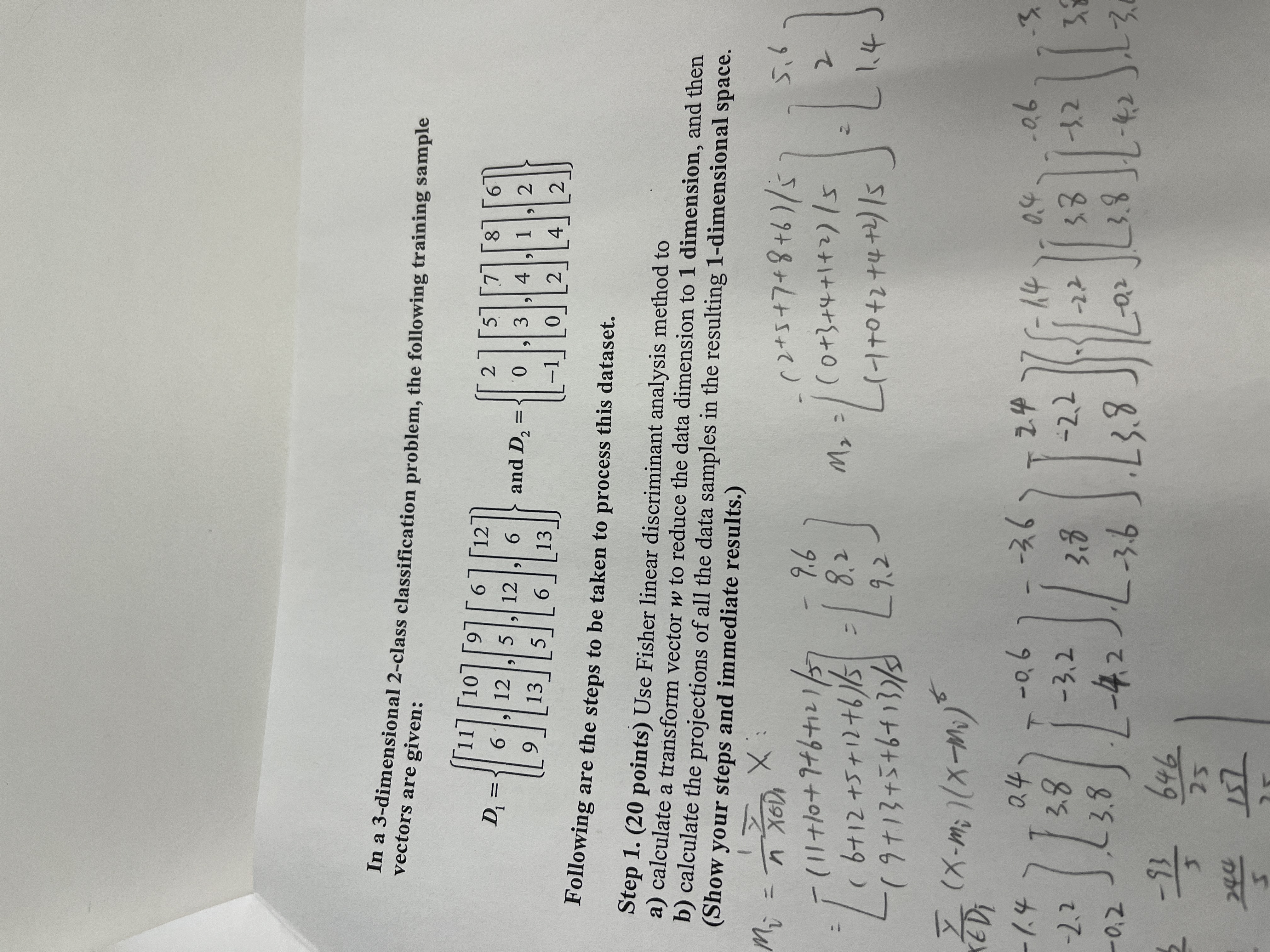

Question:

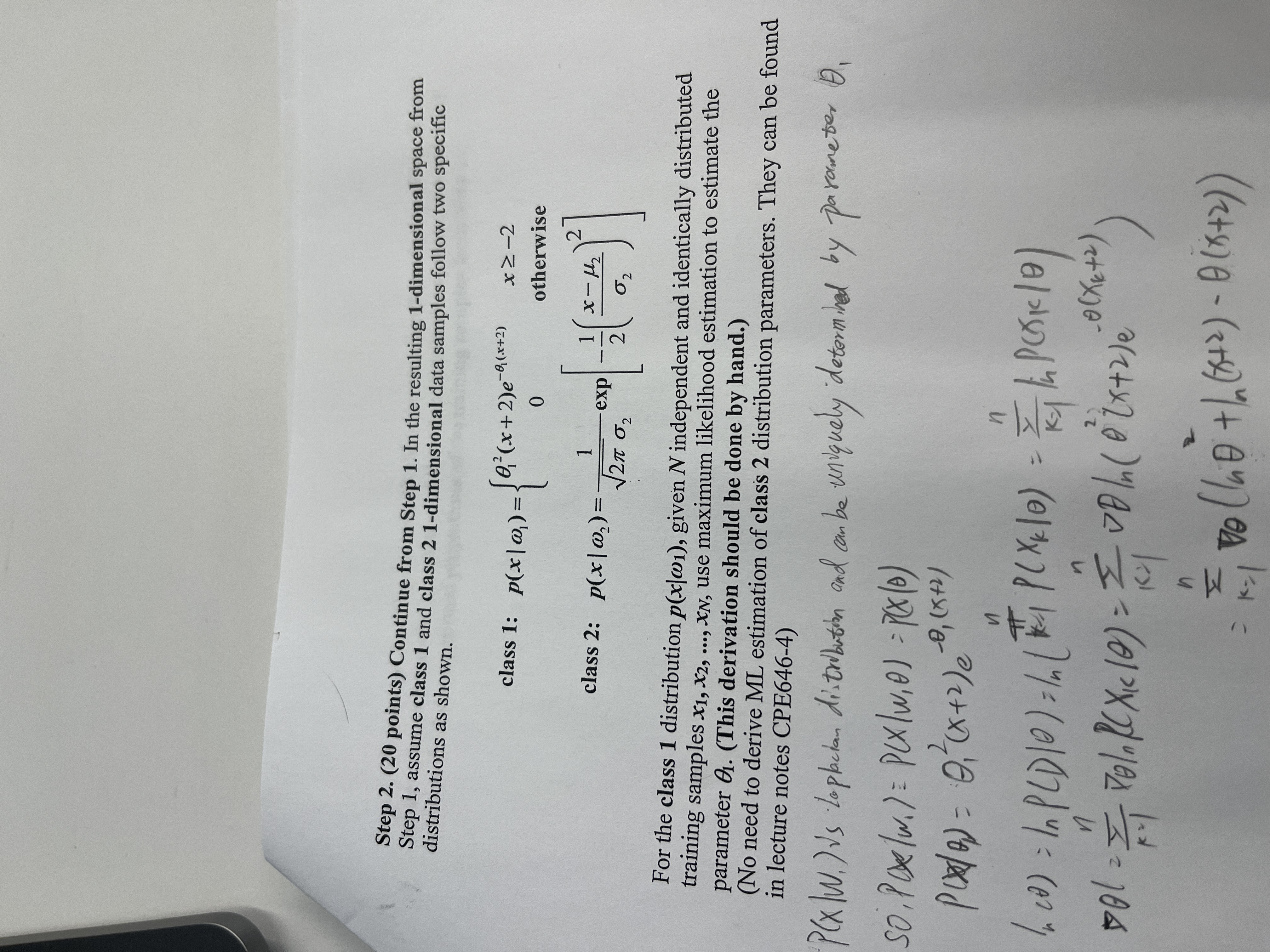

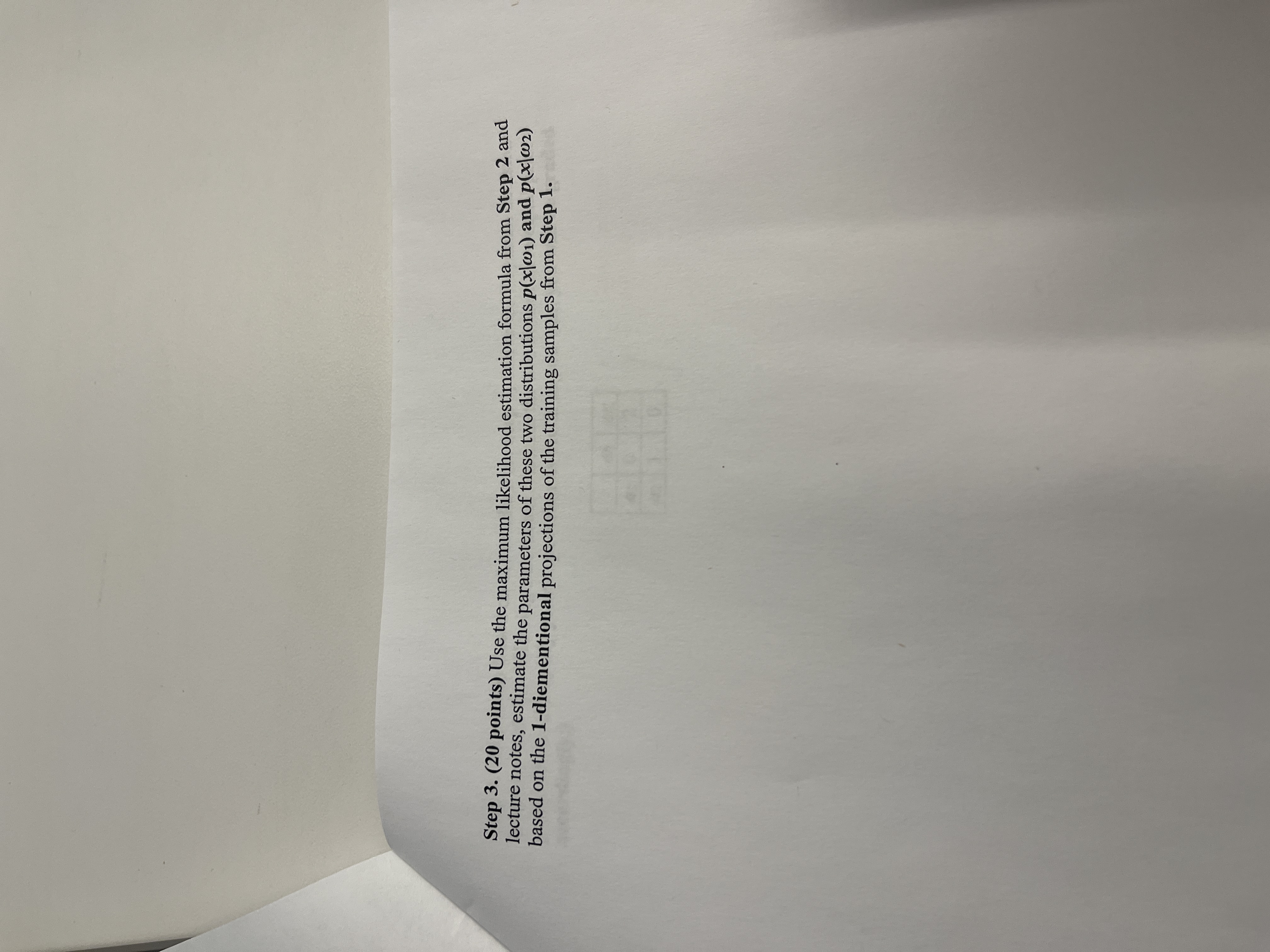

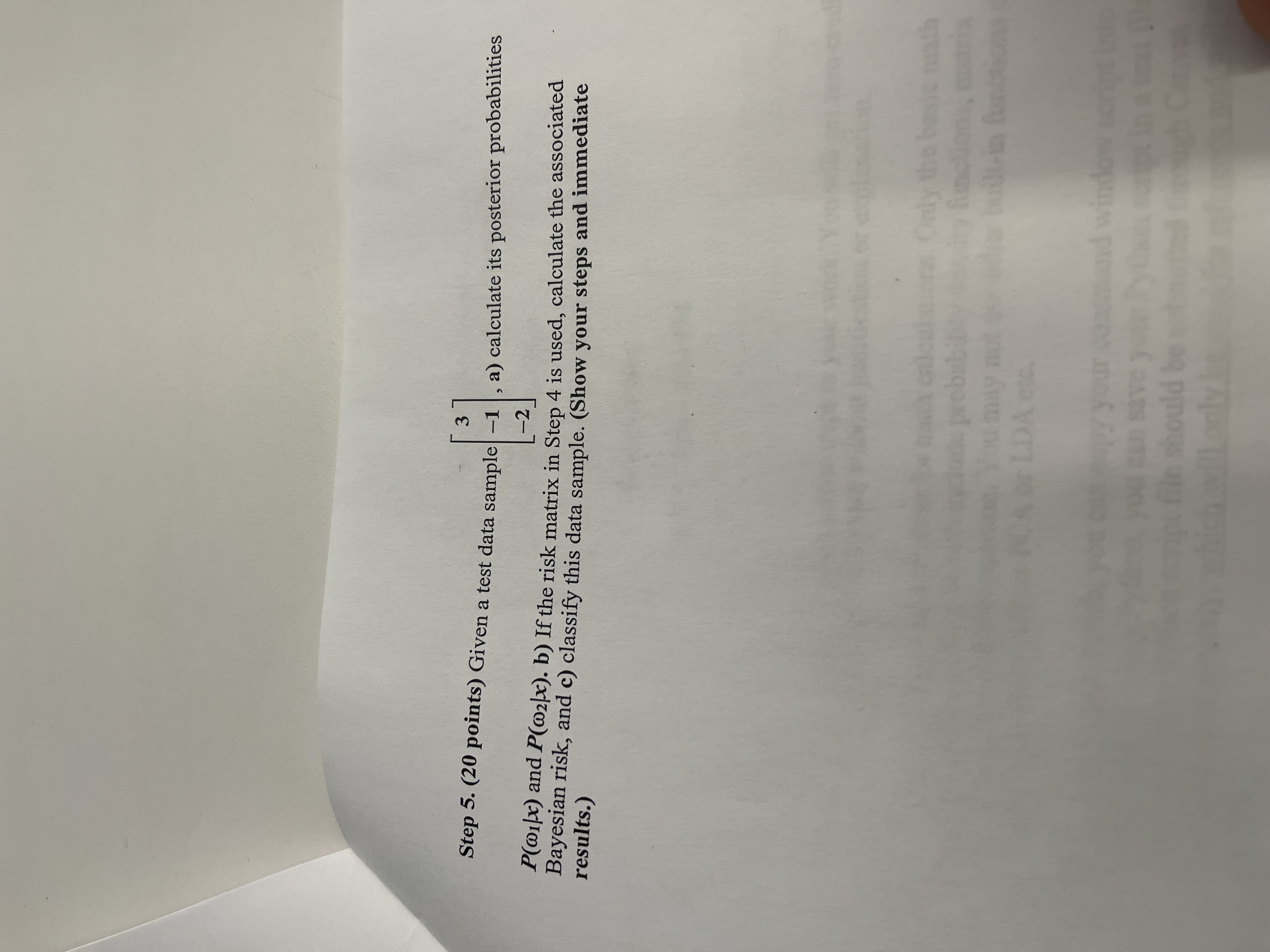

In a 3-dimensional 2-class classification problem, the following training sample vectors are given. The following steps are to be taken to process this dataset.

Step 1. (20 points) Use the Fisher linear discriminant analysis method to a) calculate a transform vector $w$ that reduces the data to 1 dimension, and then b) calculate the projections of all the data samples in the resulting 1-dimensional space. (Show your steps and intermediate results.)

Handwritten working for Step 1, as far as it is legible:

$$m_i = \frac{1}{n_i}\sum_{x \in D_i} x$$

$$m_1 = \begin{bmatrix} (2+5+7+8+6)/5 \\ (11+10+9+6+12)/5 \\ (6+12+5+12+6)/5 \end{bmatrix} = \begin{bmatrix} 5.6 \\ 9.6 \\ 8.2 \end{bmatrix}, \qquad m_2 = \begin{bmatrix} (0+3+4+1+2)/5 \\ (9+13+5+6+13)/5 \\ (-1+0+2+4+2)/5 \end{bmatrix} = \begin{bmatrix} 2 \\ 9.2 \\ 1.4 \end{bmatrix}$$

$$S_i = \sum_{x \in D_i} (x - m_i)(x - m_i)^T$$

The deviation entries that survive (for example $1.4,\ 0.4,\ -0.6,\ -3.6,\ 2.4$ and $-2.2,\ 3.8,\ -3.2,\ 3.8,\ -2.2$) are consistent with these means; the rest of the scatter-matrix computation is not legible.

Step 2. (20 points) Continue from Step 1. In the resulting 1-dimensional space from Step 1, assume the class 1 and class 2 1-dimensional data samples follow the two distributions below.

class 1: $p(x \mid \theta) = \theta\,(x+2)\,e^{-\theta(x+2)}$ for $x \ge -2$, and $0$ otherwise (any exponent on the leading $\theta$ is not legible in the source)

class 2: $p(x \mid \omega_2) = \dfrac{1}{\sqrt{2\pi\sigma^2}} \exp\!\left[-\dfrac{(x-\mu)^2}{2\sigma^2}\right]$

For the class 1 distribution $p(x \mid \omega_1)$, given $N$ independent and identically distributed training samples $x_1, x_2, \ldots, x_N$, use maximum likelihood estimation to estimate the parameter $\theta$. (This derivation should be done by hand.) (No need to derive the ML estimates of the class 2 distribution parameters. They can be found in lecture notes CPE646-4.)

Handwritten working for Step 2, as far as it is legible: $p(x \mid \omega_1)$ is a Laplacian distribution and can be uniquely determined by the parameter $\theta$, so $p(x \mid \omega_1) = p(x \mid \omega_1, \theta) = p(x \mid \theta)$. Then

$$l(\theta) = \ln p(D \mid \theta) = \ln \prod_{k=1}^{N} p(x_k \mid \theta) = \sum_{k=1}^{N} \ln p(x_k \mid \theta)$$

$$\nabla_\theta\, l = \sum_{k=1}^{N} \nabla_\theta \ln p(x_k \mid \theta) = \sum_{k=1}^{N} \nabla_\theta \ln\!\left[\theta\,(x_k+2)\,e^{-\theta(x_k+2)}\right] = \sum_{k=1}^{N} \nabla_\theta \left[\ln\theta + \ln(x_k+2) - \theta(x_k+2)\right]$$

Step 3. (20 points) Using the maximum likelihood estimation formula from Step 2 and the lecture notes, estimate the parameters of the two distributions $p(x \mid \omega_1)$ and $p(x \mid \omega_2)$ based on the 1-dimensional projections of the training samples from Step 1.

Step 4. (20 points) Based on the results from Step 3, derive the Bayesian decision boundary with the following risk matrix. Assume the two classes have equal prior probabilities. (Simplify your expression as much as you can; you will be graded accordingly.)

Risk matrix (rows are actions $\alpha_i$, columns are classes $\omega_j$): $\lambda(\alpha_1 \mid \omega_1) = 0$, $\lambda(\alpha_1 \mid \omega_2) = 2$, $\lambda(\alpha_2 \mid \omega_2) = 0$; the entry $\lambda(\alpha_2 \mid \omega_1)$ is not legible in the source.

Step 5. (20 points) Given a test data sample (the vector is only partly legible in the source; the entry $-1$ survives), a) calculate its posterior probabilities $P(\omega_1 \mid x)$ and $P(\omega_2 \mid x)$, b) if the risk matrix in Step 4 is used, calculate the associated Bayesian risk, and c) classify this data sample. (Show your steps and intermediate results.)

Only basic math functions and matrix operations may be used; built-in functions for LDA etc. may not be used. (The remainder of this instruction, which concerns the submitted script file, is not legible.)
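Step 1 can be sketched in plain Python using only basic arithmetic and a hand-written linear solver, in keeping with the restriction on built-in LDA functions. This is a minimal sketch under stated assumptions, not the expected solution: the sample values are an assumption, read off the sums in the handwritten class means, and should be checked against the original data. The helper names `mean`, `scatter`, `solve`, and `project` are hypothetical. The direction is taken as $w = S_W^{-1}(m_1 - m_2)$, the usual two-class Fisher criterion solution.

```python
# ASSUMPTION: sample values reconstructed from the sums in the handwritten
# means; verify them against the original training data before relying on them.
X1 = [(2, 11, 6), (5, 10, 12), (7, 9, 5), (8, 6, 12), (6, 12, 6)]   # class 1
X2 = [(0, 9, -1), (3, 13, 0), (4, 5, 2), (1, 6, 4), (2, 13, 2)]     # class 2


def mean(X):
    """Component-wise mean of a list of sample vectors."""
    n = len(X)
    return [sum(col) / n for col in zip(*X)]


def scatter(X, m):
    """Scatter matrix S = sum over samples of (x - m)(x - m)^T."""
    d = len(m)
    S = [[0.0] * d for _ in range(d)]
    for x in X:
        dev = [xi - mi for xi, mi in zip(x, m)]
        for i in range(d):
            for j in range(d):
                S[i][j] += dev[i] * dev[j]
    return S


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))  # pivot row
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        tail = sum(M[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (M[r][n] - tail) / M[r][r]
    return z


def project(X, w):
    """1-dimensional projections y = w^T x for every sample in X."""
    return [sum(wi * xi for wi, xi in zip(w, x)) for x in X]


m1, m2 = mean(X1), mean(X2)
S1, S2 = scatter(X1, m1), scatter(X2, m2)
# Within-class scatter S_W = S_1 + S_2
Sw = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(S1, S2)]
# Fisher direction: solve S_W w = m1 - m2 instead of forming the inverse
w = solve(Sw, [a - b for a, b in zip(m1, m2)])
y1, y2 = project(X1, w), project(X2, w)

print("m1 =", m1, " m2 =", m2)
print("w  =", w)
print("class 1 projections:", y1)
print("class 2 projections:", y2)
```

The projections `y1` and `y2` are the 1-dimensional samples that the parameter estimates in Step 3 would be computed from. Any nonzero rescaling of `w` gives an equivalent Fisher direction, so a normalized `w` is equally valid.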
