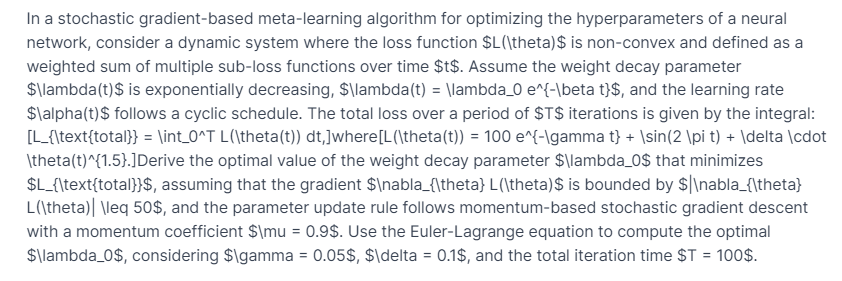

Question: In a stochastic gradient-based meta-learning algorithm for optimizing the hyperparameters of a neural network, consider a dynamic system where the loss function $L(\theta)$ is non-convex and defined as a weighted sum of multiple sub-loss functions over time $t$. Assume the weight decay parameter $\alpha(t)$ follows a cyclic schedule. The total loss over a period of $T$ iterations is given by the integral:

$$L_{\text{total}} = \int_0^T L(\theta(t))\,dt, \quad \text{where } L(\theta(t)) = \gamma \,\ldots\, \delta \cdot \theta(t) \,\ldots$$
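To make the setup concrete, here is a minimal Python sketch of the two ingredients the question names: a cyclic schedule for the weight decay parameter and a numerical approximation of the total-loss integral. The loss expression in the question is cut off, so `placeholder_loss` is purely a stand-in. The cosine shape of the cycle, the bounds `alpha_min` and `alpha_max`, and all function names are assumptions for illustration, not part of the original problem.

```python
import math


def cyclic_alpha(t, period, alpha_min=0.0, alpha_max=1e-2):
    """Hypothetical cosine cycle: alpha_max at t = 0, alpha_min at t = period / 2."""
    phase = 2.0 * math.pi * (t % period) / period
    return alpha_min + 0.5 * (alpha_max - alpha_min) * (1.0 + math.cos(phase))


def total_loss(loss_at, T, n_steps=1000):
    """Approximate the integral of loss_at(t) over [0, T] with the trapezoidal rule."""
    dt = T / n_steps
    values = [loss_at(i * dt) for i in range(n_steps + 1)]
    return dt * (sum(values) - 0.5 * (values[0] + values[-1]))


def placeholder_loss(t, period=10.0):
    """Stand-in loss only: the question's definition of L(theta(t)) is truncated."""
    theta = math.exp(-0.1 * t)  # assumed toy parameter trajectory
    return theta ** 2 + cyclic_alpha(t, period) * theta ** 2


if __name__ == "__main__":
    # Total loss over one assumed period of T = 10 time units.
    print(total_loss(placeholder_loss, T=10.0))
```

Once the full form of $L(\theta(t))$ is known, it can be passed to `total_loss` in place of `placeholder_loss`; the integration routine does not depend on the particular loss.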
