Question: just work the coding parts 5, 6, and 7.

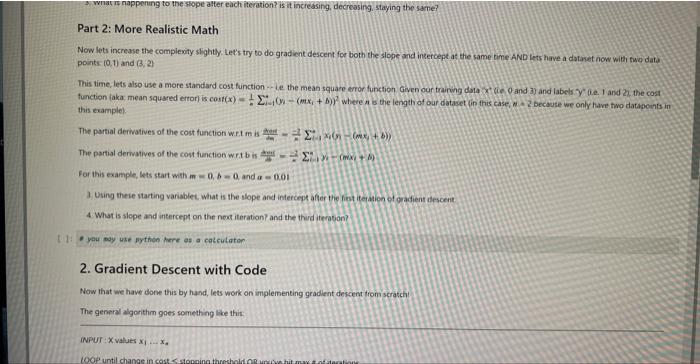

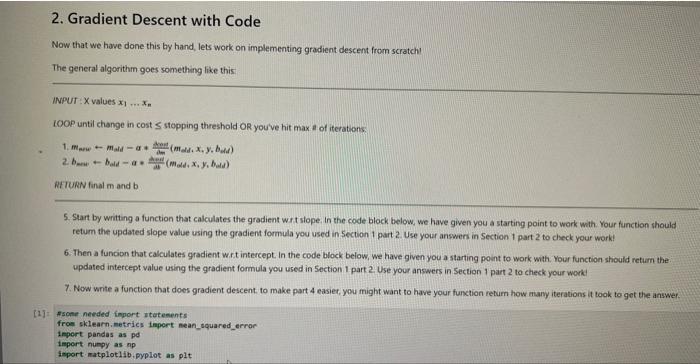

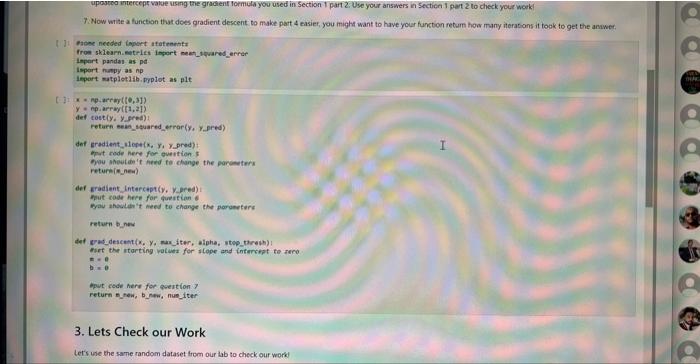

**Part 2: More Realistic Math**

Now let's increase the complexity slightly. Let's try to do gradient descent for both the slope and the intercept at the same time, AND let's have a dataset now with two data points, (0, 1) and (3, 2). This time, let's also use a more standard cost function, i.e. the mean squared error function. Given our training data $x$ (i.e. 0 and 3) and labels $y$ (i.e. 1 and 2), the cost function (aka mean squared error) is

$$\text{cost}(m, b) = \frac{1}{n}\sum_{i=1}^{n}\bigl(y_i - (m x_i + b)\bigr)^2$$

where $n$ is the length of our dataset (in this case, $n = 2$ because we only have two data points in this example). The partial derivative of the cost function w.r.t. $b$ is

$$\frac{\partial\,\text{cost}}{\partial b} = -\frac{2}{n}\sum_{i=1}^{n}\bigl(y_i - (m x_i + b)\bigr)$$

For this example, let's start with $m = 0$, $b = 0$, and $\alpha = 0.01$.

3. Using these starting variables, what are the slope and intercept after the first iteration of gradient descent?
4. What are the slope and intercept on the next iteration? And on the third iteration? You may use Python here as a calculator.

**2. Gradient Descent with Code**

Now that we have done this by hand, let's work on implementing gradient descent from scratch! The general algorithm goes something like this:

```
INPUT: x values x_1 ... x_n, labels y_1 ... y_n
LOOP until change in cost < stopping threshold OR you've hit max # of iterations:
    1. m_new = m_old - α · ∂cost/∂m (m_old, x, y, b_old)
    2. b_new = b_old - α · ∂cost/∂b (m_old, x, y, b_old)
RETURN final m and b
```

5. Start by writing a function that calculates the gradient w.r.t. slope. In the code block below, we have given you a starting point to work with. Your function should return the updated slope value using the gradient formula you used in Section 1 part 2. Use your answers in Section 1 part 2 to check your work!
6. Then write a function that calculates the gradient w.r.t. intercept. In the code block below, we have given you a starting point to work with. Your function should return the updated intercept value using the gradient formula you used in Section 1 part 2. Use your answers in Section 1 part 2 to check your work!
7. Now write a function that does gradient descent. To make part 4 easier, you might want to have your function return how many iterations it took to get the answer.

```python
# some needed import statements
from sklearn.metrics import mean_squared_error
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

# … (starter code for questions 5 and 6)
#     return b_new


def grad_descent(x, y, max_iter, alpha, step_thresh):
    # set the starting values for slope and intercept to zero
    m = 0
    b = 0
    # put code here for question 7
    return m_new, b_new, num_iter
```

**3. Let's Check Our Work**

Let's use the same random dataset from our lab to check our work.
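
Only the derivative with respect to $b$ is legible in the question as given; the slope version needed for questions 5 and 7 follows from the same chain-rule step, with an extra factor of $x_i$ coming out of the inner term. Working questions 3 and 4 by hand from $m = 0$, $b = 0$, $\alpha = 0.01$ on the points (0, 1) and (3, 2) (so $-2/n = -1$) gives reference values for checking the code. These are hand calculations, so they are worth re-running in Python.

$$
\begin{aligned}
\frac{\partial\,\text{cost}}{\partial m} &= -\frac{2}{n}\sum_{i=1}^{n} x_i\bigl(y_i - (m x_i + b)\bigr) \\
\frac{\partial\,\text{cost}}{\partial b} &= -\frac{2}{n}\sum_{i=1}^{n}\bigl(y_i - (m x_i + b)\bigr)
\end{aligned}
$$

| iteration | residuals $y_i - (m x_i + b)$ before the step | $\partial\text{cost}/\partial m$ | $\partial\text{cost}/\partial b$ | $m$ after | $b$ after |
|---|---|---|---|---|---|
| 1 | 1, 2 | -6 | -3 | 0.06 | 0.03 |
| 2 | 0.97, 1.79 | -5.37 | -2.76 | 0.1137 | 0.0576 |
| 3 | 0.9424, 1.6013 | -4.8039 | -2.5437 | 0.161739 | 0.083037 |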
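
For question 5, a minimal NumPy sketch. The starter signature for this function did not survive in the question text, so the name `update_m` and its argument order (mirroring `(m_old, x, y, b_old)` from the pseudocode, plus `alpha`) are assumptions. As the question asks, it returns the updated slope rather than the raw gradient.

```python
import numpy as np


def update_m(m_old, x, y, b_old, alpha):
    """Hypothetical helper: one gradient-descent step for the slope m under the MSE cost."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    residual = y - (m_old * x + b_old)          # y_i - (m x_i + b)
    grad_m = (-2.0 / n) * np.sum(x * residual)  # d cost / d m
    return float(m_old - alpha * grad_m)        # updated slope


# First iteration from m = 0, b = 0, alpha = 0.01 on the points (0, 1) and (3, 2)
print(update_m(0.0, [0, 3], [1, 2], 0.0, 0.01))
```

Called with $m = 0.06$, $b = 0.03$ it should give the second-iteration slope, 0.1137.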
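
For question 6, the intercept version under the same assumptions: `update_b` and its argument order are hypothetical, chosen to match the pseudocode's `(m_old, x, y, b_old)` plus `alpha`, and the return value is the updated intercept, consistent with the `return b_new` left in the starter code.

```python
import numpy as np


def update_b(m_old, x, y, b_old, alpha):
    """Hypothetical helper: one gradient-descent step for the intercept b under the MSE cost."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    residual = y - (m_old * x + b_old)      # y_i - (m x_i + b)
    grad_b = (-2.0 / n) * np.sum(residual)  # d cost / d b
    b_new = b_old - alpha * grad_b
    return float(b_new)


# First iteration from m = 0, b = 0, alpha = 0.01 on the points (0, 1) and (3, 2)
print(update_b(0.0, [0, 3], [1, 2], 0.0, 0.01))
```

Note that both updates take `m_old` and `b_old`, so in a loop both should be computed before either value is overwritten.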
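
For question 7, a sketch that wires both updates into the loop from the pseudocode. It keeps the `grad_descent(x, y, max_iter, alpha, step_thresh)` signature and the `m_new, b_new, num_iter` return from the starter stub (the function name was read from a garbled line, so treat it as approximate). To stay self-contained it computes both gradients inline, evaluating them at the old $(m, b)$ before updating either, which is the simultaneous update the pseudocode describes. "Change in cost" is interpreted here as the absolute difference in MSE between consecutive iterations.

```python
import numpy as np


def grad_descent(x, y, max_iter, alpha, step_thresh):
    """Fit y = m*x + b by batch gradient descent on the mean squared error.

    Stops when the absolute change in cost drops below step_thresh or after
    max_iter iterations, and returns (m_new, b_new, num_iter).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)

    # set the starting values for slope and intercept to zero
    m_new, b_new = 0.0, 0.0
    cost = np.mean((y - (m_new * x + b_new)) ** 2)
    num_iter = 0

    while num_iter < max_iter:
        # both gradients use the current (old) m and b: a simultaneous update
        residual = y - (m_new * x + b_new)
        grad_m = (-2.0 / n) * np.sum(x * residual)
        grad_b = (-2.0 / n) * np.sum(residual)
        m_new -= alpha * grad_m
        b_new -= alpha * grad_b
        num_iter += 1

        new_cost = np.mean((y - (m_new * x + b_new)) ** 2)
        if abs(cost - new_cost) < step_thresh:
            break  # change in cost fell below the stopping threshold
        cost = new_cost

    return float(m_new), float(b_new), num_iter


# Three iterations with the threshold disabled should match the hand calculations
m, b, k = grad_descent([0, 3], [1, 2], max_iter=3, alpha=0.01, step_thresh=0.0)
print(m, b, k)
```

With a generous `max_iter` and a small `step_thresh` (e.g. `100000` and `1e-12`), the result should approach the line through both points, $m = 1/3$ and $b = 1$, and `num_iter` reports how many steps that took.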
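
For section 3, the lab's random dataset is not included in the question, so the snippet below generates a hypothetical stand-in (the seed, the true slope 2, intercept 1, and noise level are all made up) and compares a fitted $(m, b)$ against NumPy's closed-form least-squares line from `np.polyfit`. The helper name `compare_to_least_squares` is illustrative; scikit-learn's `mean_squared_error`, already imported in the starter cell, could replace the hand-written MSE.

```python
import numpy as np


def compare_to_least_squares(m_gd, b_gd, x, y):
    """Hypothetical helper: compare a fitted (m, b) with numpy's least-squares line."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    m_ls, b_ls = np.polyfit(x, y, deg=1)  # coefficients come highest power first
    mse_gd = float(np.mean((y - (m_gd * x + b_gd)) ** 2))
    mse_ls = float(np.mean((y - (m_ls * x + b_ls)) ** 2))
    return {"m_ls": float(m_ls), "b_ls": float(b_ls), "mse_gd": mse_gd, "mse_ls": mse_ls}


# Hypothetical stand-in for the lab's random dataset: y = 2x + 1 plus Gaussian noise
rng = np.random.default_rng(0)
x_demo = rng.uniform(0, 10, size=50)
y_demo = 2.0 * x_demo + 1.0 + rng.normal(0.0, 1.0, size=50)

# (0, 0) is just a placeholder; pass the m and b returned by gradient descent instead
print(compare_to_least_squares(0.0, 0.0, x_demo, y_demo))
```

Since least squares minimizes the MSE exactly, `mse_gd` should never fall below `mse_ls`; a converged gradient-descent fit should land very close to it, with `m` and `b` near `m_ls` and `b_ls`.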
