Question: Let D = {(x, y),..., (In, Yn)} where xi R and y R be the training data that you are given. As you have

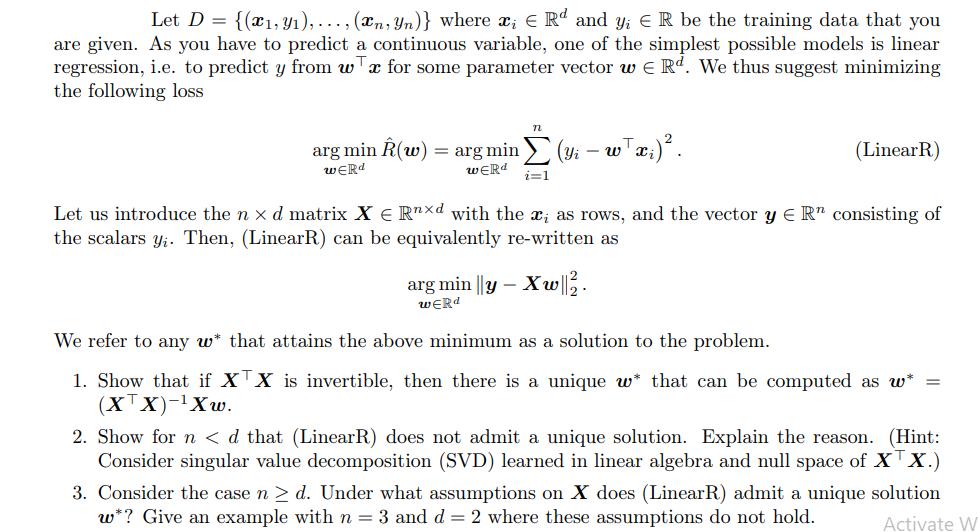

Let $D = \{(x_1, y_1), \dots, (x_n, y_n)\}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \mathbb{R}$, be the training data that you are given. As you have to predict a continuous variable, one of the simplest possible models is linear regression, i.e. to predict $y$ from $w^\top x$ for some parameter vector $w \in \mathbb{R}^d$. We thus suggest minimizing the following loss:

$$\arg\min_{w \in \mathbb{R}^d} \ell(w) = \arg\min_{w \in \mathbb{R}^d} \sum_{i=1}^{n} \left(y_i - w^\top x_i\right)^2. \tag{LinearR}$$

Let us introduce the $n \times d$ matrix $X \in \mathbb{R}^{n \times d}$ with the $x_i^\top$ as rows, and the vector $y \in \mathbb{R}^n$ consisting of the scalars $y_i$. Then (LinearR) can be equivalently rewritten as

$$\arg\min_{w \in \mathbb{R}^d} \|y - Xw\|_2^2.$$

We refer to any $w^*$ that attains the above minimum as a solution to the problem.

1. Show that if $X^\top X$ is invertible, then there is a unique $w^*$, which can be computed as $w^* = (X^\top X)^{-1} X^\top y$.
2. Show that for $n < d$, (LinearR) does not admit a unique solution, and explain the reason. (Hint: consider the singular value decomposition (SVD) from linear algebra and the null space of $X^\top X$.)
3. Consider the case $n \ge d$. Under what assumptions on $X$ does (LinearR) admit a unique solution $w^*$? Give an example with $n = 3$ and $d = 2$ where these assumptions do not hold.
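
For Part 1, one standard route (a sketch, not an official solution) is to expand the matrix form of the loss and set its gradient to zero, which yields the normal equations:

```latex
\begin{aligned}
\ell(w) &= \|y - Xw\|_2^2 = y^\top y - 2\,w^\top X^\top y + w^\top X^\top X\, w,\\
\nabla_w \ell(w) &= -2\,X^\top y + 2\,X^\top X\, w = 0
  \;\Longrightarrow\; X^\top X\, w = X^\top y,\\
\nabla_w^2 \ell(w) &= 2\,X^\top X.
\end{aligned}
```

Since $w^\top X^\top X\, w = \|Xw\|_2^2 \ge 0$, the Hessian $2X^\top X$ is always positive semidefinite. If $X^\top X$ is also invertible, it is positive definite, so $\ell$ is strictly convex and the normal equations have exactly one solution, $w^* = (X^\top X)^{-1} X^\top y$.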
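
The closed form from Part 1 can also be checked numerically. The sketch below assumes NumPy and uses randomly generated data; the sizes `n = 20`, `d = 3` and the helper names `loss_sum`, `loss_matrix` and `closed_form` are hypothetical, chosen only for illustration. It checks that the sum form and the matrix form of (LinearR) agree, and that solving the normal equations matches NumPy's least-squares routine `np.linalg.lstsq`.

```python
import numpy as np

def loss_sum(w, X, y):
    """(LinearR) written as a sum over the training pairs (x_i, y_i)."""
    return sum((y_i - w @ x_i) ** 2 for x_i, y_i in zip(X, y))

def loss_matrix(w, X, y):
    """The same loss in matrix form: ||y - Xw||_2^2."""
    r = y - X @ w
    return float(r @ r)

def closed_form(X, y):
    """w* = (X^T X)^{-1} X^T y, obtained by solving X^T X w = X^T y
    rather than forming the inverse explicitly."""
    return np.linalg.solve(X.T @ X, X.T @ y)

rng = np.random.default_rng(0)
n, d = 20, 3                      # n >= d; a Gaussian X has full column rank almost surely
X = rng.normal(size=(n, d))
y = rng.normal(size=n)

w_star = closed_form(X, y)
w_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)   # reference least-squares solution

w_test = rng.normal(size=d)       # an arbitrary point at which to compare the two loss forms
print(np.allclose(w_star, w_lstsq))
print(np.isclose(loss_sum(w_test, X, y), loss_matrix(w_test, X, y)))
```

Both lines print `True`. Using `np.linalg.solve` on the normal equations avoids computing $(X^\top X)^{-1}$ explicitly, which is generally the more numerically stable choice.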
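
For Part 2, the following NumPy sketch (random data, hypothetical sizes `n = 3`, `d = 5`) illustrates the null-space argument suggested by the hint. Because $\operatorname{rank}(X^\top X) = \operatorname{rank}(X) \le n < d$, the SVD of $X$ leaves at least $d - n$ right singular vectors $v$ with $Xv = 0$. Adding any multiple of such a $v$ to a minimizer changes neither $Xw$ nor the loss.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 3, 5                       # fewer samples than features
X = rng.normal(size=(n, d))
y = rng.normal(size=n)

# rank(X^T X) = rank(X) <= n < d, so the d x d matrix X^T X is singular.
print(np.linalg.matrix_rank(X))   # at most n = 3

# Full SVD: X = U S V^T with Vt of shape (d, d). The rows of Vt beyond
# rank(X) span the null space of X, which equals the null space of X^T X.
U, s, Vt = np.linalg.svd(X)       # full_matrices=True is the default
r = int(np.sum(s > 1e-10))        # numerical rank of X
null_basis = Vt[r:].T             # shape (d, d - r)

# One particular minimizer: the minimum-norm solution via the pseudoinverse.
w_star = np.linalg.pinv(X) @ y

# Shift it along a null-space direction to get a different candidate.
v = null_basis[:, 0]
w_other = w_star + 2.5 * v

def loss(w):
    """||y - Xw||_2^2 for the data above."""
    r_vec = y - X @ w
    return float(r_vec @ r_vec)

print(np.allclose(X @ v, 0))                    # v lies in the null space of X
print(np.isclose(loss(w_star), loss(w_other)))  # both attain the same loss
print(np.allclose(w_star, w_other))             # yet they are different vectors
```

Here `np.linalg.pinv` selects the minimum-norm minimizer, but every vector of the form `w_star + null_basis @ c` attains the same loss, so the minimizer is not unique.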
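
For Part 3, one standard answer is that (LinearR) has a unique solution exactly when $X$ has full column rank, $\operatorname{rank}(X) = d$, which is equivalent to $X^\top X$ being invertible. The NumPy sketch below uses a hypothetical $3 \times 2$ matrix `X_bad`, whose second column is twice its first, so the assumption fails. It contrasts this with a full-rank `X_good`; the target vector `y` is arbitrary.

```python
import numpy as np

# Hypothetical example with n = 3, d = 2: column 2 = 2 * column 1,
# so the columns are linearly dependent and rank(X_bad) = 1 < d.
X_bad = np.array([[1.0, 2.0],
                  [2.0, 4.0],
                  [3.0, 6.0]])
# For contrast: a 3 x 2 matrix with linearly independent columns.
X_good = np.array([[1.0, 0.0],
                   [0.0, 1.0],
                   [1.0, 1.0]])
y = np.array([1.0, 2.0, 2.0])     # arbitrary targets

for name, X in [("X_bad", X_bad), ("X_good", X_good)]:
    G = X.T @ X
    print(name, "rank:", np.linalg.matrix_rank(X),
          "det(X^T X):", round(np.linalg.det(G), 6))

# v = (2, -1) satisfies X_bad @ v = 0, so w0 and w0 + t * v fit equally well.
v = np.array([2.0, -1.0])
w0 = np.linalg.pinv(X_bad) @ y    # one minimizer (minimum-norm)
w1 = w0 + 3.0 * v                 # another minimizer
print(np.allclose(X_bad @ v, 0))
print(np.isclose(np.sum((y - X_bad @ w0) ** 2), np.sum((y - X_bad @ w1) ** 2)))
```

The printed determinant of $X^\top X$ is 0 for `X_bad` (up to rounding) and 3 for `X_good`. Since `X_bad @ v = 0` for $v = (2, -1)^\top$, `w0` and `w1` are two distinct minimizers with the same loss.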
