Question: Let us say we want to train a reinforcement learning (RL) agent with the objective to minimize voltage deviation from some reference value.

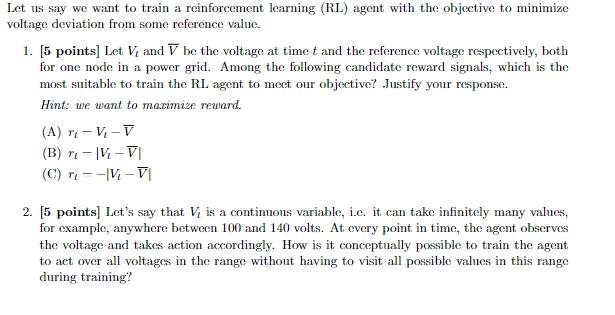

Let us say we want to train a reinforcement learning (RL) agent with the objective to minimize voltage deviation from some reference value.

1. [5 points] Let $V_t$ and $V$ be the voltage at time $t$ and the reference voltage, respectively, both for one node in a power grid. Among the following candidate reward signals, which is the most suitable to train the RL agent to meet our objective? Justify your response. Hint: we want to maximize reward.

   (A) $r = V_t - V$
   (B) $r = |V_t - V|$
   (C) $r = -|V_t - V|$

2. [5 points] Let's say that $V_t$ is a continuous variable, i.e. it can take infinitely many values, for example, anywhere between 100 and 140 volts. At every point in time, the agent observes the voltage and takes action accordingly. How is it conceptually possible to train the agent to act over all voltages in the range without having to visit all possible values in this range during training?
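
To make the two parts concrete, here is a minimal Python sketch under stated assumptions. It reads the three candidates as the signed deviation, the absolute deviation, and the negative absolute deviation, and checks which voltage a reward-maximizing agent would prefer under each. For part 2 it uses a small least-squares fit as a supervised stand-in for the function approximator an RL agent might use. This is one common way to generalize across a continuous state, not necessarily the intended answer. The reference value of 120 V, the sample voltages, the proportional `target_action` rule, and all function names are hypothetical choices made for illustration.

```python
# Hypothetical reference voltage (assumption: not given in the question).
V_REF = 120.0


def reward_a(v, v_ref=V_REF):
    """Candidate (A), read as the signed deviation r = V_t - V."""
    return v - v_ref


def reward_b(v, v_ref=V_REF):
    """Candidate (B), read as the absolute deviation r = |V_t - V|."""
    return abs(v - v_ref)


def reward_c(v, v_ref=V_REF):
    """Candidate (C), read as the negative absolute deviation r = -|V_t - V|."""
    return -abs(v - v_ref)


def best_voltage(reward_fn, voltages):
    """Voltage with the highest reward, i.e. what a maximizing agent is pushed toward."""
    return max(voltages, key=reward_fn)


# Part 1: which voltage does each candidate reward favor?
candidates = [100.0, 110.0, 120.0, 130.0, 140.0]
preferred = {
    "A": best_voltage(reward_a, candidates),
    "B": best_voltage(reward_b, candidates),
    "C": best_voltage(reward_c, candidates),
}


# Part 2: generalizing over a continuous voltage with a parameterized function.
def target_action(v, gain=0.5, v_ref=V_REF):
    """Hypothetical 'good' action: a correction proportional to the deviation."""
    return -gain * (v - v_ref)


def fit_linear(xs, ys):
    """Closed-form least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Only four voltages are "visited" during training.
train_voltages = [100.0, 110.0, 130.0, 140.0]
features = [v - V_REF for v in train_voltages]  # feature: deviation from reference
targets = [target_action(v) for v in train_voltages]
slope, intercept = fit_linear(features, targets)


def predict(v):
    """Action suggested by the fitted function for any voltage, visited or not."""
    return slope * (v - V_REF) + intercept


if __name__ == "__main__":
    print(preferred)
    print(predict(123.4))  # 123.4 V was never seen during the fit
```

With these illustrative numbers, candidate (A) favors 140 V, candidate (B) favors a voltage at the edge of the range, and only candidate (C) favors the reference voltage, which is consistent with the hint that reward is maximized. The fitted function has just two parameters, yet it returns an action for 123.4 V even though that value is not in `train_voltages`. In an actual RL setup a neural network or tile coding would typically play this role.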
