Question:


Lexer.cc

#include <iostream>
#include <istream>
#include <vector>
#include <string>
#include <cctype>

#include "lexer.h"
#include "inputbuf.h"

using namespace std;

string reserved[] = { "END_OF_FILE",
    "IF", "WHILE", "DO", "THEN", "PRINT",
    "PLUS", "MINUS", "DIV", "MULT",
    "EQUAL", "COLON", "COMMA", "SEMICOLON",
    "LBRAC", "RBRAC", "LPAREN", "RPAREN",
    "NOTEQUAL", "GREATER", "LESS", "LTEQ", "GTEQ",
    "DOT", "NUM", "ID", "ERROR" // TODO: Add labels for new token types here (as string)
};

#define KEYWORDS_COUNT 5
string keyword[] = { "IF", "WHILE", "DO", "THEN", "PRINT" };

void Token::Print()
{
    cout << "{" << this->lexeme << " , "
         << reserved[(int) this->token_type] << " , "
         << this->line_no << "}\n";
}

LexicalAnalyzer::LexicalAnalyzer()
{
    this->line_no = 1;
    tmp.lexeme = "";
    tmp.line_no = 1;
    tmp.token_type = ERROR;
}

bool LexicalAnalyzer::SkipSpace()
{
    char c;
    bool space_encountered = false;

    input.GetChar(c);
    line_no += (c == '\n');

    while (!input.EndOfInput() && isspace(c)) {
        space_encountered = true;
        input.GetChar(c);
        line_no += (c == '\n');
    }

    if (!input.EndOfInput()) {
        input.UngetChar(c);
    }
    return space_encountered;
}

bool LexicalAnalyzer::IsKeyword(string s)
{
    for (int i = 0; i < KEYWORDS_COUNT; i++) {
        if (s == keyword[i]) {
            return true;
        }
    }
    return false;
}

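TokenType LexicalAnalyzer::FindKeywordIndex(string s)
{
    for (int i = 0; i < KEYWORDS_COUNT; i++) {
        if (s == keyword[i]) {
            // keyword[i] corresponds to TokenType i + 1 (END_OF_FILE is 0)
            return (TokenType) (i + 1);
        }
    }
    return ERROR;
}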

Token LexicalAnalyzer::ScanNumber()
{
    char c;

    input.GetChar(c);
    if (isdigit(c)) {
        if (c == '0') {
            tmp.lexeme = "0";
        } else {
            tmp.lexeme = "";
            while (!input.EndOfInput() && isdigit(c)) {
                tmp.lexeme += c;
                input.GetChar(c);
            }
            if (!input.EndOfInput()) {
                input.UngetChar(c);
            }
        }
        // TODO: You can check for REALNUM, BASE08NUM and BASE16NUM here!
        tmp.token_type = NUM;
        tmp.line_no = line_no;
        return tmp;
    } else {
        if (!input.EndOfInput()) {
            input.UngetChar(c);
        }
        tmp.lexeme = "";
        tmp.token_type = ERROR;
        tmp.line_no = line_no;
        return tmp;
    }
}

Token LexicalAnalyzer::ScanIdOrKeyword()
{
    char c;
    input.GetChar(c);

    if (isalpha(c)) {
        tmp.lexeme = "";
        while (!input.EndOfInput() && isalnum(c)) {
            tmp.lexeme += c;
            input.GetChar(c);
        }
        if (!input.EndOfInput()) {
            input.UngetChar(c);
        }
        tmp.line_no = line_no;
        if (IsKeyword(tmp.lexeme))
            tmp.token_type = FindKeywordIndex(tmp.lexeme);
        else
            tmp.token_type = ID;
    } else {
        if (!input.EndOfInput()) {
            input.UngetChar(c);
        }
        tmp.lexeme = "";
        tmp.token_type = ERROR;
    }
    return tmp;
}

// you should unget tokens in the reverse order in which they
// are obtained. If you execute
//
//    t1 = lexer.GetToken();
//    t2 = lexer.GetToken();
//    t3 = lexer.GetToken();
//
// in this order, you should execute
//
//    lexer.UngetToken(t3);
//    lexer.UngetToken(t2);
//    lexer.UngetToken(t1);
//
// if you want to unget all three tokens. Note that it does not
// make sense to unget t1 without first ungetting t2 and t3
//
TokenType LexicalAnalyzer::UngetToken(Token tok)
{
    tokens.push_back(tok);
    return tok.token_type;
}

Token LexicalAnalyzer::GetToken()
{
    char c;

    // if there are tokens that were previously
    // stored due to UngetToken(), pop a token and
    // return it without reading from input
    if (!tokens.empty()) {
        tmp = tokens.back();
        tokens.pop_back();
        return tmp;
    }

    SkipSpace();
    tmp.lexeme = "";
    tmp.line_no = line_no;
    input.GetChar(c);
    switch (c) {
        case '.': tmp.token_type = DOT;       return tmp;
        case '+': tmp.token_type = PLUS;      return tmp;
        case '-': tmp.token_type = MINUS;     return tmp;
        case '/': tmp.token_type = DIV;       return tmp;
        case '*': tmp.token_type = MULT;      return tmp;
        case '=': tmp.token_type = EQUAL;     return tmp;
        case ':': tmp.token_type = COLON;     return tmp;
        case ',': tmp.token_type = COMMA;     return tmp;
        case ';': tmp.token_type = SEMICOLON; return tmp;
        case '[': tmp.token_type = LBRAC;     return tmp;
        case ']': tmp.token_type = RBRAC;     return tmp;
        case '(': tmp.token_type = LPAREN;    return tmp;
        case ')': tmp.token_type = RPAREN;    return tmp;
        case '<':
            input.GetChar(c);
            if (c == '=') {
                tmp.token_type = LTEQ;
            } else if (c == '>') {
                tmp.token_type = NOTEQUAL;
            } else {
                if (!input.EndOfInput()) {
                    input.UngetChar(c);
                }
                tmp.token_type = LESS;
            }
            return tmp;
        case '>':
            input.GetChar(c);
            if (c == '=') {
                tmp.token_type = GTEQ;
            } else {
                if (!input.EndOfInput()) {
                    input.UngetChar(c);
                }
                tmp.token_type = GREATER;
            }
            return tmp;
        default:
            if (isdigit(c)) {
                input.UngetChar(c);
                return ScanNumber();
            } else if (isalpha(c)) {
                input.UngetChar(c);
                return ScanIdOrKeyword();
            } else if (input.EndOfInput())
                tmp.token_type = END_OF_FILE;
            else
                tmp.token_type = ERROR;

            return tmp;
    }
}

int main()
{
    LexicalAnalyzer lexer;
    Token token;

    token = lexer.GetToken();
    token.Print();
    while (token.token_type != END_OF_FILE) {
        token = lexer.GetToken();
        token.Print();
    }
}

Lexer.h

#ifndef __LEXER__H__
#define __LEXER__H__

#include <vector>
#include <string>

#include "inputbuf.h"

// ------- token types -------------------

typedef enum { END_OF_FILE = 0,
    IF, WHILE, DO, THEN, PRINT,
    PLUS, MINUS, DIV, MULT,
    EQUAL, COLON, COMMA, SEMICOLON,
    LBRAC, RBRAC, LPAREN, RPAREN,
    NOTEQUAL, GREATER, LESS, LTEQ, GTEQ,
    DOT, NUM, ID, ERROR // TODO: Add labels for new token types here
} TokenType;

class Token {
  public:
    void Print();

    std::string lexeme;
    TokenType token_type;
    int line_no;
};

class LexicalAnalyzer {
  public:
    Token GetToken();
    TokenType UngetToken(Token);
    LexicalAnalyzer();

  private:
    std::vector<Token> tokens;
    int line_no;
    Token tmp;
    InputBuffer input;

    bool SkipSpace();
    bool IsKeyword(std::string);
    TokenType FindKeywordIndex(std::string);
    Token ScanIdOrKeyword();
    Token ScanNumber();
};

#endif //__LEXER__H__

inputbuf.cc

#include <iostream>
#include <istream>
#include <vector>
#include <string>
#include <cstdio>

#include "inputbuf.h"

using namespace std;

bool InputBuffer::EndOfInput()
{
    if (!input_buffer.empty())
        return false;
    else
        return cin.eof();
}

char InputBuffer::UngetChar(char c)
{
    if (c != EOF)
        input_buffer.push_back(c);
    return c;
}

void InputBuffer::GetChar(char& c)
{
    if (!input_buffer.empty()) {
        c = input_buffer.back();
        input_buffer.pop_back();
    } else {
        cin.get(c);
    }
}

string InputBuffer::UngetString(string s)
{
    for (int i = 0; i < (int) s.size(); i++) {
        input_buffer.push_back(s[s.size() - i - 1]);
    }
    return s;
}

inputbuf.h

#ifndef __INPUT_BUFFER__H__
#define __INPUT_BUFFER__H__

#include <string>
#include <vector>

class InputBuffer {
  public:
    void GetChar(char&);
    char UngetChar(char);
    std::string UngetString(std::string);
    bool EndOfInput();

  private:
    std::vector<char> input_buffer;
};

#endif //__INPUT_BUFFER__H__

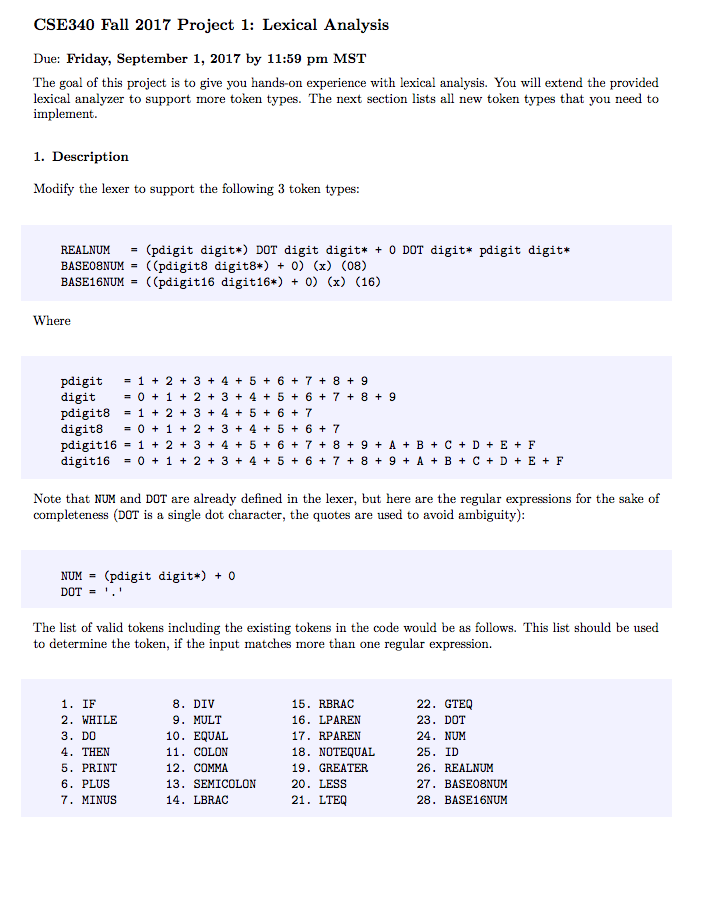

CSE340 Fall 2017 Project 1: Lexical Analysis
Due: Friday, September 1, 2017 by 11:59 pm MST

The goal of this project is to give you hands-on experience with lexical analysis. You will extend the provided lexical analyzer to support more token types. The next section lists all new token types that you need to implement.

1. Description

Modify the lexer to support the following 3 token types:

REALNUM   = ((pdigit digit*) DOT digit digit*) + (0 DOT digit* pdigit digit*)
BASE08NUM = ((pdigit8 digit8*) + 0) (x) (08)
BASE16NUM = ((pdigit16 digit16*) + 0) (x) (16)

where

pdigit   = 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9
digit    = 0 + 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9
pdigit8  = 1 + 2 + 3 + 4 + 5 + 6 + 7
digit8   = 0 + 1 + 2 + 3 + 4 + 5 + 6 + 7
pdigit16 = 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 + A + B + C + D + E + F
digit16  = 0 + 1 + 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 + A + B + C + D + E + F

Note that NUM and DOT are already defined in the lexer, but here are their regular expressions for the sake of completeness (DOT is a single dot character; the quotes are used to avoid ambiguity):

NUM = (pdigit digit*) + 0
DOT = '.'

The list of valid tokens, including the existing tokens in the code, is as follows. This list should be used to determine the token if the input matches more than one regular expression.

1. IF
2. WHILE
3. DO
4. THEN
5. PRINT
6. PLUS
7. MINUS
8. DIV
9. MULT
10. EQUAL
11. COLON
12. COMMA
13. SEMICOLON
14. LBRAC
15. RBRAC
16. LPAREN
17. RPAREN
18. NOTEQUAL
19. GREATER
20. LESS
21. LTEQ
22. GTEQ
23. DOT
24. NUM
25. ID
26. REALNUM
27. BASE08NUM
28. BASE16NUM
