
Linear Classification

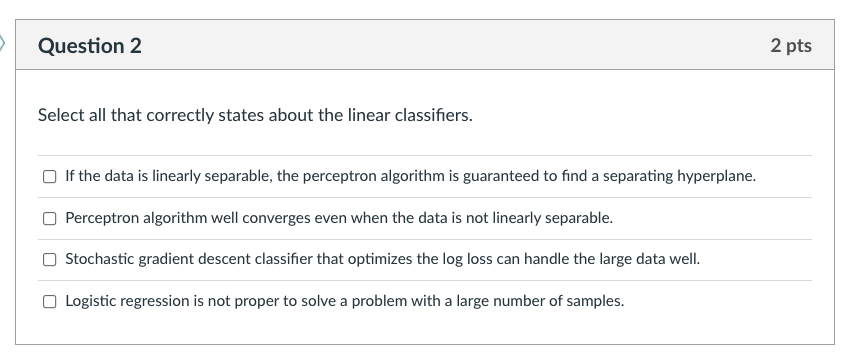

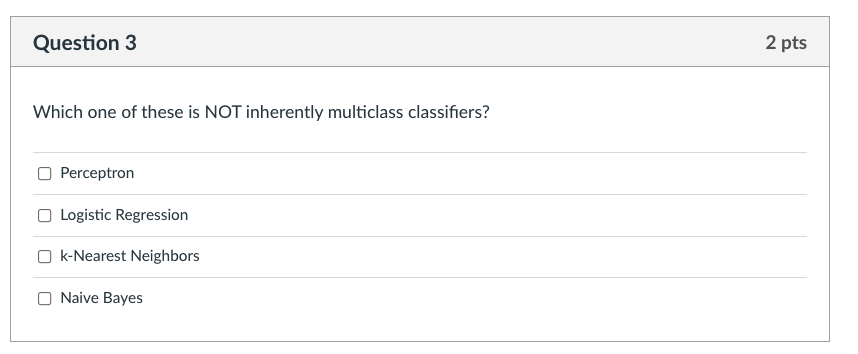

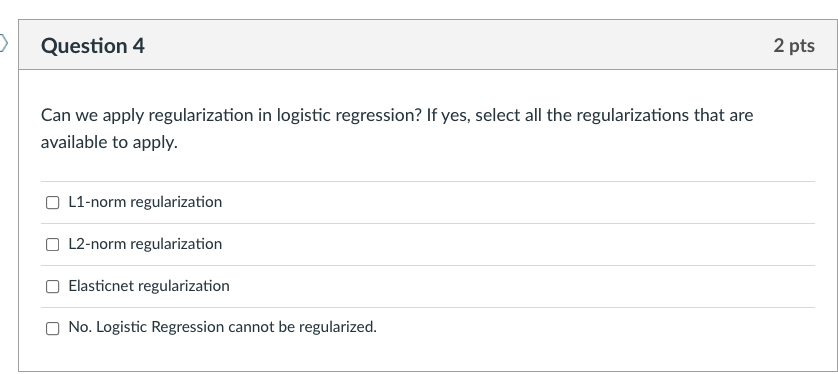

Question 2 (2 pts)

Select all statements that correctly describe linear classifiers.

- If the data is linearly separable, the perceptron algorithm is guaranteed to find a separating hyperplane.
- The perceptron algorithm converges well even when the data is not linearly separable.
- A stochastic gradient descent classifier that optimizes the log loss can handle large datasets well.
- Logistic regression is not suitable for problems with a large number of samples.

Question 3 (2 pts)

Which one of these is NOT an inherently multiclass classifier?

- Perceptron
- Logistic Regression
- k-Nearest Neighbors
- Naive Bayes

Question 4 (2 pts)

Can we apply regularization in logistic regression? If yes, select all the regularization types that can be applied.

- L1-norm regularization
- L2-norm regularization
- Elastic-net regularization
- No. Logistic regression cannot be regularized.
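The first two statements in Question 2 depend on the perceptron convergence theorem. The minimal pure-Python sketch below runs the classic perceptron update on two label sets. One is logical AND, which is linearly separable. The other is XOR, which no single line can separate. The toy data and the `max_epochs` cap are illustrative assumptions and are not part of the question.

```python
def perceptron(samples, labels, max_epochs=100):
    """Classic perceptron for labels in {-1, +1}.

    Returns (weights, bias, converged); converged is True only when a full
    pass over the data makes zero mistakes.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # misclassified or exactly on the boundary
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False


X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [-1, -1, -1, 1]  # logical AND: linearly separable
y_xor = [-1, 1, 1, -1]   # XOR: not linearly separable

w, b, ok_and = perceptron(X, y_and)
_, _, ok_xor = perceptron(X, y_xor)
print(ok_and)  # True
print(ok_xor)  # False
```

On AND, the loop reaches an epoch with zero mistakes and stops. On XOR, every epoch makes at least one mistake, so the weights keep changing until the epoch cap is hit.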
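The third statement in Question 2 is about scale. SGD updates the weights from one sample at a time, so its memory use grows with the number of features rather than the number of samples. The sketch below makes several assumptions:

- labels are in {0, 1};
- `make_stream` is a hypothetical synthetic data generator;
- the learning rate and penalty strength are arbitrary illustrative values;
- the elastic-net penalty uses a mixing parameter `l1_ratio`, where `l1_ratio=0` gives pure L2 and `l1_ratio=1` gives pure L1. This parameter also relates to Question 4.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sgd_log_loss_epoch(stream, w, b, lr=0.1, alpha=1e-4, l1_ratio=0.15):
    """One SGD pass on the log loss, consuming (x, y) pairs one at a time.

    Penalty: alpha * (l1_ratio * |w|_1 + (1 - l1_ratio) / 2 * |w|_2^2).
    Only w and b are held in memory, never the whole dataset.
    """
    for x, y in stream:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        err = p - y  # derivative of the log loss with respect to the logit
        for j, xj in enumerate(x):
            sign = (w[j] > 0) - (w[j] < 0)  # subgradient of |w_j|
            grad = err * xj + alpha * (l1_ratio * sign + (1 - l1_ratio) * w[j])
            w[j] -= lr * grad
        b -= lr * err  # the bias is left unpenalized
    return w, b


def make_stream(n, seed):
    # Hypothetical generator: the label is 1 when x0 + x1 > 0.
    rng = random.Random(seed)
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        yield x, int(x[0] + x[1] > 0)


w, b = [0.0, 0.0], 0.0
for epoch in range(5):
    w, b = sgd_log_loss_epoch(make_stream(10_000, seed=epoch), w, b)

holdout = list(make_stream(1_000, seed=99))
acc = sum(
    (sigmoid(w[0] * x[0] + w[1] * x[1] + b) > 0.5) == bool(y) for x, y in holdout
) / len(holdout)
print(round(acc, 3))
```

Because `make_stream` is a generator, the training loop never materializes the full dataset. Replacing it with a reader over a file or database cursor should keep the same constant-memory loop.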
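For Question 3, note that the perceptron update rule is defined for exactly two labels (±1). A multiclass perceptron is therefore usually assembled from binary ones, for example with one-vs-rest: train one model per class and predict the class with the highest score. k-Nearest Neighbors, by contrast, handles any number of labels with a plain majority vote. The three-cluster toy data below is a made-up assumption, chosen so that each class is linearly separable from the rest.

```python
from collections import Counter


def train_binary_perceptron(X, y, max_epochs=1000):
    # Binary perceptron: labels must be -1 or +1.
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, t in zip(X, y):
            if t * (sum(wi * xi for wi, xi in zip(w, x)) + b) <= 0:
                w = [wi + t * xi for wi, xi in zip(w, x)]
                b += t
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def train_one_vs_rest(X, labels):
    # One binary perceptron per class: this class (+1) vs. all others (-1).
    return {
        c: train_binary_perceptron(X, [1 if lab == c else -1 for lab in labels])
        for c in sorted(set(labels))
    }


def predict_one_vs_rest(models, x):
    # Pick the class whose binary model produces the highest score.
    def score(c):
        w, b = models[c]
        return sum(wi * xi for wi, xi in zip(w, x)) + b
    return max(models, key=score)


def predict_knn(X, labels, x, k=3):
    # k-NN needs no wrapper: a majority vote works for any number of classes.
    dists = sorted(
        (sum((a - q) ** 2 for a, q in zip(p, x)), lab) for p, lab in zip(X, labels)
    )
    return Counter(lab for _, lab in dists[:k]).most_common(1)[0][0]


X = [(0, 5), (1, 6), (-1, 6), (-5, -3), (-6, -4), (-4, -4), (5, -3), (6, -4), (4, -4)]
labels = ["a", "a", "a", "b", "b", "b", "c", "c", "c"]

models = train_one_vs_rest(X, labels)
print([predict_one_vs_rest(models, x) for x in X])
print(predict_knn(X, labels, (0, 4)))
```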
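For Question 4, one common way to write regularized logistic regression adds a penalty term to the average log loss. It assumes labels $y_i \in \{0,1\}$ and the sigmoid $\sigma(z) = 1/(1+e^{-z})$. The symbols $\alpha$ (penalty strength) and $\rho$ (L1 mixing weight) are notation chosen here for illustration:

$$
\min_{w,\,b}\; \frac{1}{n}\sum_{i=1}^{n} -\Bigl[y_i \log \sigma(w^\top x_i + b) + (1 - y_i)\log\bigl(1 - \sigma(w^\top x_i + b)\bigr)\Bigr] + \alpha\Bigl(\rho\,\lVert w\rVert_1 + \frac{1-\rho}{2}\,\lVert w\rVert_2^2\Bigr)
$$

Setting $\rho = 1$ gives L1 regularization, $\rho = 0$ gives L2 regularization, and $0 < \rho < 1$ gives the elastic-net mix. The bias $b$ is left unpenalized, as is conventional.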
