Question: Machine Learning Help 4. [Ridge Regression (*), 20 bonus points]

Machine Learning Help

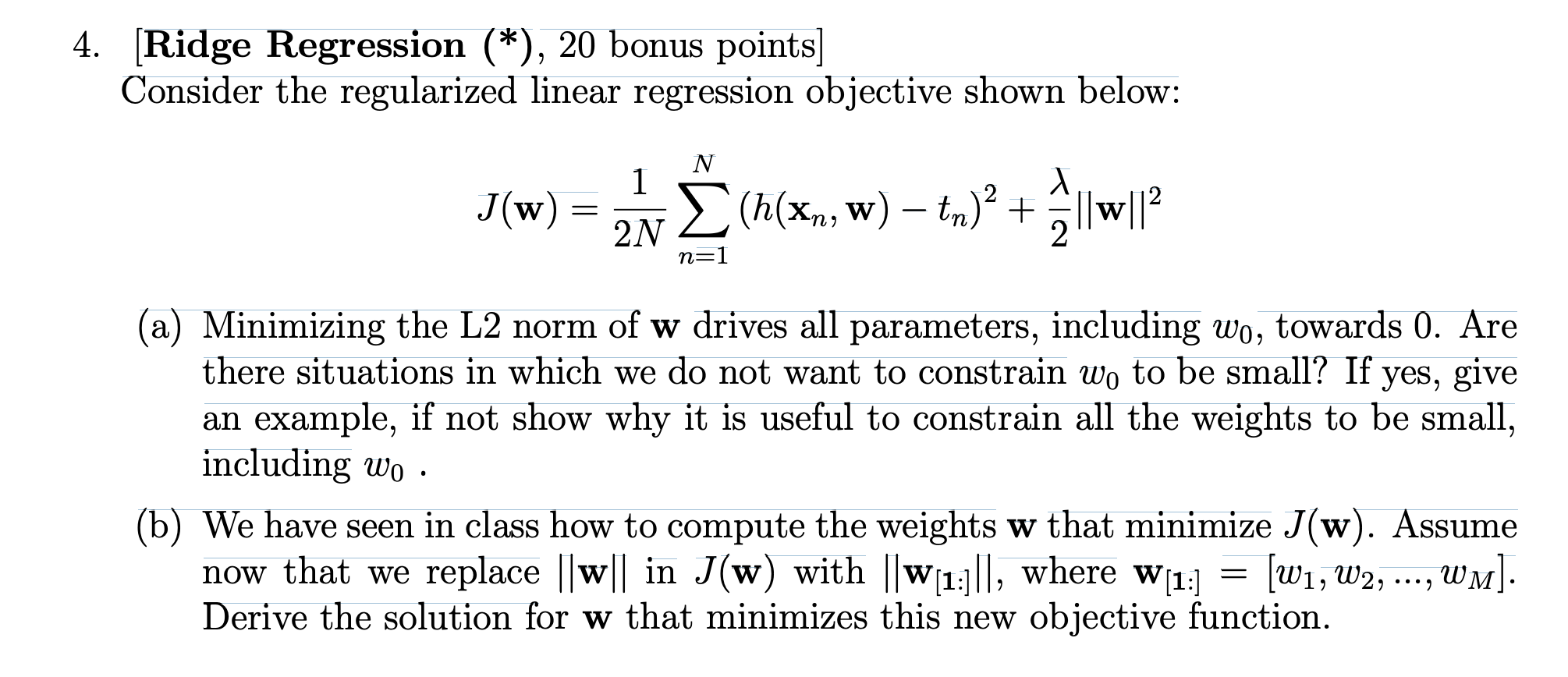

4. [Ridge Regression (*), 20 bonus points] Consider the regularized linear regression objective shown below:

$$J(\mathbf{w}) = \frac{1}{2N}\sum_{n=1}^{N}\bigl(h(\mathbf{x}_n, \mathbf{w}) - t_n\bigr)^2 + \|\mathbf{w}\|^2$$

(a) Minimizing the L2 norm of $\mathbf{w}$ drives all parameters, including $w_0$, towards 0. Are there situations in which we do not want to constrain $w_0$ to be small? If yes, give an example; if not, show why it is useful to constrain all the weights to be small, including $w_0$.

(b) We have seen in class how to compute the weights $\mathbf{w}$ that minimize $J(\mathbf{w})$. Assume now that we replace $\|\mathbf{w}\|$ in $J(\mathbf{w})$ with $\|\mathbf{w}_{[1:]}\|$, where $\mathbf{w}_{[1:]} = [w_1, w_2, \ldots, w_M]$. Derive the solution for $\mathbf{w}$ that minimizes this new objective function.
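One way to approach part (b) can be sketched numerically. The sketch below makes several assumptions that are not stated in the question: the model is linear in the weights, $h(\mathbf{x}, \mathbf{w}) = \mathbf{w}^\top \boldsymbol{\phi}(\mathbf{x})$ with a constant first feature $\phi_0 = 1$; the design matrix is called `Phi`; and the penalty is written as $\frac{\lambda}{2}\|\mathbf{w}_{[1:]}\|^2$ with a hypothetical strength `lam` (no coefficient is legible in the question text, and `lam = 2` corresponds to the penalty taken at face value). Under those assumptions, setting the gradient to zero gives $\mathbf{w} = (\Phi^\top \Phi + \lambda N \hat{I})^{-1} \Phi^\top \mathbf{t}$, where $\hat{I}$ is the identity matrix with its top-left entry set to 0 so that $w_0$ is not penalized. The function name `ridge_unpenalized_bias` is illustrative only.

```python
import numpy as np


def ridge_unpenalized_bias(Phi, t, lam):
    """Closed-form minimizer of
        (1 / (2N)) * ||Phi @ w - t||^2 + (lam / 2) * ||w[1:]||^2
    assuming the first column of Phi is all ones (the bias feature).
    """
    N, D = Phi.shape
    # Identity with a zero in the top-left corner: w[0] is left unpenalized.
    I_hat = np.eye(D)
    I_hat[0, 0] = 0.0
    # Zero gradient: (1/N) Phi^T (Phi w - t) + lam * I_hat w = 0
    A = Phi.T @ Phi + lam * N * I_hat
    return np.linalg.solve(A, Phi.T @ t)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(50, 3))
    Phi = np.hstack([np.ones((50, 1)), X])
    # Targets with a large constant offset, e.g. temperatures in kelvin.
    t = X @ np.array([1.0, -2.0, 0.5]) + 300.0
    w = ridge_unpenalized_bias(Phi, t, lam=0.5)
    print("bias w0:", w[0])
    print("other weights:", w[1:])
```

The demo also bears on part (a): when the targets carry a large constant offset, shrinking $w_0$ towards 0 would force the model to underfit that offset, whereas leaving $w_0$ unpenalized lets it absorb the offset while the remaining weights are still regularized.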
