Question: Python code for SVD image compression on the Olivetti Faces dataset (scikit-learn)

#MachineLearning #PythonProgramming

Please help. WILL UPVOTE!

Please provide the Python code for the question below. Use the Olivetti Faces dataset from scikit-learn.

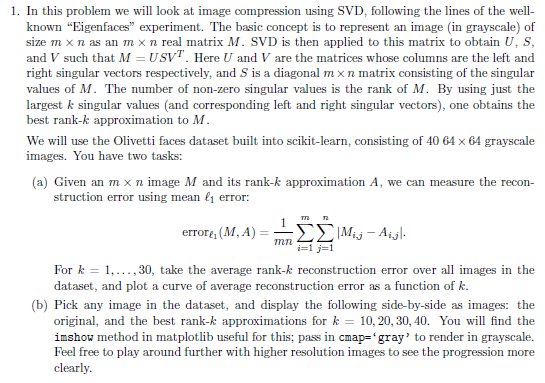

1. In this problem we will look at image compression using SVD, following the lines of the well-known "Eigenfaces" experiment. The basic concept is to represent a grayscale image of size $m \times n$ as an $m \times n$ real matrix $M$. SVD is then applied to this matrix to obtain $U$, $S$, and $V$ such that $M = U S V^T$. Here $U$ and $V$ are the matrices whose columns are the left and right singular vectors respectively, and $S$ is a diagonal $m \times n$ matrix consisting of the singular values of $M$. The number of non-zero singular values is the rank of $M$. By using just the largest $k$ singular values (and the corresponding left and right singular vectors), one obtains the best rank-$k$ approximation to $M$. We will use the Olivetti faces dataset built into scikit-learn, consisting of 400 grayscale images of size $64 \times 64$. You have two tasks:

   (a) Given an $m \times n$ image $M$ and its rank-$k$ approximation $A$, we can measure the reconstruction error using the mean $\ell_1$ error:

   $$\text{error}_{\ell_1}(M, A) = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} |M_{ij} - A_{ij}|.$$

   For $k = 1, \ldots, 30$, take the average rank-$k$ reconstruction error over all images in the dataset, and plot a curve of average reconstruction error as a function of $k$.

   (b) Pick any image in the dataset, and display the following side-by-side as images: the original, and the best rank-$k$ approximations for $k = 10, 20, 30, 40$. You will find the `imshow` method in matplotlib useful for this; pass in `cmap="gray"` to render in grayscale. Feel free to play around further with higher resolution images to see the progression more clearly.
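The rank-k truncation and the error measure from the problem statement can be sketched with NumPy's `np.linalg.svd`. This is only an illustration: the helper names `rank_k_approximation` and `mean_l1_error` are made up here, and a random matrix stands in for an actual image.

```python
import numpy as np


def rank_k_approximation(M, k):
    """Rank-k approximation of M built from its k largest singular values."""
    # full_matrices=False returns the thin SVD: U is m x r, s has r entries,
    # Vt is r x n, with r = min(m, n) and s sorted in descending order.
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    # Scale the first k columns of U by the singular values, then multiply
    # by the first k rows of V^T.
    return (U[:, :k] * s[:k]) @ Vt[:k, :]


def mean_l1_error(M, A):
    """Mean absolute difference between M and A over all m * n entries."""
    return np.mean(np.abs(M - A))


# Illustrative stand-in for a 64 x 64 grayscale image (not real data).
rng = np.random.default_rng(0)
M = rng.random((64, 64))

A10 = rank_k_approximation(M, 10)
print(np.linalg.matrix_rank(A10))  # rank of the approximation
print(mean_l1_error(M, A10))       # reconstruction error at k = 10
```

Note that `np.linalg.svd` returns the singular values as a 1-D array and returns $V^T$ directly, so no extra transpose is needed when rebuilding the matrix.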

Step by Step Solution
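One possible way to approach both tasks is sketched below; it is not a verified reference solution. It assumes `fetch_olivetti_faces` from scikit-learn, whose `.images` attribute holds the faces as a `(400, 64, 64)` array, and it relies on `np.linalg.svd` operating on the last two axes so that one call decomposes every image. The names `rank_k_stack`, `average_errors`, and `main` are illustrative, and using `images[0]` for part (b) is an arbitrary choice.

```python
import numpy as np


def rank_k_stack(U, s, Vt, k):
    """Rank-k approximations for a stack of images, given their thin SVDs."""
    # U: (N, m, r), s: (N, r), Vt: (N, r, n). Keep the k leading components.
    return (U[:, :, :k] * s[:, None, :k]) @ Vt[:, :k, :]


def average_errors(images, ks):
    """Average mean-l1 reconstruction error over all images, for each k in ks."""
    # One batched SVD call handles every image in the stack.
    U, s, Vt = np.linalg.svd(images, full_matrices=False)
    # All images share the same size, so the mean over every entry equals
    # the average of the per-image mean l1 errors.
    return [np.mean(np.abs(images - rank_k_stack(U, s, Vt, k))) for k in ks]


def main():
    # Imported here so the helpers above only need NumPy.
    import matplotlib.pyplot as plt
    from sklearn.datasets import fetch_olivetti_faces

    # The data is downloaded on first use and cached afterwards.
    images = fetch_olivetti_faces().images.astype(np.float64)

    # (a) Average reconstruction error for k = 1, ..., 30.
    ks = list(range(1, 31))
    plt.figure()
    plt.plot(ks, average_errors(images, ks), marker="o")
    plt.xlabel("k")
    plt.ylabel("average mean l1 reconstruction error")
    plt.title("Rank-k reconstruction error, Olivetti faces")

    # (b) Original next to its rank-k approximations.
    img = images[0:1]  # keep the leading axis so rank_k_stack applies
    U, s, Vt = np.linalg.svd(img, full_matrices=False)
    panels = [("original", img[0])]
    panels += [(f"k = {k}", rank_k_stack(U, s, Vt, k)[0]) for k in (10, 20, 30, 40)]
    fig, axes = plt.subplots(1, len(panels), figsize=(15, 3))
    for ax, (title, picture) in zip(axes, panels):
        ax.imshow(picture, cmap="gray")
        ax.set_title(title)
        ax.axis("off")
    plt.show()
```

Calling `main()` should show the error curve for part (a) and the five-panel comparison for part (b). Computing the SVD once and slicing it for each k avoids repeating the decomposition 30 times.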
