Question: Markov Chain

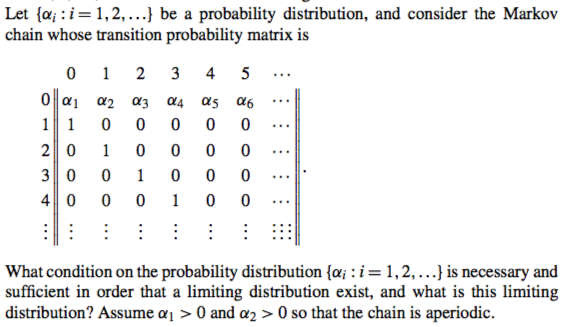

Let $\{\alpha_i : i = 1, 2, \ldots\}$ be a probability distribution, and consider the Markov chain whose transition probability matrix is

What condition on the probability distribution $\{\alpha_i : i = 1, 2, \ldots\}$ is necessary and sufficient in order that a limiting distribution exist, and what is this limiting distribution? Assume $\alpha_1 > 0$ and $\alpha_2 > 0$ so that the chain is aperiodic.

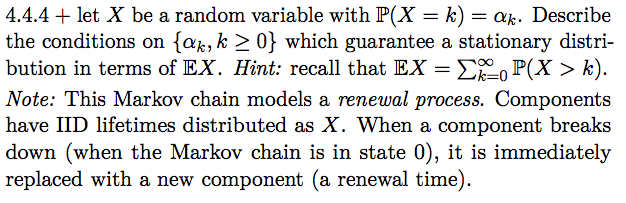

+ Let $X$ be a random variable with $P(X = k) = \alpha_k$. Describe the conditions on $\{\alpha_k, k \ge 0\}$ which guarantee a stationary distribution in terms of $EX$. Hint: recall that $EX = \sum_{k=0}^{\infty} P(X > k)$.
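The identity in the hint can be checked by swapping the order of summation. The sketch below assumes only that $X$ takes values in the nonnegative integers, so every term is nonnegative and the swap is allowed:

$$
\sum_{k=0}^{\infty} P(X > k)
= \sum_{k=0}^{\infty} \sum_{j=k+1}^{\infty} P(X = j)
= \sum_{j=1}^{\infty} \sum_{k=0}^{j-1} P(X = j)
= \sum_{j=1}^{\infty} j \, P(X = j)
= EX.
$$

Both sides may equal $+\infty$, which is the case to keep in mind when asking whether a stationary distribution can be normalized.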

Note: This Markov chain models a renewal process. Components have IID lifetimes distributed as X. When a component breaks down (when the Markov chain is in state 0), it is immediately replaced with a new component (a renewal time).
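The renewal interpretation can be explored numerically. The following is a minimal sketch, not a proof, under two assumptions that are not part of the question: the lifetime distribution has finite support (a hypothetical example `alpha = [0.5, 0.3, 0.2]`), and the chain jumps from state 0 to state j with probability alpha_{j+1} and steps down from state i to i - 1 otherwise. It iterates the chain from state 0 and compares the result with the candidate formula pi_j = P(X > j) / EX. All function names are illustrative.

```python
def transition_matrix(alpha):
    """Transition matrix on states 0..n-1, where alpha[k-1] = P(X = k)."""
    n = len(alpha)
    P = [[0.0] * n for _ in range(n)]
    P[0] = [float(a) for a in alpha]   # state 0 -> state j with probability alpha_{j+1}
    for i in range(1, n):
        P[i][i - 1] = 1.0              # state i >= 1 -> state i - 1 with probability 1
    return P


def step(pi, P):
    """One step of the distribution update pi <- pi P."""
    n = len(pi)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


def limiting_by_iteration(P, steps=500):
    """Approximate the limiting distribution by iterating from state 0."""
    pi = [1.0] + [0.0] * (len(P) - 1)
    for _ in range(steps):
        pi = step(pi, P)
    return pi


def tail_formula(alpha):
    """Candidate distribution pi_j = P(X > j) / E[X] for j = 0..n-1."""
    mean = sum(k * a for k, a in enumerate(alpha, start=1))
    tails = []
    remaining = 1.0
    for a in alpha:
        tails.append(remaining)        # P(X > j) before removing P(X = j + 1)
        remaining -= a
    return [t / mean for t in tails]


alpha = [0.5, 0.3, 0.2]                # hypothetical lifetime distribution (assumption)
P = transition_matrix(alpha)
numeric = limiting_by_iteration(P)
closed = tail_formula(alpha)
for j, (a, b) in enumerate(zip(numeric, closed)):
    print(f"state {j}: iterated {a:.6f}, tail formula {b:.6f}")
```

With finite support the mean is automatically finite, so this example cannot show what happens when $EX = \infty$; it only illustrates how the tail probabilities and the mean enter the candidate distribution.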

For problem 4.4.4, the transition probability matrix referred to above, with rows and columns indexed by the states 0, 1, 2, ..., is

$$
P =
\begin{array}{c|ccccccc}
  & 0 & 1 & 2 & 3 & 4 & 5 & \cdots \\
\hline
0 & \alpha_1 & \alpha_2 & \alpha_3 & \alpha_4 & \alpha_5 & \alpha_6 & \cdots \\
1 & 1 & 0 & 0 & 0 & 0 & 0 & \cdots \\
2 & 0 & 1 & 0 & 0 & 0 & 0 & \cdots \\
3 & 0 & 0 & 1 & 0 & 0 & 0 & \cdots \\
4 & 0 & 0 & 0 & 1 & 0 & 0 & \cdots \\
\vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \ddots
\end{array}
$$
